Posterior Control of Blackbox Generation

Xiang Lisa Li, Alexander Rush

Machine Learning for NLP Long Paper

Session 4B: Jul 6 (18:00-19:00 GMT)

Session 5B: Jul 6 (21:00-22:00 GMT)

Abstract:

Text generation often requires high-precision output that obeys task-specific rules. This fine-grained control is difficult to enforce with off-the-shelf deep learning models. In this work, we consider augmenting neural generation models with discrete control states learned through a structured latent-variable approach. Under this formulation, task-specific knowledge can be encoded through a range of rich, posterior constraints that are effectively trained into the model. This approach allows users to ground internal model decisions based on prior knowledge, without sacrificing the representational power of neural generative models. Experiments consider applications of this approach for text generation. We find that this method improves over standard benchmarks, while also providing fine-grained control.

Similar Papers

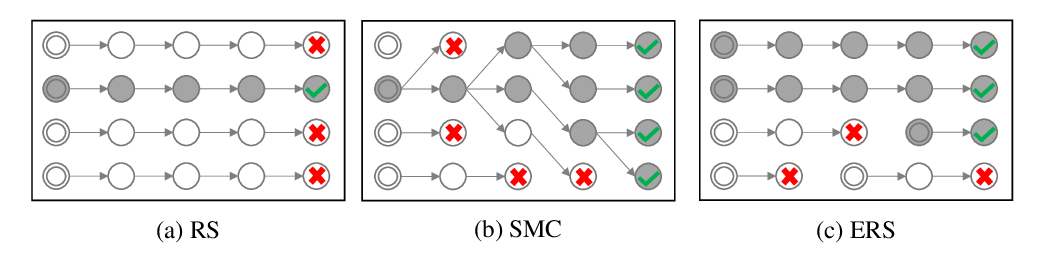

Do you have the right scissors? Tailoring Pre-trained Language Models via Monte-Carlo Methods

Ning Miao, Yuxuan Song, Hao Zhou, Lei Li

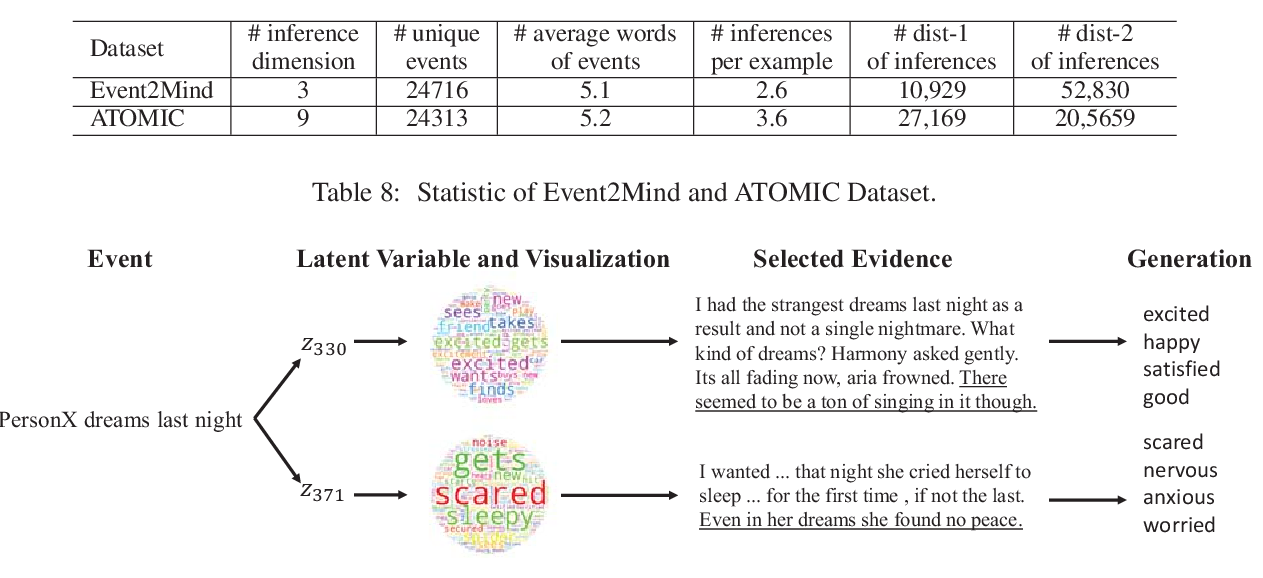

Evidence-Aware Inferential Text Generation with Vector Quantised Variational AutoEncoder

Daya Guo, Duyu Tang, Nan Duan, Jian Yin, Daxin Jiang, Ming Zhou

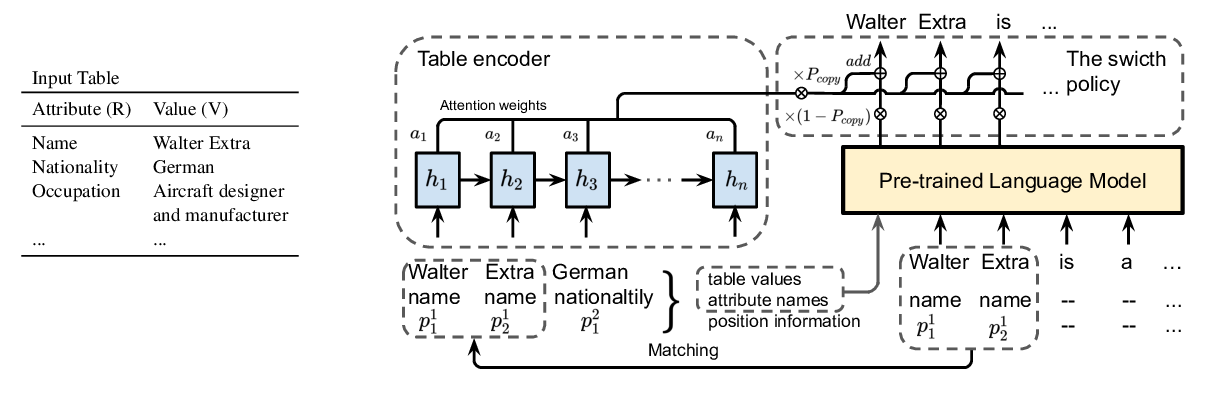

Few-Shot NLG with Pre-Trained Language Model

Zhiyu Chen, Harini Eavani, Wenhu Chen, Yinyin Liu, William Yang Wang

Learning Implicit Text Generation via Feature Matching

Inkit Padhi, Pierre Dognin, Ke Bai, Cícero Nogueira dos Santos, Vijil Chenthamarakshan, Youssef Mroueh, Payel Das