Mitigating Gender Bias Amplification in Distribution by Posterior Regularization

Shengyu Jia, Tao Meng, Jieyu Zhao, Kai-Wei Chang

Ethics and NLP Short Paper

Session 6A: Jul 7 (05:00-06:00 GMT)

Session 10B: Jul 7 (21:00-22:00 GMT)

Abstract:

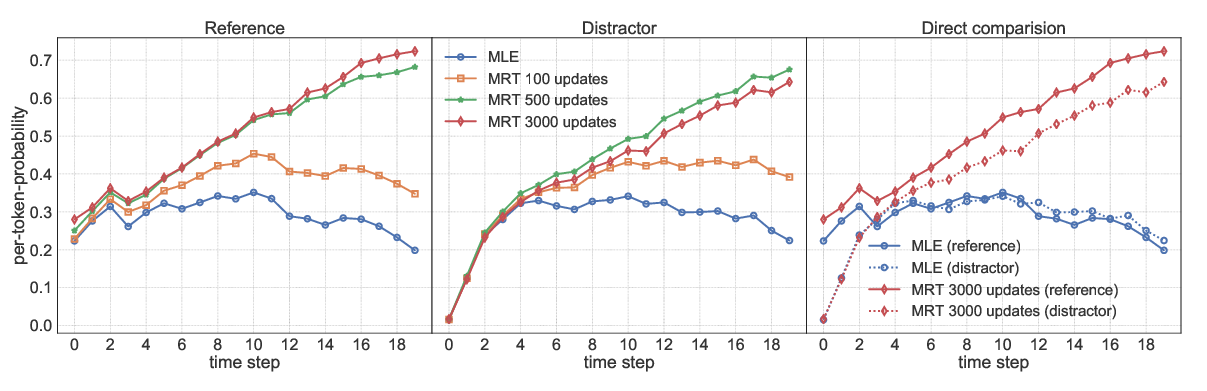

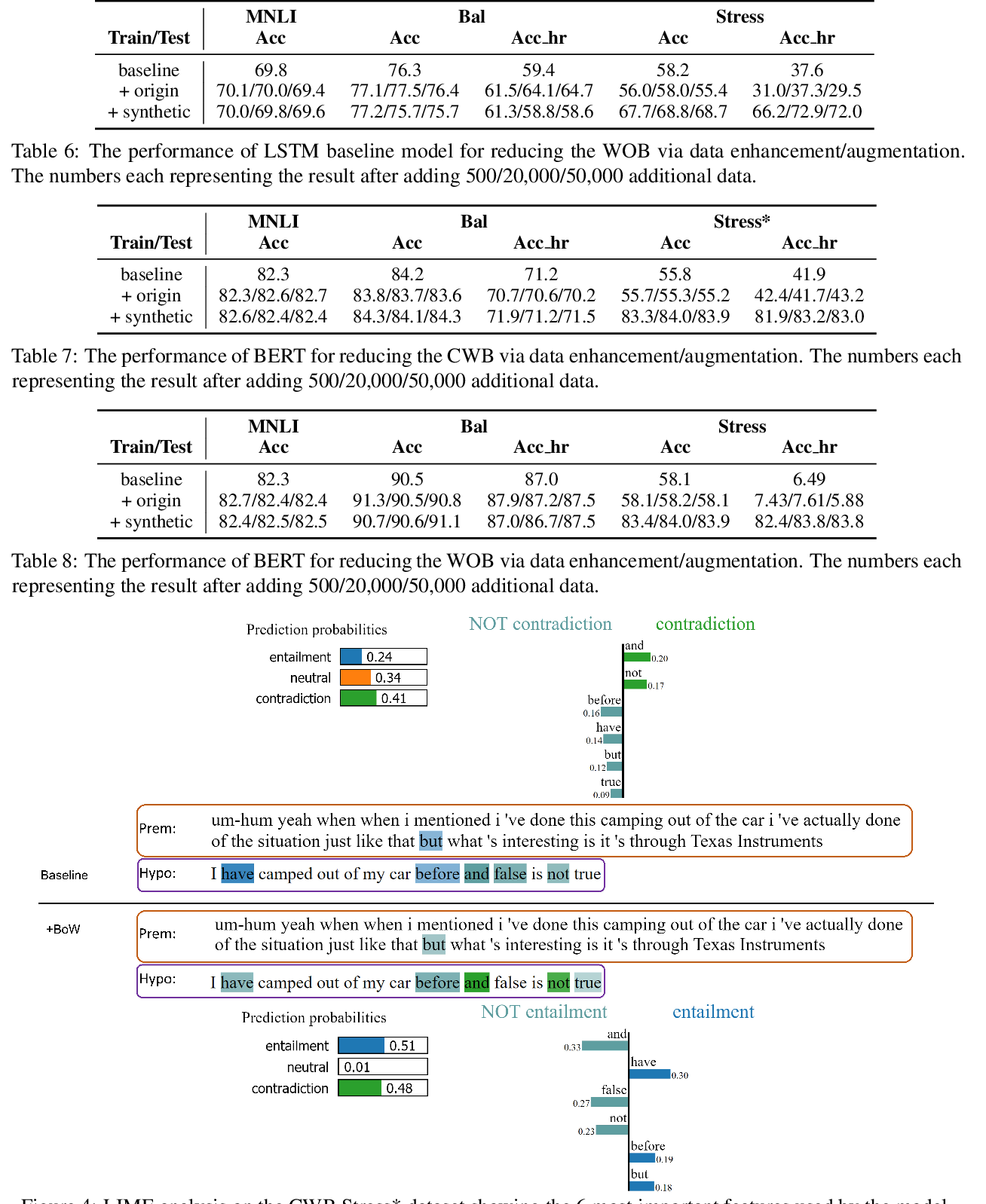

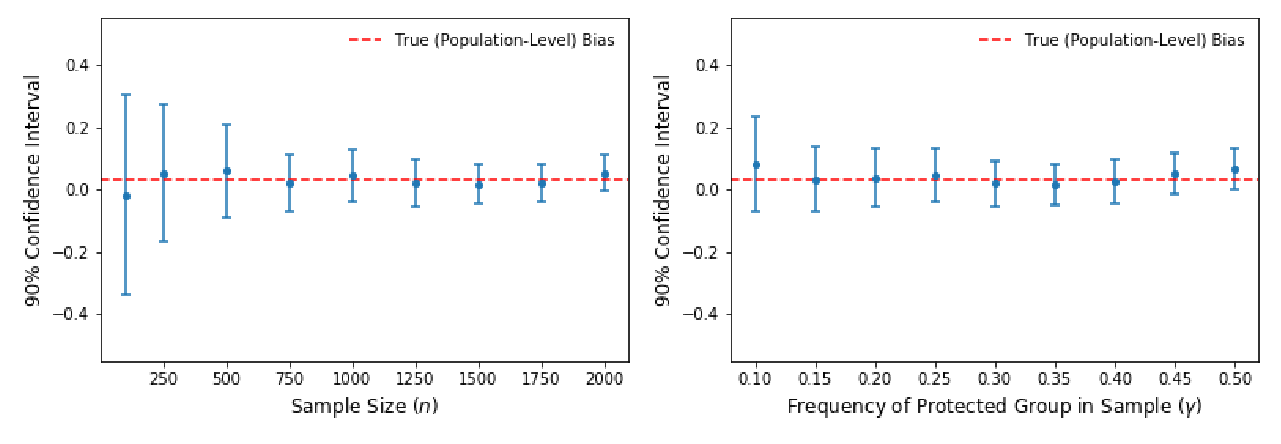

Advanced machine learning techniques have boosted performance on natural language processing tasks. Nevertheless, recent studies show that these techniques inadvertently capture the societal bias hidden in the corpus and further amplify it. However, these analyses consider only the models' top predictions. In this paper, we investigate gender bias amplification from the distribution perspective and demonstrate that the bias is amplified when viewed through the predicted probability distribution over labels. We further propose a bias mitigation approach based on posterior regularization. With little loss in performance, our method almost entirely removes the bias amplification in the distribution. Our study sheds light on understanding bias amplification.
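A minimal Python sketch can make the abstract's two ideas concrete. It rests on simplifying assumptions that are ours, not the paper's. Each instance has only two labels (man/woman) for a single verb. The "distribution bias" is taken to be the mean predicted P(man). The training-set bias `train_bias` and a tolerance `margin` are assumed to be given. Posterior regularization is illustrated as a KL projection: find the distributions q closest in KL divergence to the model's p such that the mean P(man) lies within `train_bias ± margin`. For a single linear constraint of this kind, the solution has the exponential-tilt form q(y) proportional to p(y)·exp(-λ·φ(y)), with one shared multiplier λ found here by bisection. All function names are hypothetical, and this is not the authors' implementation.

```python
import math
from typing import List


def distribution_bias(p_man: List[float]) -> float:
    """Bias toward 'man' measured on the predicted probabilities.

    Assumption: each entry is P(man | instance) and there are only two labels,
    so P(woman) = 1 - P(man) and the bias ratio reduces to the mean P(man).
    """
    return sum(p_man) / len(p_man)


def top_prediction_bias(p_man: List[float]) -> float:
    """Bias measured only on each instance's top (argmax) prediction."""
    return sum(1.0 for p in p_man if p > 0.5) / len(p_man)


def tilt(p: float, lam: float) -> float:
    """Exponential tilt: q(man) proportional to p(man) * exp(-lam), q(woman) to p(woman)."""
    a = p * math.exp(-lam)
    return a / (a + (1.0 - p))


def project_to_band(p_man: List[float], train_bias: float, margin: float,
                    iters: int = 100) -> List[float]:
    """KL-project predictions so that their mean P(man) lies in train_bias +/- margin.

    Minimizes sum_i KL(q_i || p_i) subject to the band constraint. The solution
    tilts every instance with one shared multiplier lam, found by bisection
    because the mean of the tilted probabilities decreases monotonically in lam.
    """
    current = distribution_bias(p_man)
    # Nearest admissible mean; when violated, the projection lands on the band edge.
    target = min(max(current, train_bias - margin), train_bias + margin)
    if target == current:
        return list(p_man)  # constraint already satisfied: q = p (lam = 0)
    lo, hi = -50.0, 50.0
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if distribution_bias([tilt(p, mid) for p in p_man]) > target:
            lo = mid  # mean still above target: increase lam
        else:
            hi = mid  # mean at or below target: decrease lam
    lam = (lo + hi) / 2.0
    return [tilt(p, lam) for p in p_man]


if __name__ == "__main__":
    preds = [0.9, 0.8, 0.7, 0.6]  # toy P(man) values for four instances
    print(top_prediction_bias(preds), distribution_bias(preds))
    q = project_to_band(preds, train_bias=0.6, margin=0.05)
    print([round(x, 3) for x in q], round(distribution_bias(q), 3))
```

The toy run illustrates that the two measurements can differ: every instance's top prediction is "man" (top-prediction bias 1.0), while the probability mass gives a mean of about 0.75. The projection then lowers the mean to the upper edge of the band, about 0.65, changing each distribution as little as possible in KL divergence. Bisection is used only for self-containment; any one-dimensional root finder would do.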


Similar Papers

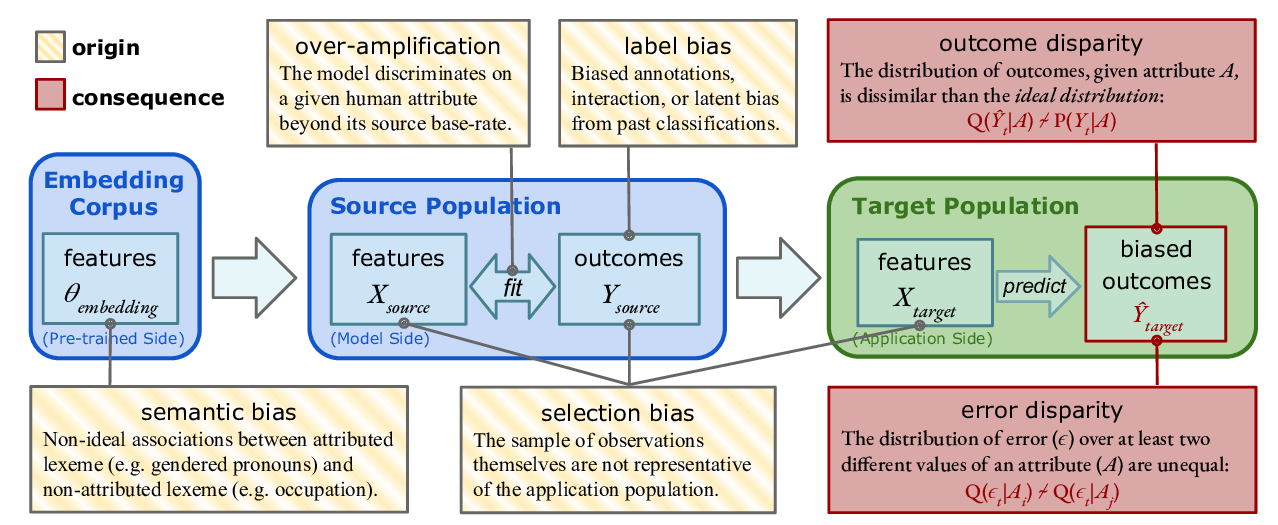

Predictive Biases in Natural Language Processing Models: A Conceptual Framework and Overview

Deven Santosh Shah, H. Andrew Schwartz, Dirk Hovy

On Exposure Bias, Hallucination and Domain Shift in Neural Machine Translation

Chaojun Wang, Rico Sennrich