Improving Transformer Models by Reordering their Sublayers

Ofir Press, Noah A. Smith, Omer Levy

Machine Learning for NLP Long Paper

Session 6A: Jul 7 (05:00-06:00 GMT)

Session 10B: Jul 7 (21:00-22:00 GMT)

Abstract:

Multilayer transformer networks consist of interleaved self-attention and feedforward sublayers. Could ordering the sublayers in a different pattern lead to better performance? We generate randomly ordered transformers and train them with the language modeling objective. We observe that some of these models are able to achieve better performance than the interleaved baseline, and that those successful variants tend to have more self-attention at the bottom and more feedforward sublayers at the top. We propose a new transformer pattern that adheres to this property, the sandwich transformer, and show that it improves perplexity on multiple word-level and character-level language modeling benchmarks, at no cost in parameters, memory, or training time. However, the sandwich reordering pattern does not guarantee performance gains across every task, as we demonstrate on machine translation models. Instead, we suggest that further exploration of task-specific sublayer reorderings is needed in order to unlock additional gains.
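The orderings described in the abstract can be written as strings over two symbols, `s` for a self-attention sublayer and `f` for a feedforward sublayer. The sketch below is illustrative only and makes several assumptions not stated in the abstract: the function names are hypothetical, the sandwich pattern is parameterized as `k` self-attention sublayers, then `n - k` interleaved pairs, then `k` feedforward sublayers (our reading of the full paper), and the random orderings keep the number of each sublayer type balanced.

```python
import random


def interleaved(n):
    """Baseline ordering: n (self-attention, feedforward) pairs."""
    return "sf" * n


def sandwich(n, k):
    """Sandwich ordering (assumed form): s^k (sf)^(n-k) f^k.

    Uses the same number of 's' and 'f' sublayers as interleaved(n),
    so the parameter count is unchanged; k = 0 recovers the baseline.
    """
    if not 0 <= k <= n:
        raise ValueError("k must satisfy 0 <= k <= n")
    return "s" * k + "sf" * (n - k) + "f" * k


def random_balanced(n, seed=None):
    """Random ordering with exactly n 's' and n 'f' sublayers (assumption)."""
    layers = list("s" * n + "f" * n)
    random.Random(seed).shuffle(layers)
    return "".join(layers)
```

For example, `sandwich(4, 2)` returns `"sssfsfff"`: self-attention is concentrated at the bottom and feedforward sublayers at the top, while the total of four sublayers of each type matches `interleaved(4)`.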

Similar Papers

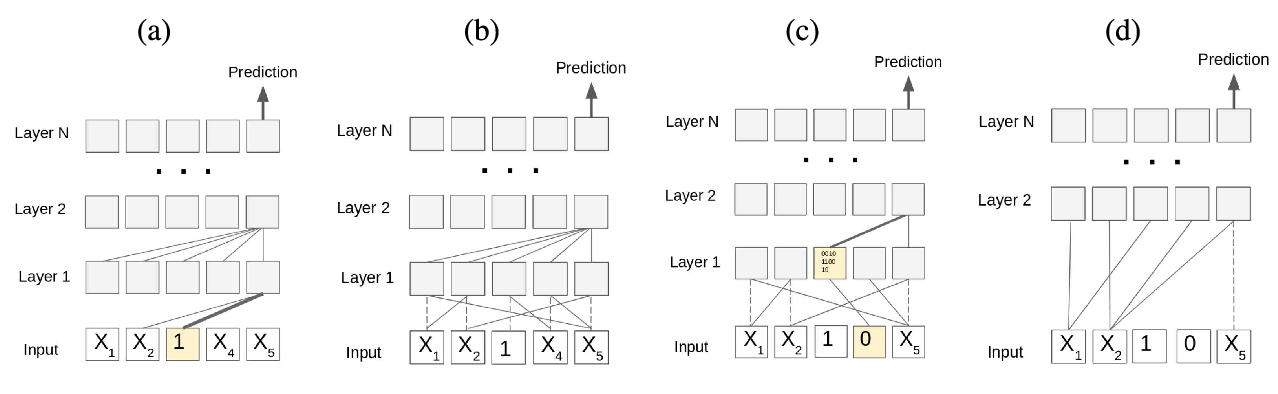

Character-Level Translation with Self-attention

Yingqiang Gao, Nikola I. Nikolov, Yuhuang Hu, Richard H.R. Hahnloser

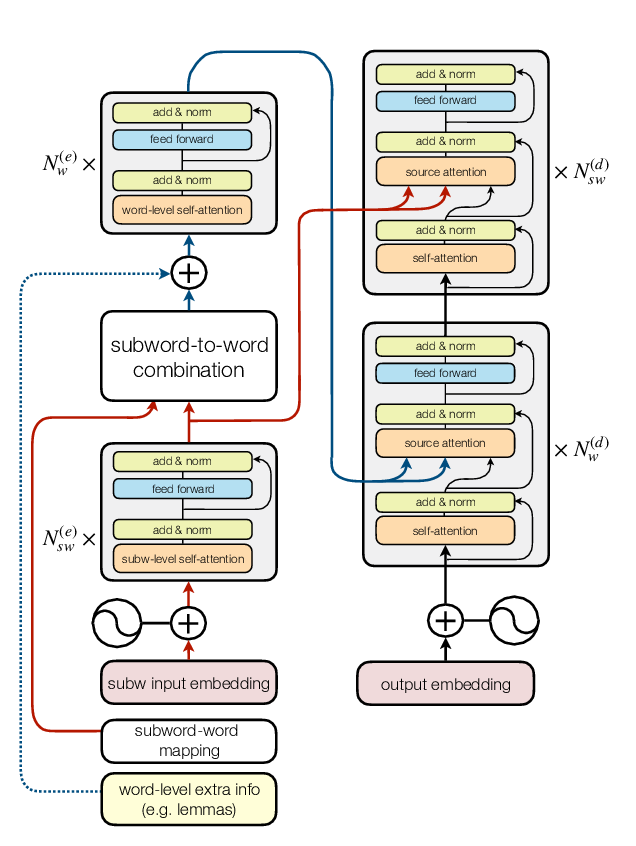

Combining Subword Representations into Word-level Representations in the Transformer Architecture

Noe Casas, Marta R. Costa-jussà, José A. R. Fonollosa

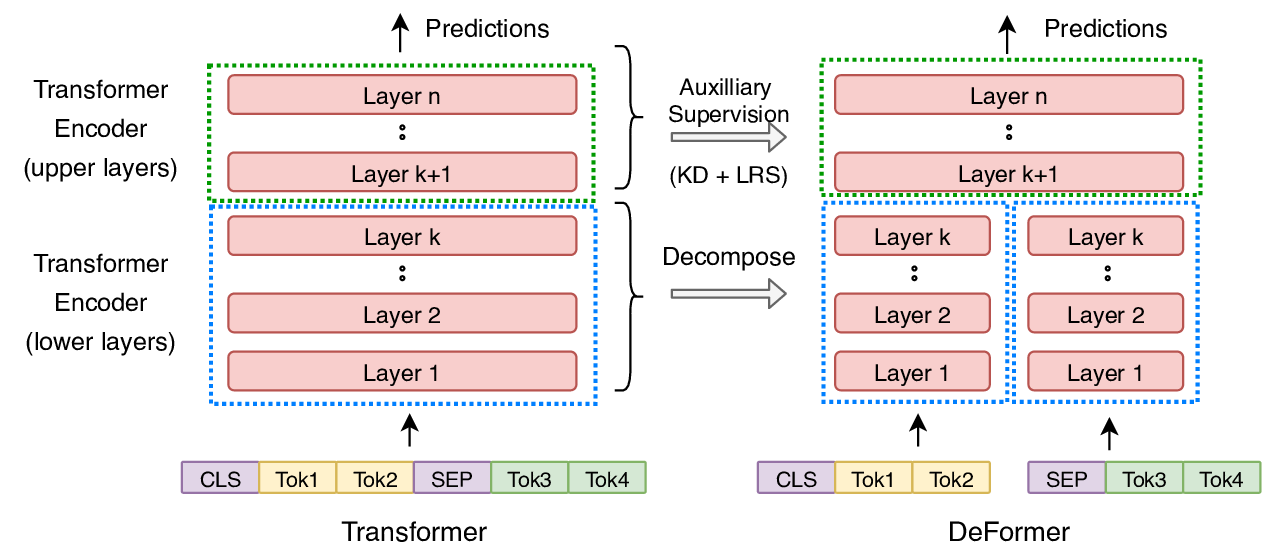

DeFormer: Decomposing Pre-trained Transformers for Faster Question Answering

Qingqing Cao, Harsh Trivedi, Aruna Balasubramanian, Niranjan Balasubramanian