Towards Holistic and Automatic Evaluation of Open-Domain Dialogue Generation

Bo Pang, Erik Nijkamp, Wenjuan Han, Linqi Zhou, Yixian Liu, Kewei Tu

Resources and Evaluation Long Paper

Session 6B: Jul 7 (06:00-07:00 GMT)

Session 10A: Jul 7 (20:00-21:00 GMT)

Abstract:

Open-domain dialogue generation has gained increasing attention in Natural Language Processing. Evaluating it requires a holistic approach. Human ratings are deemed the gold standard, but because human evaluation is inefficient and costly, an automated substitute is highly desirable. In this paper, we propose holistic evaluation metrics that capture different aspects of open-domain dialogues. Our metrics consist of (1) GPT-2-based context coherence between sentences in a dialogue, (2) GPT-2-based fluency in phrasing, (3) n-gram-based diversity in responses to augmented queries, and (4) textual-entailment-inference-based logical self-consistency. The empirical validity of our metrics is demonstrated by strong correlations with human judgments. We open-source the code and relevant materials.
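To make the n-gram-based diversity idea in item (3) concrete, here is a minimal Python sketch. It uses distinct-n (unique n-grams divided by total n-grams), a common diversity measure, and is an assumption for illustration: the abstract does not specify the authors' exact formulation, and the function name `distinct_n` and the whitespace tokenization are hypothetical choices, not taken from the released code.

```python
from collections import Counter
from typing import List


def distinct_n(responses: List[str], n: int = 2) -> float:
    """Ratio of unique n-grams to total n-grams across all responses.

    Illustrative stand-in for an n-gram diversity score; not necessarily
    the formulation used in the paper.
    """
    counts: Counter = Counter()
    for response in responses:
        # Naive lowercase whitespace tokenization (an assumption).
        tokens = response.lower().split()
        for i in range(len(tokens) - n + 1):
            counts[tuple(tokens[i:i + n])] += 1
    total = sum(counts.values())
    # With no n-grams at all, report zero diversity instead of dividing by zero.
    return len(counts) / total if total else 0.0
```

Under this sketch, a model that returns the same response to every paraphrase of a query scores low, while a model whose responses share no n-grams scores 1.0.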

Similar Papers

Designing Precise and Robust Dialogue Response Evaluators

Tianyu Zhao, Divesh Lala, Tatsuya Kawahara

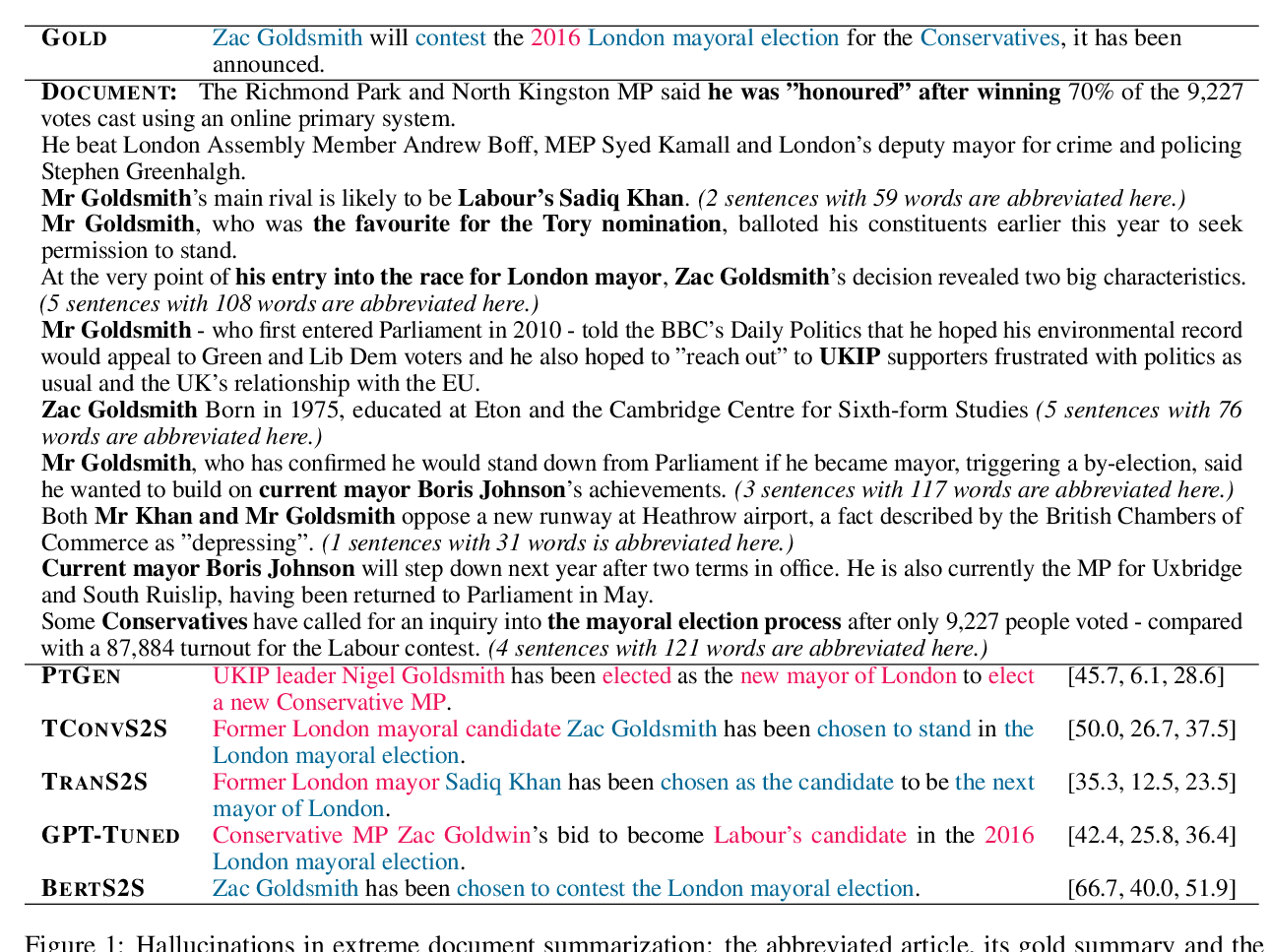

On Faithfulness and Factuality in Abstractive Summarization

Joshua Maynez, Shashi Narayan, Bernd Bohnet, Ryan McDonald

Logical Natural Language Generation from Open-Domain Tables

Wenhu Chen, Jianshu Chen, Yu Su, Zhiyu Chen, William Yang Wang