Autoencoding Pixies: Amortised Variational Inference with Graph Convolutions for Functional Distributional Semantics

Guy Emerson

Semantics: Lexical (Long Paper)

Session 7A: Jul 7 (08:00-09:00 GMT)

Session 8A: Jul 7 (12:00-13:00 GMT)

Abstract:

Functional Distributional Semantics provides a linguistically interpretable framework for distributional semantics, by representing the meaning of a word as a function (a binary classifier), instead of a vector. However, the large number of latent variables means that inference is computationally expensive, and training a model is therefore slow to converge. In this paper, I introduce the Pixie Autoencoder, which augments the generative model of Functional Distributional Semantics with a graph-convolutional neural network to perform amortised variational inference. This allows the model to be trained more effectively, achieving better results on two tasks (semantic similarity in context and semantic composition), and outperforming BERT, a large pre-trained language model.
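
The two ideas in the abstract can be illustrated with a toy sketch. It is not the paper's implementation: it assumes a "pixie" is a real-valued latent entity vector, models a word as a logistic classifier over pixies, and uses a single simplified graph-convolution layer as the amortised encoder that predicts pixies directly from a dependency graph. All names (`make_word_function`, `encode_pixies`), the edge labels, and the toy parameters are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_word_function(weights, bias):
    """Return a word meaning as a binary classifier over pixies.

    Assumption: a logistic classifier; the paper's exact form may differ.
    """
    def truth_probability(pixie):
        score = sum(w * x for w, x in zip(weights, pixie)) + bias
        return sigmoid(score)
    return truth_probability


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def encode_pixies(embeddings, edges, self_weight, edge_weights):
    """One simplified graph-convolution layer used as an amortised encoder.

    embeddings:   node -> input vector for the word at that node
    edges:        (source, label, target) triples; messages flow source -> target
    self_weight:  matrix applied to each node's own embedding
    edge_weights: label -> matrix applied to messages along that edge type

    Returns node -> predicted pixie vector. Predicting the latent vectors in
    one forward pass, instead of optimising them per sentence, is what makes
    the inference "amortised".
    """
    sums = {node: matvec(self_weight, vec) for node, vec in embeddings.items()}
    for source, label, target in edges:
        message = matvec(edge_weights[label], embeddings[source])
        sums[target] = [a + b for a, b in zip(sums[target], message)]
    return {node: [math.tanh(x) for x in vec] for node, vec in sums.items()}


# Toy graph for "dog chases": one labelled edge in each direction (hypothetical labels).
identity = [[1.0, 0.0], [0.0, 1.0]]
embeddings = {"dog": [1.0, 0.0], "chase": [0.0, 1.0]}
edges = [("dog", "ARG1", "chase"), ("chase", "ARG1_inv", "dog")]
edge_weights = {"ARG1": identity, "ARG1_inv": identity}

pixies = encode_pixies(embeddings, edges, identity, edge_weights)

# Apply a word function to the pixie inferred for the "dog" node.
dog = make_word_function([2.0, -1.0], -0.5)
p_dog = dog(pixies["dog"])
print(p_dog)
```

In a trained model the weight matrices and classifier parameters would be learned jointly; here they are fixed by hand only to keep the example runnable.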

Similar Papers

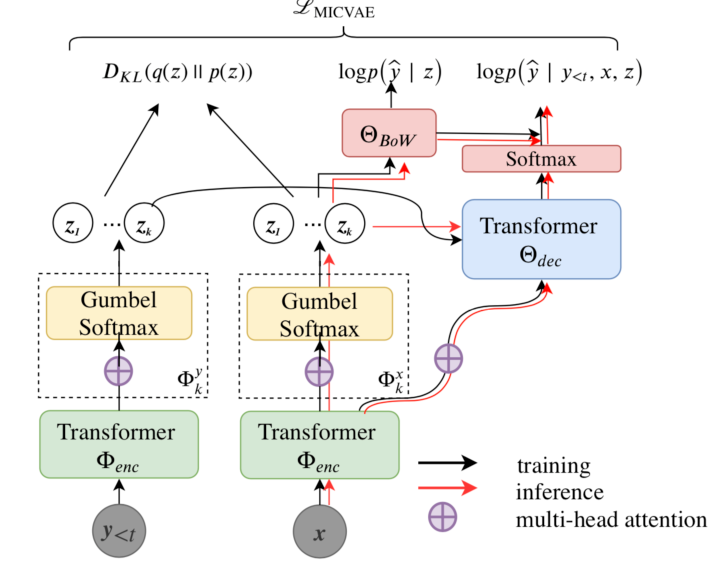

Variational Neural Machine Translation with Normalizing Flows

Hendra Setiawan, Matthias Sperber, Udhyakumar Nallasamy, Matthias Paulik,

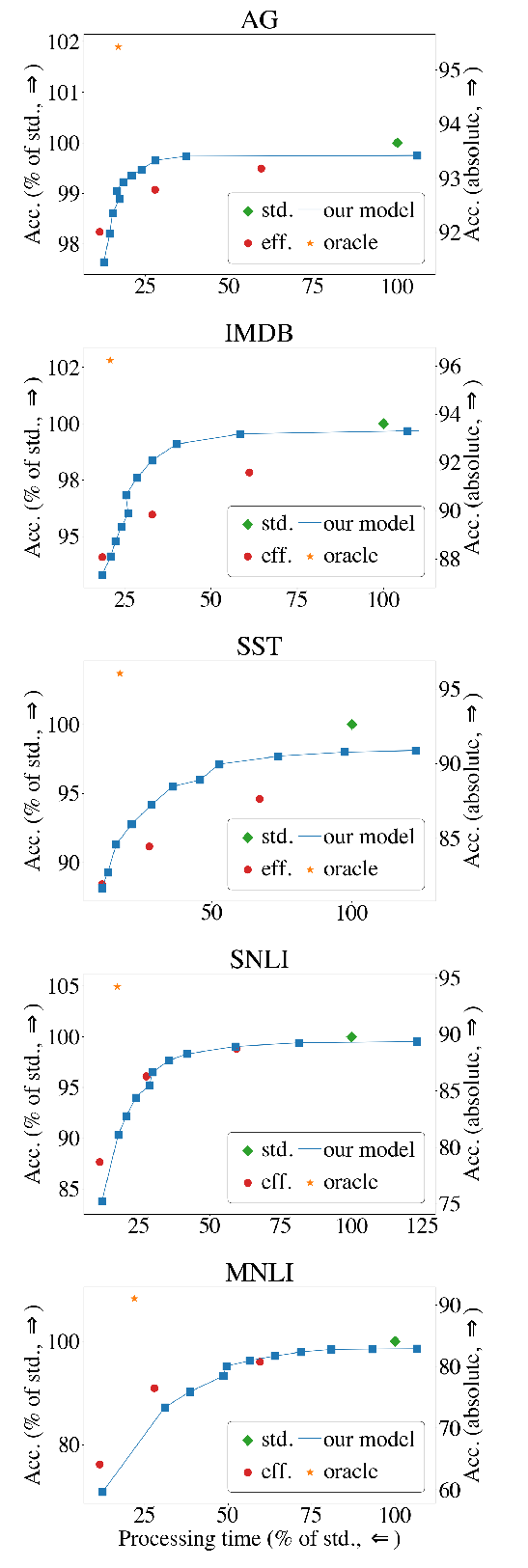

The Right Tool for the Job: Matching Model and Instance Complexities

Roy Schwartz, Gabriel Stanovsky, Swabha Swayamdipta, Jesse Dodge, Noah A. Smith,

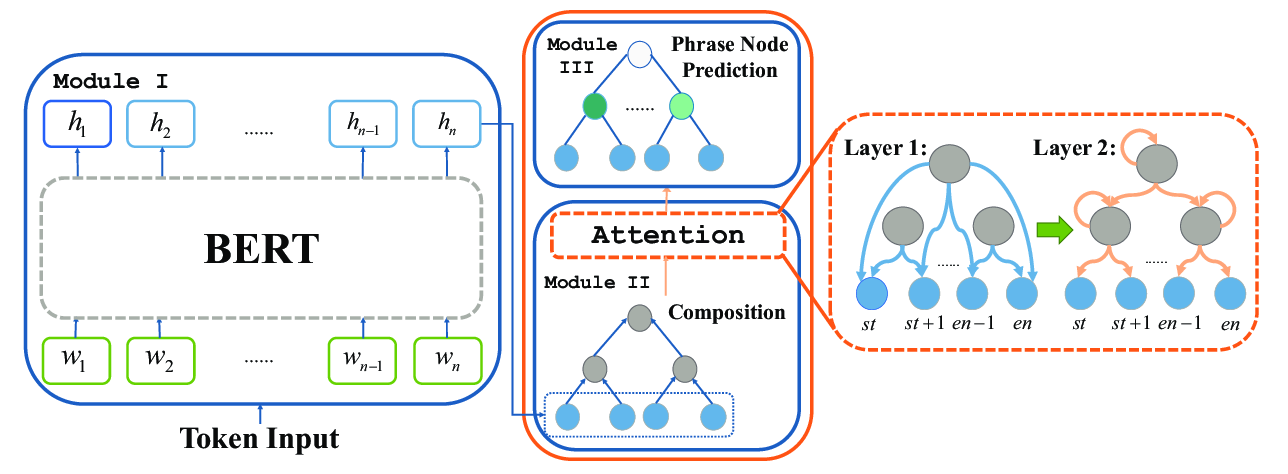

SentiBERT: A Transferable Transformer-Based Architecture for Compositional Sentiment Semantics

Da Yin, Tao Meng, Kai-Wei Chang,

Addressing Posterior Collapse with Mutual Information for Improved Variational Neural Machine Translation

Arya D. McCarthy, Xian Li, Jiatao Gu, Ning Dong,