AMR Parsing with Latent Structural Information

Qiji Zhou, Yue Zhang, Donghong Ji, Hao Tang

Semantics: Sentence Level Long Paper

Session 7B: Jul 7 (09:00-10:00 GMT)

Session 8A: Jul 7 (12:00-13:00 GMT)

Abstract:

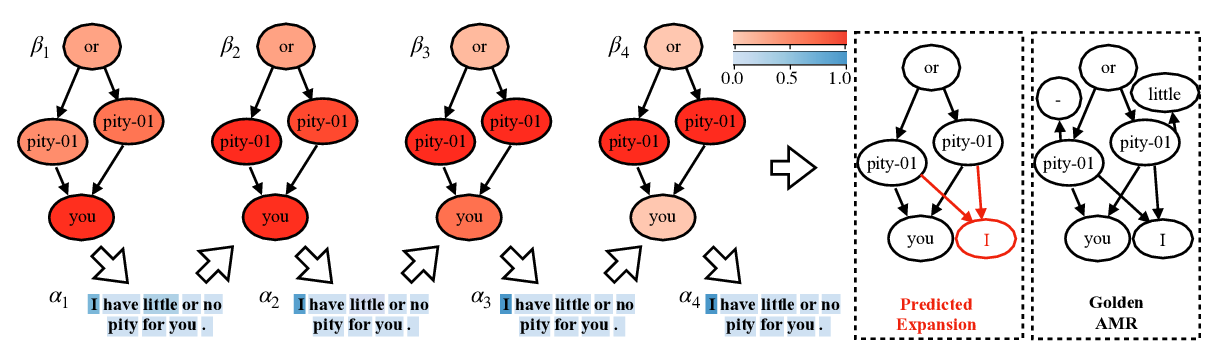

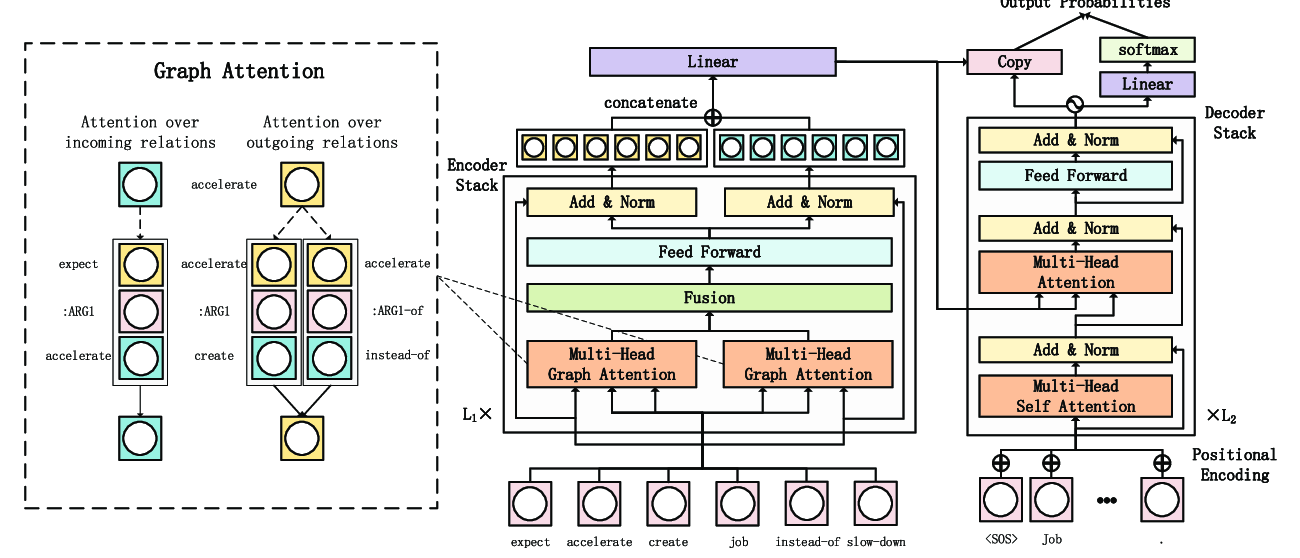

Abstract Meaning Representations (AMRs) capture sentence-level semantics as structural representations of broad-coverage natural sentences. We investigate AMR parsing with explicit dependency structures and interpretable latent structures. We generate the latent soft structure without additional annotations, and fuse the dependency and latent structures via an extended graph neural network. The fused structural information helps our model achieve the best reported results on both AMR 2.0 (77.5% Smatch F1 on LDC2017T10) and AMR 1.0 (71.8% Smatch F1 on LDC2014T12).
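
As a rough illustration of the metric reported above: Smatch F1 is the harmonic mean of precision and recall over the triples shared by a predicted AMR graph and a gold AMR graph. The sketch below assumes the number of matched triples is already known (the actual Smatch tool obtains it by searching over variable alignments, which is not shown here). The function name `smatch_f1` and the counts are hypothetical and are not taken from the paper.

```python
def smatch_f1(matched: int, predicted: int, gold: int) -> float:
    """F1 over triples, given an already-computed best alignment.

    matched:   triples shared by the predicted and gold graphs
    predicted: total triples in the predicted graph
    gold:      total triples in the gold graph
    """
    if predicted == 0 or gold == 0:
        return 0.0
    precision = matched / predicted
    recall = matched / gold
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts, for illustration only (not results from the paper).
score = smatch_f1(matched=8, predicted=10, gold=12)
print(f"{score:.3f}")  # prints 0.727
```

With these made-up counts, precision is 0.8 and recall is about 0.667, so the score falls between the two, closer to the lower value.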



Similar Papers

GPT-too: A Language-Model-First Approach for AMR-to-Text Generation

Manuel Mager, Ramón Fernandez Astudillo, Tahira Naseem, Md Arafat Sultan, Young-Suk Lee, Radu Florian, Salim Roukos

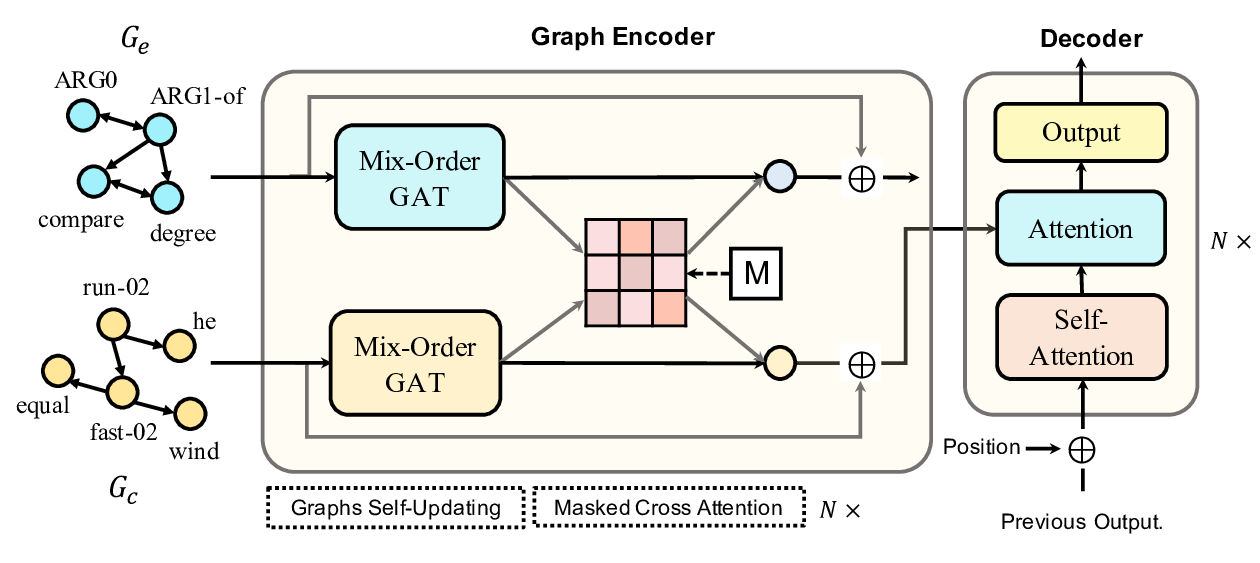

Line Graph Enhanced AMR-to-Text Generation with Mix-Order Graph Attention Networks

Yanbin Zhao, Lu Chen, Zhi Chen, Ruisheng Cao, Su Zhu, Kai Yu