Template-Based Question Generation from Retrieved Sentences for Improved Unsupervised Question Answering

Alexander Fabbri, Patrick Ng, Zhiguo Wang, Ramesh Nallapati, Bing Xiang

Question Answering Short Paper

Session 8A: Jul 7 (12:00-13:00 GMT)

Session 9B: Jul 7 (18:00-19:00 GMT)

Abstract:

Question Answering (QA) is in increasing demand as the amount of information available online and the desire for quick access to this content grow. A common approach to QA has been to fine-tune a pretrained language model on a task-specific labeled dataset. This paradigm, however, relies on large-scale human-labeled data, which is scarce and costly to obtain. We propose an unsupervised approach to training QA models with generated pseudo-training data. We show that generating questions for QA training by applying a simple template to a related, retrieved sentence, rather than to the original context sentence, improves downstream QA performance by allowing the model to learn more complex context-question relationships. Training a QA model on this data yields a relative improvement in F1 score on the SQuAD dataset of about 14% over a previous unsupervised model, and 20% when the answer is a named entity, achieving state-of-the-art performance on SQuAD for unsupervised QA.
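To make the idea in the abstract concrete, the following is a minimal Python sketch of building one pseudo-training example. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not specify the retrieval method or the template, so the word-overlap retrieval, the "wh-word + fragment after the answer + fragment before the answer" template, the `WH_WORDS` mapping, and all function names here are hypothetical.

```python
import re

# Hypothetical mapping from a coarse answer type to a question word.
WH_WORDS = {"PERSON": "Who", "DATE": "When", "PLACE": "Where"}


def tokenize(text):
    """Lowercased word tokens; a deliberately simple stand-in for a real tokenizer."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve_sentence(context_sentence, answer, corpus):
    """Return the corpus sentence that mentions the answer and shares the most
    words with the context sentence, skipping the context sentence itself.
    (Assumed word-overlap retrieval; the abstract does not name a retriever.)"""
    context_tokens = tokenize(context_sentence) - tokenize(answer)
    best, best_overlap = None, 0
    for candidate in corpus:
        if candidate == context_sentence or answer not in candidate:
            continue
        overlap = len(context_tokens & tokenize(candidate))
        if overlap > best_overlap:
            best, best_overlap = candidate, overlap
    return best


def apply_template(sentence, answer, answer_type):
    """Split the sentence around the answer into fragments A (before) and
    B (after) and emit '<wh-word> <B> <A>?' (an assumed template)."""
    before, _, after = sentence.partition(answer)
    fragment_a = before.strip(" ,.")
    fragment_b = after.strip(" ,.")
    wh_word = WH_WORDS.get(answer_type, "What")
    body = " ".join(part for part in (fragment_b, fragment_a) if part)
    return f"{wh_word} {body}?"


def make_training_example(context_sentence, answer, answer_type, corpus):
    """Pair the ORIGINAL context with a question built from a RETRIEVED sentence."""
    retrieved = retrieve_sentence(context_sentence, answer, corpus)
    if retrieved is None:
        return None  # no related sentence mentions the answer
    return {
        "context": context_sentence,
        "question": apply_template(retrieved, answer, answer_type),
        "answer": answer,
    }
```

Because the question is built from a different sentence than the context, its wording no longer mirrors the context word for word, which is the property the abstract credits for the more complex context-question relationships the model learns.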

Similar Papers

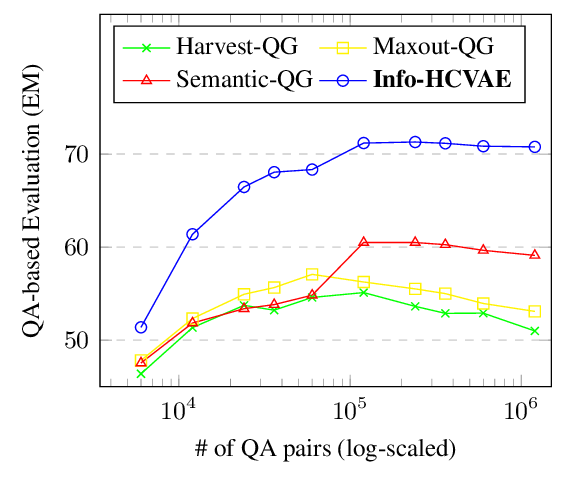

Generating Diverse and Consistent QA pairs from Contexts with Information-Maximizing Hierarchical Conditional VAEs

Dong Bok Lee, Seanie Lee, Woo Tae Jeong, Donghwan Kim, Sung Ju Hwang

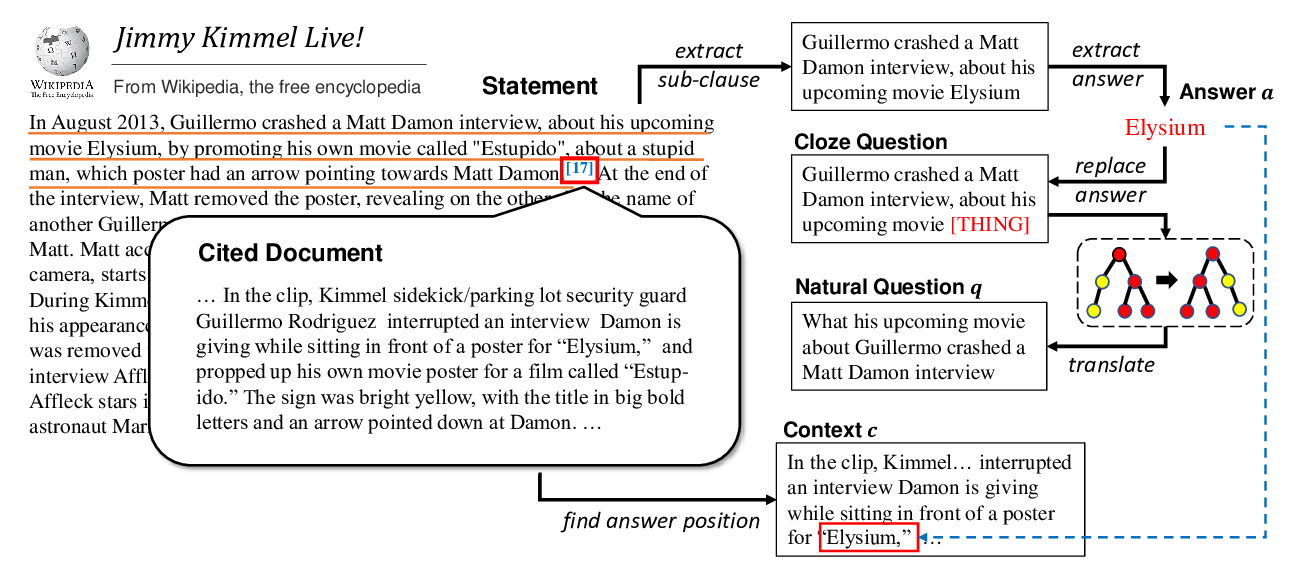

Harvesting and Refining Question-Answer Pairs for Unsupervised QA

Zhongli Li, Wenhui Wang, Li Dong, Furu Wei, Ke Xu

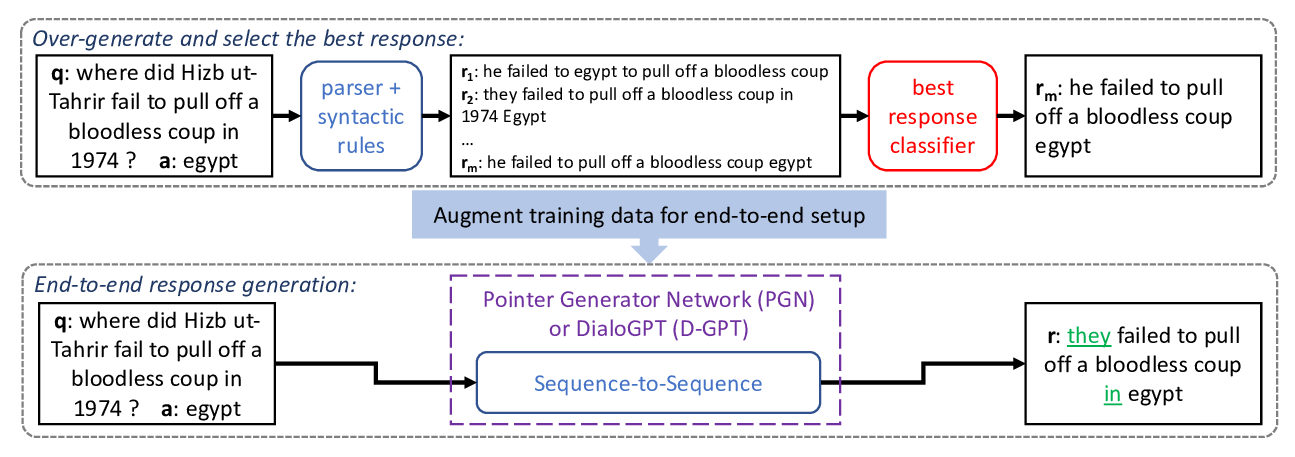

Fluent Response Generation for Conversational Question Answering

Ashutosh Baheti, Alan Ritter, Kevin Small

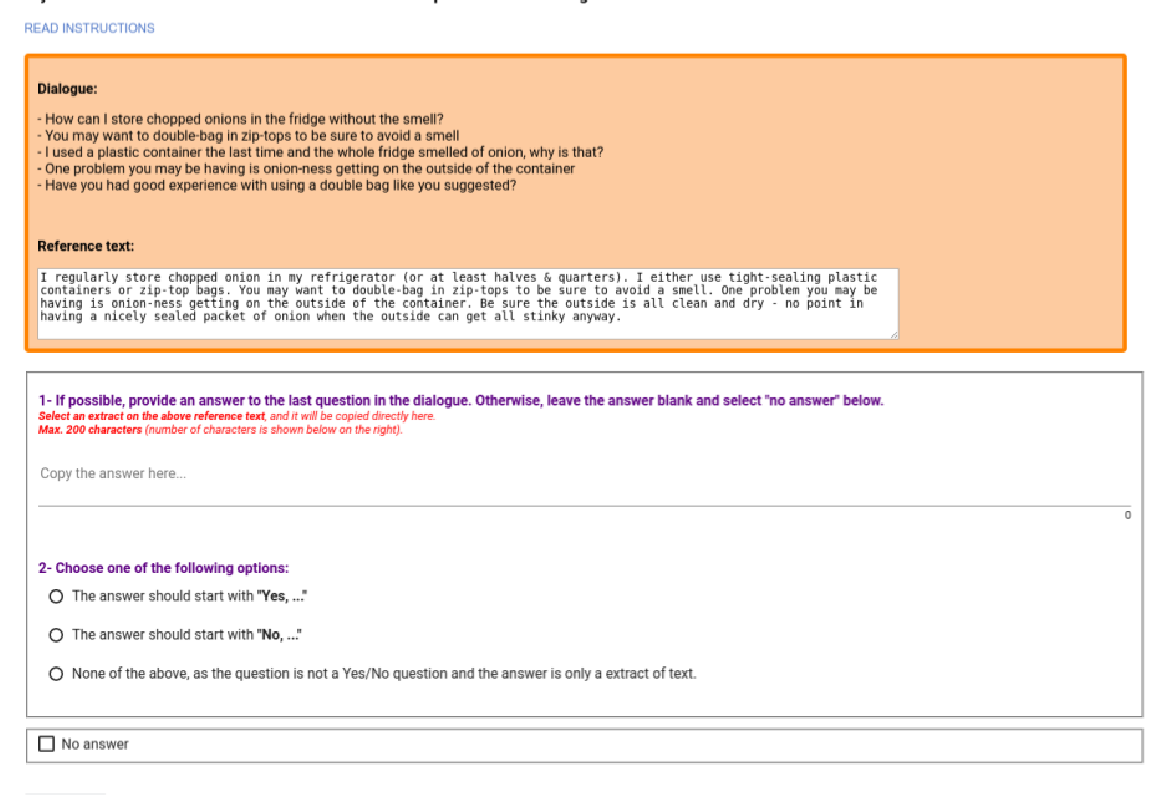

DoQA - Accessing Domain-Specific FAQs via Conversational QA

Jon Ander Campos, Arantxa Otegi, Aitor Soroa, Jan Deriu, Mark Cieliebak, Eneko Agirre