Dscorer: A Fast Evaluation Metric for Discourse Representation Structure Parsing

Jiangming Liu, Shay B. Cohen, Mirella Lapata

Resources and Evaluation Short Paper

Session 8A: Jul 7 (12:00-13:00 GMT)

Session 10B: Jul 7 (21:00-22:00 GMT)

Abstract:

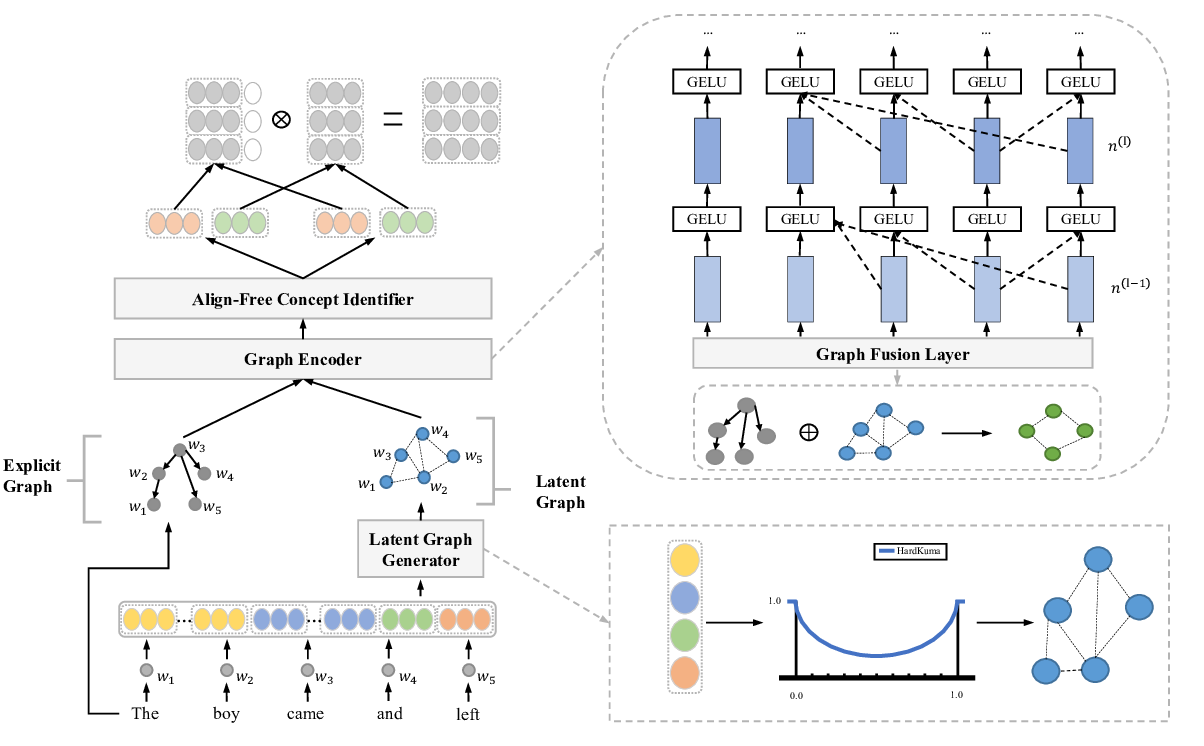

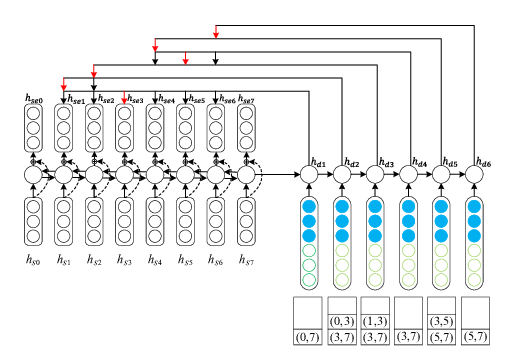

Discourse representation structures (DRSs) are scoped semantic representations for texts of arbitrary length. Evaluating the accuracy of predicted DRSs plays a key role in developing semantic parsers and improving their performance. DRSs are typically visualized as boxes, which are not straightforward to process automatically. The existing metric, Counter, transforms DRSs into clauses and measures clause overlap by searching for variable mappings between two DRSs. However, this metric is computationally costly (in both memory and CPU time) and does not scale to longer texts. We introduce Dscorer, an efficient new metric which converts box-style DRSs to graphs and then measures the overlap of n-grams. Experiments show that Dscorer computes accuracy scores that are correlated with Counter's in a fraction of the time.
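
To make the idea of measuring n-gram overlap between graphs concrete, here is a minimal Python sketch. It is not the authors' implementation: the graph encoding (a dict of node labels plus a dict of directed edges), the definition of an n-gram as a sequence of node labels along a directed path, and the names `path_ngrams` and `ngram_f1` are all assumptions made for illustration. The abstract does not specify how DRS boxes are converted to graphs, so that step is left out.

```python
import collections  # collections.Counter is a multiset; unrelated to the Counter metric


def path_ngrams(labels, edges, n):
    """Count label sequences along directed paths of exactly n nodes.

    labels: dict mapping node id -> label (hypothetical encoding)
    edges:  dict mapping node id -> list of successor node ids
    """
    grams = collections.Counter()

    def walk(node, path):
        path = path + (labels[node],)
        if len(path) == n:
            grams[path] += 1
            return
        for nxt in edges.get(node, ()):
            walk(nxt, path)

    for node in labels:
        walk(node, ())
    return grams


def ngram_f1(pred, gold, max_n=2):
    """F1 over pooled 1..max_n-gram counts; pred and gold are (labels, edges) pairs."""
    matched = pred_total = gold_total = 0
    for n in range(1, max_n + 1):
        p = path_ngrams(pred[0], pred[1], n)
        g = path_ngrams(gold[0], gold[1], n)
        matched += sum((p & g).values())  # multiset intersection
        pred_total += sum(p.values())
        gold_total += sum(g.values())
    if matched == 0:
        return 0.0
    precision = matched / pred_total
    recall = matched / gold_total
    return 2 * precision * recall / (precision + recall)


# Toy graphs: a box node pointing at two condition nodes.
gold = ({0: "box", 1: "person", 2: "sleep"}, {0: [1, 2]})
pred = ({0: "box", 1: "person", 2: "run"}, {0: [1, 2]})
score = ngram_f1(pred, gold)
```

In this toy case, two of three unigrams and one of two bigrams match, so precision and recall are both 3/5. Because n-grams are compared by label alone, no search over variable mappings is needed, which is the intuition behind the speed advantage the abstract reports.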

You can open the pre-recorded video in a separate window.

NOTE: The SlidesLive video may display a random order of the authors. The correct author list is shown at the top of this webpage.

Similar Papers

A Top-down Neural Architecture towards Text-level Parsing of Discourse Rhetorical Structure

Longyin Zhang, Yuqing Xing, Fang Kong, Peifeng Li, Guodong Zhou

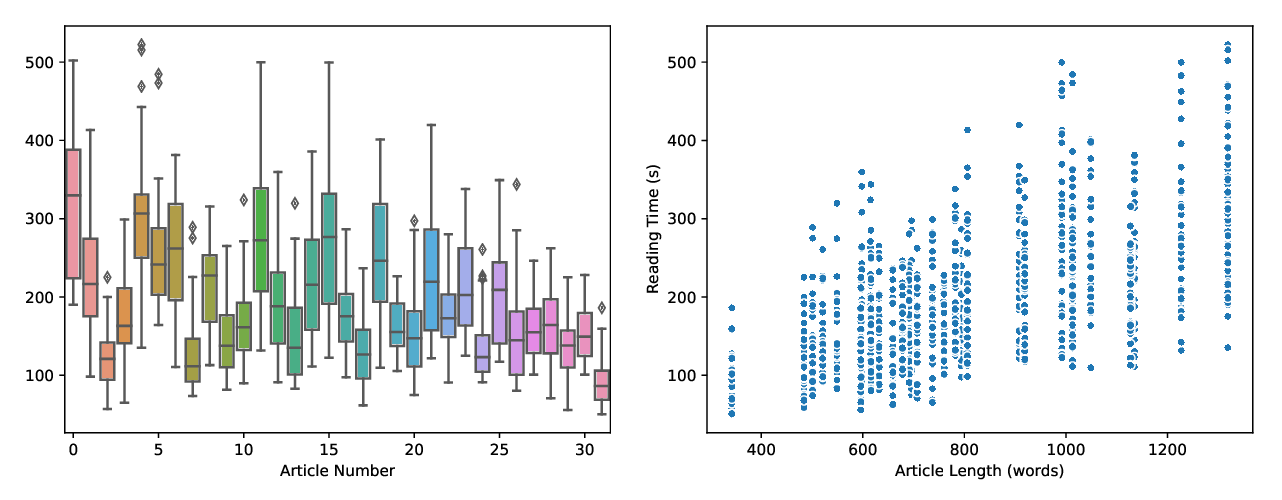

You Don't Have Time to Read This: An Exploration of Document Reading Time Prediction

Orion Weller, Jordan Hildebrandt, Ilya Reznik, Christopher Challis, E. Shannon Tass, Quinn Snell, Kevin Seppi

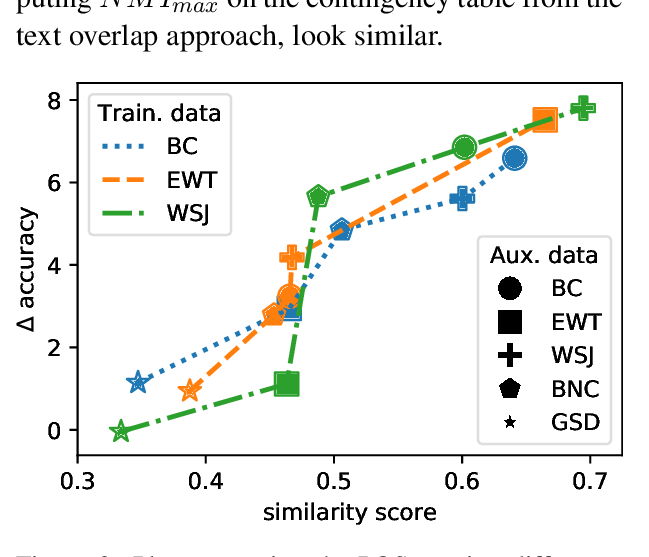

Estimating the influence of auxiliary tasks for multi-task learning of sequence tagging tasks

Fynn Schröder, Chris Biemann