Learning Constraints for Structured Prediction Using Rectifier Networks

Xingyuan Pan, Maitrey Mehta, Vivek Srikumar

Machine Learning for NLP Long Paper

Session 9A: Jul 7 (17:00-18:00 GMT)

Session 10B: Jul 7 (21:00-22:00 GMT)

Abstract:

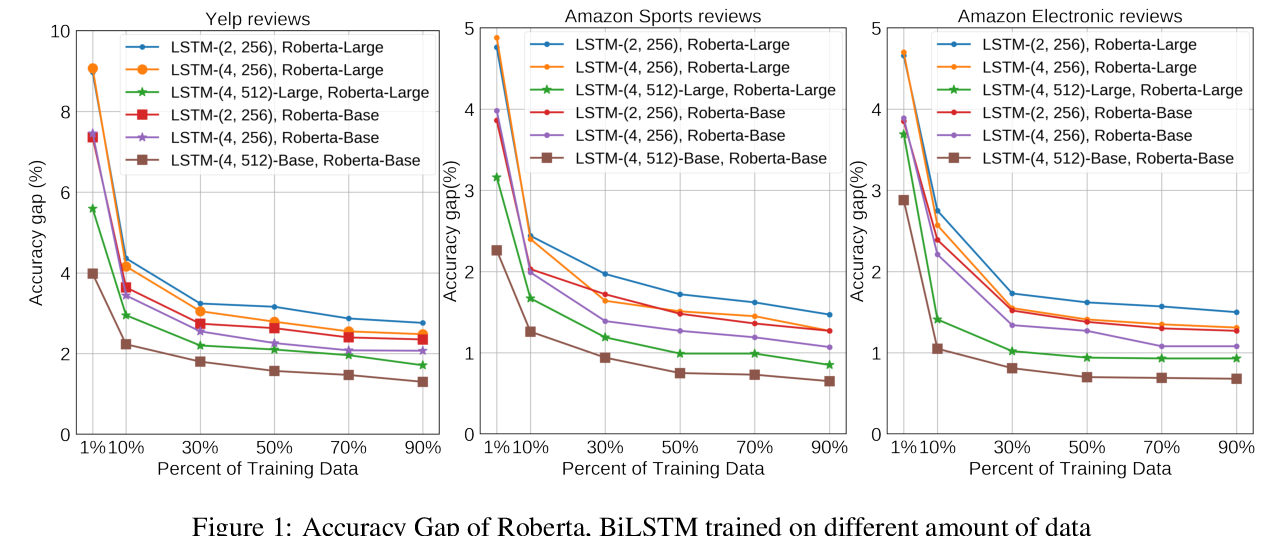

Various natural language processing tasks are structured prediction problems where outputs are constructed with multiple interdependent decisions. Past work has shown that domain knowledge, framed as constraints over the output space, can help improve predictive accuracy. However, designing good constraints often relies on domain expertise. In this paper, we study the problem of learning such constraints. We frame the problem as that of training a two-layer rectifier network to identify valid structures or substructures, and show a construction for converting a trained network into a system of linear constraints over the inference variables. Our experiments on several NLP tasks show that the learned constraints can improve the prediction accuracy, especially when the number of training examples is small.
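
To make the network-to-constraints idea concrete, here is a minimal sketch rather than the authors' exact construction. It assumes a network of the form score(x) = 1 - sum_k ReLU(w_k . x + b_k) and treats a structure as valid only when no hidden unit fires, which holds exactly when every inequality w_k . x + b_k <= 0 is satisfied. The function names and the hand-picked parameters below are hypothetical and are not learned from data.

```python
from itertools import product


def preactivations(weights, biases, x):
    """Return w_k . x + b_k for each hidden unit k."""
    return [sum(wi * xi for wi, xi in zip(w, x)) + b
            for w, b in zip(weights, biases)]


def network_score(weights, biases, x):
    """Assumed network form: 1 - sum_k ReLU(w_k . x + b_k)."""
    return 1.0 - sum(max(0.0, p) for p in preactivations(weights, biases, x))


def satisfies_constraints(weights, biases, x):
    """One linear inequality per hidden unit: w_k . x + b_k <= 0."""
    return all(p <= 0 for p in preactivations(weights, biases, x))


# Illustrative, hand-picked parameters over three binary inference variables.
# Unit 0 encodes x0 + x1 <= 1; unit 1 encodes x2 <= x0.
WEIGHTS = [[1.0, 1.0, 0.0], [-1.0, 0.0, 1.0]]
BIASES = [-1.0, 0.0]


def valid_structures(weights, biases, n):
    """Enumerate binary assignments that satisfy every extracted inequality."""
    return [x for x in product([0, 1], repeat=n)
            if satisfies_constraints(weights, biases, x)]


def network_agrees_with_constraints(weights, biases, n):
    """Check that a positive score coincides with the linear system on {0,1}^n."""
    return all(
        (network_score(weights, biases, x) > 0)
        == satisfies_constraints(weights, biases, x)
        for x in product([0, 1], repeat=n)
    )
```

With these integer-valued parameters and binary inputs, each hidden unit contributes either 0 or 1, so a positive score and "no unit fires" coincide. For real-valued learned weights that agreement is not guaranteed by this sketch and would need the conditions established in the paper.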

Similar Papers

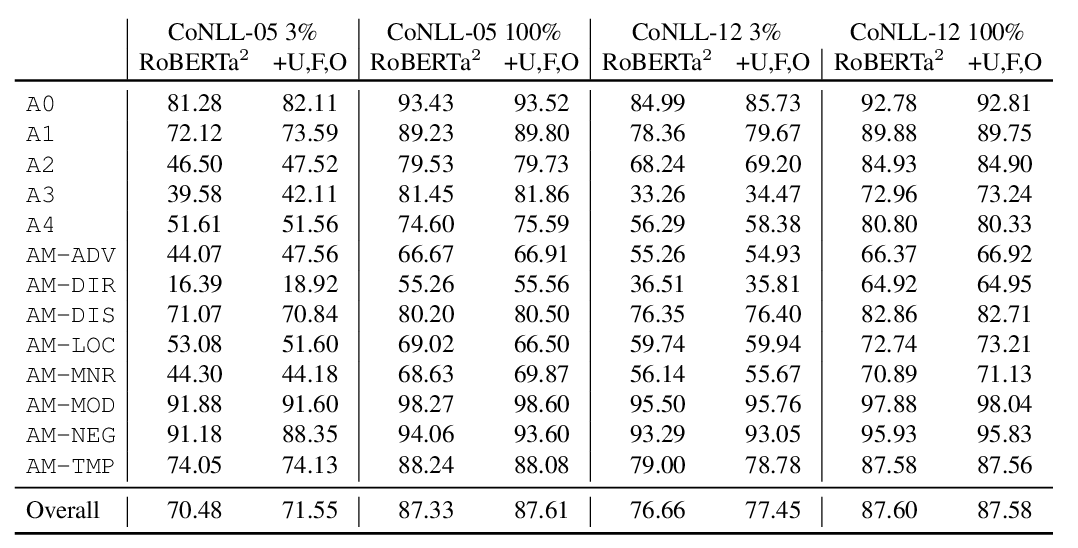

Structured Tuning for Semantic Role Labeling

Tao Li, Parth Anand Jawale, Martha Palmer, Vivek Srikumar

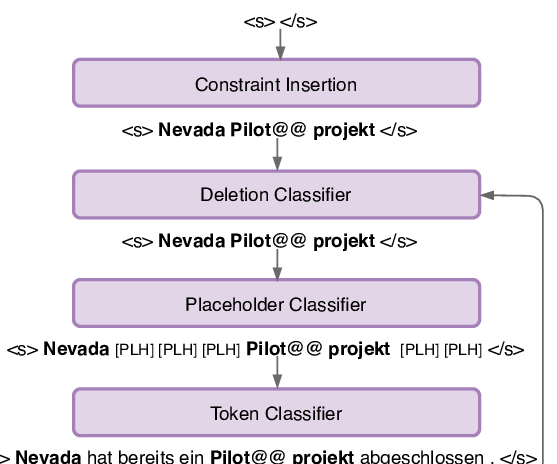

Lexically Constrained Neural Machine Translation with Levenshtein Transformer

Raymond Hendy Susanto, Shamil Chollampatt, Liling Tan

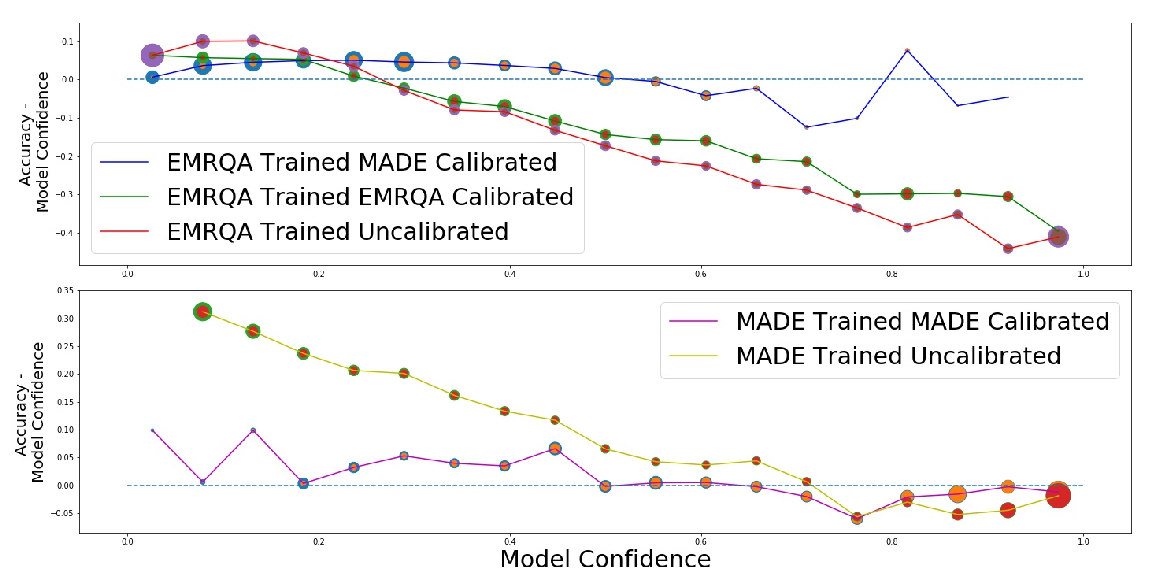

Calibrating Structured Output Predictors for Natural Language Processing

Abhyuday Jagannatha, Hong Yu

To Pretrain or Not to Pretrain: Examining the Benefits of Pretraining on Resource Rich Tasks

Sinong Wang, Madian Khabsa, Hao Ma