Simplify the Usage of Lexicon in Chinese NER

Ruotian Ma, Minlong Peng, Qi Zhang, Zhongyu Wei, Xuanjing Huang

Information Extraction Long Paper

Session 11A: Jul 8 (05:00-06:00 GMT)

Session 12A: Jul 8 (08:00-09:00 GMT)

Abstract:

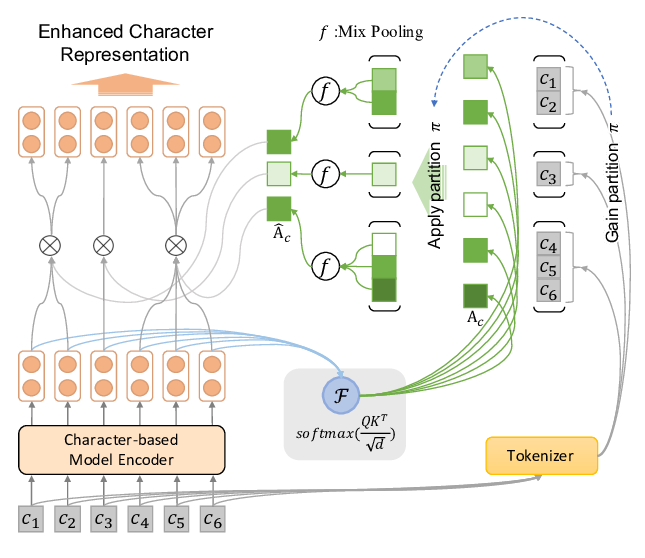

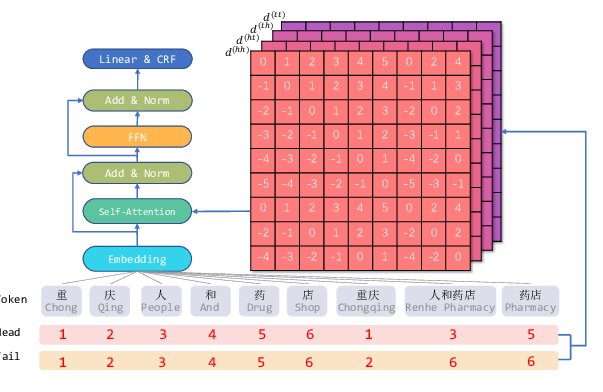

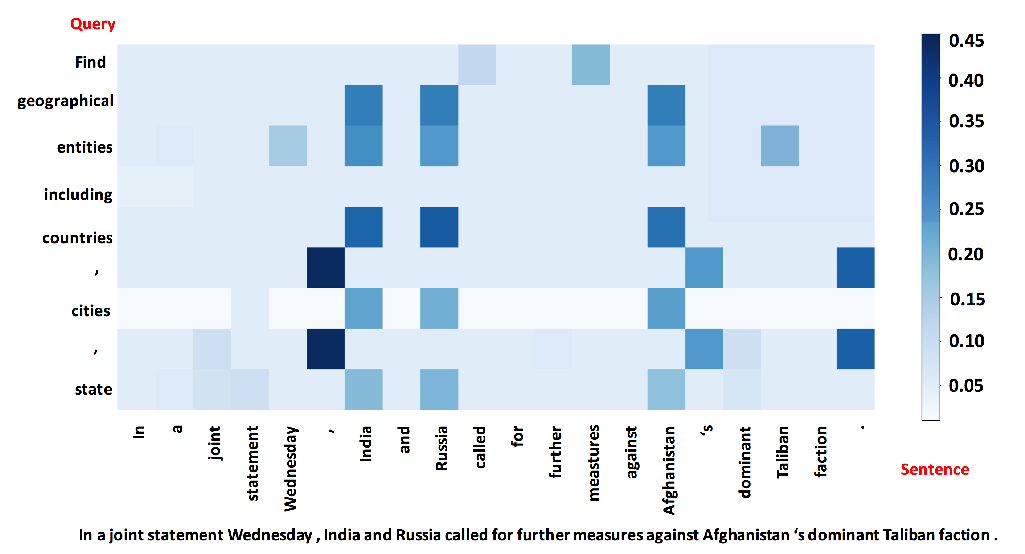

Recently, many works have tried to improve the performance of Chinese named entity recognition (NER) using word lexicons. As a representative, Lattice-LSTM has achieved new benchmark results on several public Chinese NER datasets. However, Lattice-LSTM has a complex model architecture, which limits its application in many industrial areas where real-time NER responses are needed. In this work, we propose a simple but effective method for incorporating the word lexicon into the character representations. This method avoids designing a complicated sequence modeling architecture, and for any neural NER model, it requires only a subtle adjustment of the character representation layer to introduce the lexicon information. Experimental studies on four benchmark Chinese NER datasets show that our method achieves an inference speed up to 6.15 times faster than that of state-of-the-art methods, along with better performance. The experimental results also show that the proposed method can be easily combined with pre-trained models like BERT.
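The abstract does not spell out how lexicon information reaches the character representation layer, so the following is only a minimal sketch of one plausible preprocessing step, not the authors' implementation. It assumes that, for each character, the lexicon words covering it are grouped by the character's position in the word (begin, middle, end, single). The function name `match_lexicon`, the `max_len` cutoff, and the toy lexicon are all hypothetical choices made for illustration.

```python
from collections import defaultdict


def match_lexicon(sentence, lexicon, max_len=4):
    """Group matched lexicon words per character by position tag.

    Assumed tags (illustrative, not taken from the abstract):
    B = character begins the word, M = character is inside the word,
    E = character ends the word, S = the character alone is a word.
    """
    sets = [defaultdict(set) for _ in sentence]
    n = len(sentence)
    for start in range(n):
        # Try every substring starting here, up to max_len characters.
        for end in range(start + 1, min(n, start + max_len) + 1):
            word = sentence[start:end]
            if word not in lexicon:
                continue
            if len(word) == 1:
                sets[start]["S"].add(word)
            else:
                sets[start]["B"].add(word)
                sets[end - 1]["E"].add(word)
                for mid in range(start + 1, end - 1):
                    sets[mid]["M"].add(word)
    return sets


# Toy lexicon and sentence, invented for this example.
lexicon = {"南京", "南京市", "市长", "长江", "大桥", "长江大桥", "江"}
sets = match_lexicon("南京市长江大桥", lexicon)
print(sorted(sets[0]["B"]))  # words that begin at the first character
print(sorted(sets[4]["M"]))  # words that have "江" in the middle
```

In a full model, each per-character group would presumably be turned into a fixed-size vector (for example by pooling word embeddings) and concatenated with the character embedding, so that the sequence modeling layer above it stays unchanged. That pooling step is likewise an assumption and is omitted here.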

Similar Papers

A Unified MRC Framework for Named Entity Recognition

Xiaoya Li, Jingrong Feng, Yuxian Meng, Qinghong Han, Fei Wu, Jiwei Li

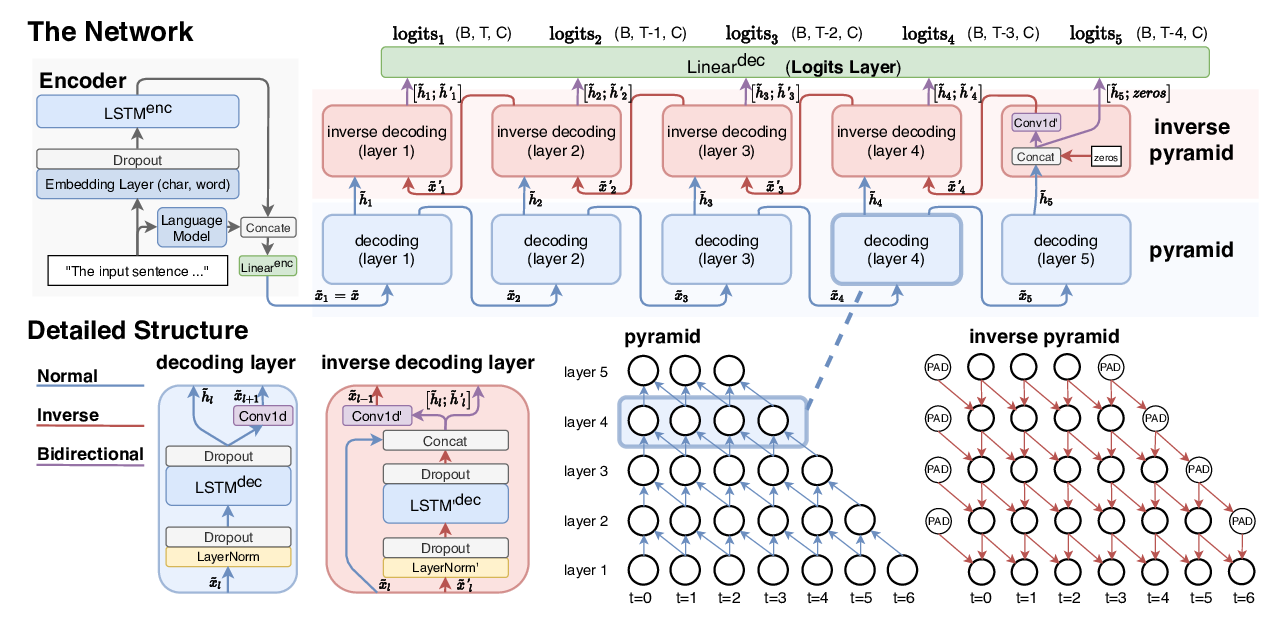

Pyramid: A Layered Model for Nested Named Entity Recognition

Jue Wang, Lidan Shou, Ke Chen, Gang Chen

Enhancing Pre-trained Chinese Character Representation with Word-aligned Attention

Yanzeng Li, Bowen Yu, Xue Mengge, Tingwen Liu