Leveraging Graph to Improve Abstractive Multi-Document Summarization

Wei Li, Xinyan Xiao, Jiachen Liu, Hua Wu, Haifeng Wang, Junping Du

Summarization Long Paper

Session 11A: Jul 8

(05:00-06:00 GMT)

Session 12A: Jul 8

(08:00-09:00 GMT)

Abstract:

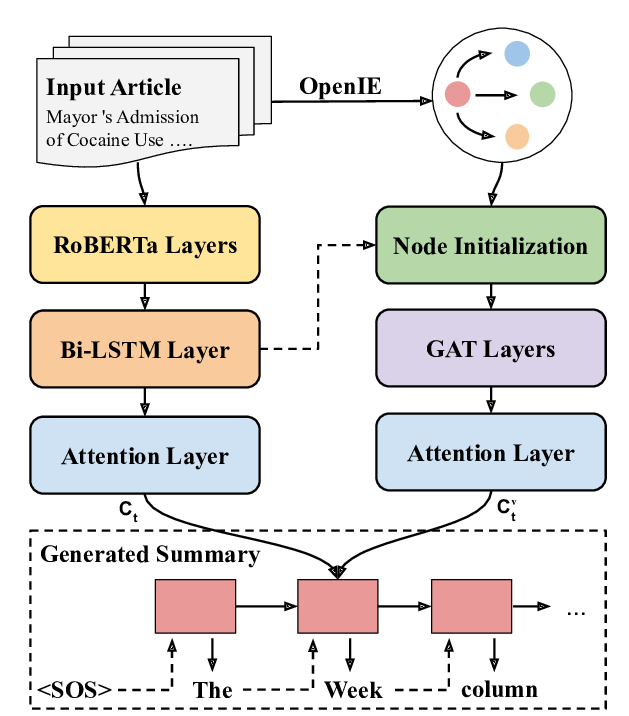

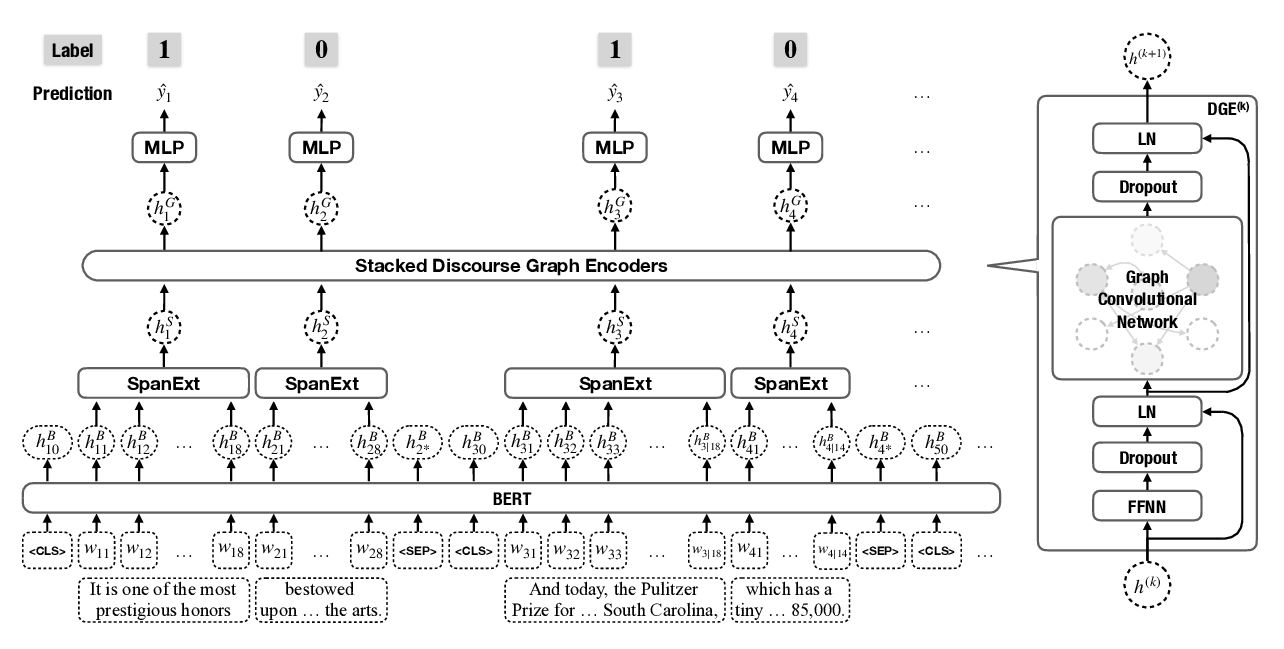

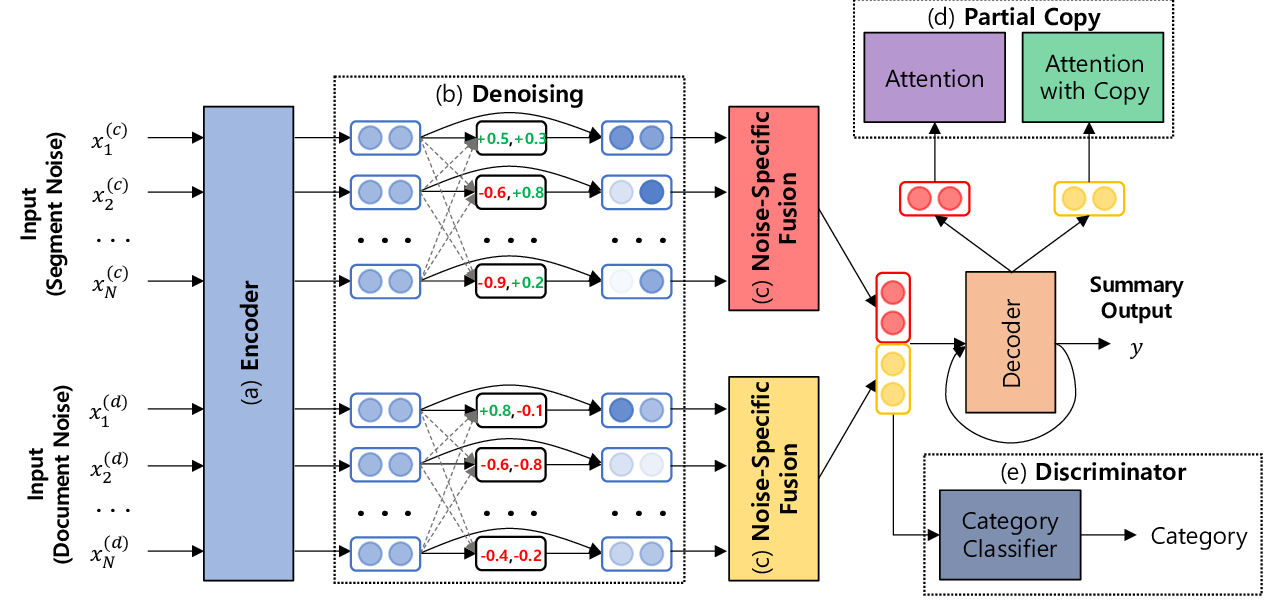

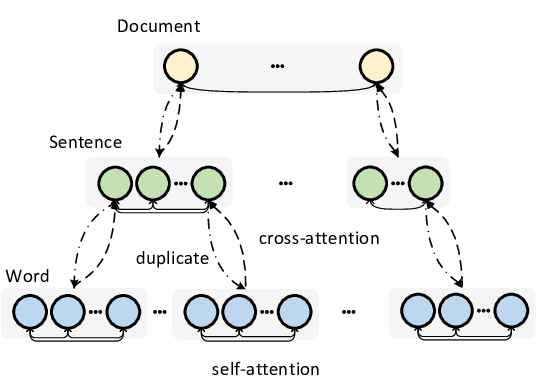

Graphs that capture relations between textual units have great benefits for detecting salient information from multiple documents and generating overall coherent summaries. In this paper, we develop a neural abstractive multi-document summarization (MDS) model which can leverage well-known graph representations of documents such as similarity graph and discourse graph, to more effectively process multiple input documents and produce abstractive summaries. Our model utilizes graphs to encode documents in order to capture cross-document relations, which is crucial to summarizing long documents. Our model can also take advantage of graphs to guide the summary generation process, which is beneficial for generating coherent and concise summaries. Furthermore, pre-trained language models can be easily combined with our model, which further improve the summarization performance significantly. Empirical results on the WikiSum and MultiNews dataset show that the proposed architecture brings substantial improvements over several strong baselines.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

Multi-Granularity Interaction Network for Extractive and Abstractive Multi-Document Summarization

Hanqi Jin, Tianming Wang, Xiaojun Wan,

Knowledge Graph-Augmented Abstractive Summarization with Semantic-Driven Cloze Reward

Luyang Huang, Lingfei Wu, Lu Wang,