Dynamic Fusion Network for Multi-Domain End-to-end Task-Oriented Dialog

Libo Qin, Xiao Xu, Wanxiang Che, Yue Zhang, Ting Liu

Dialogue and Interactive Systems Long Paper

Session 11B: Jul 8 (06:00-07:00 GMT)

Session 13B: Jul 8 (13:00-14:00 GMT)

Abstract:

Recent studies have shown remarkable success in end-to-end task-oriented dialog systems. However, most neural models rely on large amounts of training data, which are available only for a limited number of task domains, such as navigation and scheduling. This makes it difficult to scale to a new domain with limited labeled data. Moreover, there has been relatively little research on how to effectively use data from all domains to improve performance on each domain and on unseen domains. To this end, we investigate methods that make explicit use of domain knowledge and introduce a shared-private network to learn shared and domain-specific knowledge. In addition, we propose a novel Dynamic Fusion Network (DF-Net), which automatically exploits the relevance between the target domain and each domain. Results show that our model outperforms existing methods on multi-domain dialogue, achieving state-of-the-art results. Furthermore, with little training data, we show its transferability by outperforming the prior best model by 13.9% on average.
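
The abstract does not spell out how the fusion is computed. As a rough illustration only, the sketch below assumes one plausible reading: each domain has a private feature vector, a softmax gate scores how relevant each domain is to the current input, and the relevance-weighted private features are added to the shared feature. The names `dynamic_fusion`, `gate_weights`, and the additive combination are hypothetical and are not taken from the paper.

```python
import math
from typing import Dict, List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def dynamic_fusion(
    shared: Vector,
    private: Dict[str, Vector],
    gate_weights: Dict[str, Vector],
) -> Vector:
    """Combine a shared feature with relevance-weighted private features.

    Assumption (not from the paper): relevance is a softmax over
    per-domain linear scores of the shared feature, and the fused
    private feature is simply added to the shared one.
    """
    domains = sorted(private)
    relevance = softmax([dot(gate_weights[d], shared) for d in domains])

    fused = [0.0] * len(shared)
    for weight, domain in zip(relevance, domains):
        for i, value in enumerate(private[domain]):
            fused[i] += weight * value

    return [s + f for s, f in zip(shared, fused)]


if __name__ == "__main__":
    shared = [1.0, 2.0]
    private = {"navigation": [1.0, 0.0], "scheduling": [0.0, 1.0]}
    # Zero gate weights give every domain the same relevance.
    gates = {"navigation": [0.0, 0.0], "scheduling": [0.0, 0.0]}
    print(dynamic_fusion(shared, private, gates))
```

Under these assumptions, an input from an unseen domain would still receive a mixture of the existing private features, which is one way the transfer behavior described in the abstract could arise.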

Similar Papers

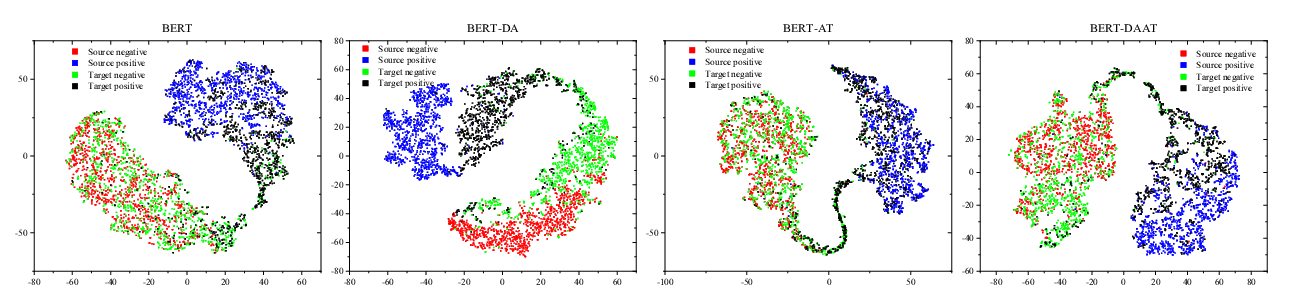

Adversarial and Domain-Aware BERT for Cross-Domain Sentiment Analysis

Chunning Du, Haifeng Sun, Jingyu Wang, Qi Qi, Jianxin Liao

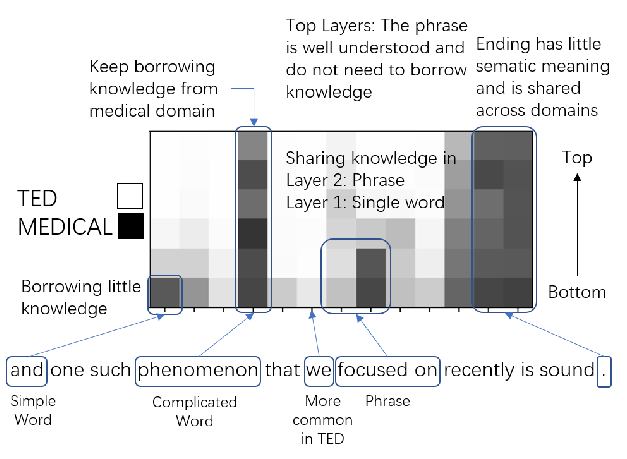

Multi-Domain Neural Machine Translation with Word-Level Adaptive Layer-wise Domain Mixing

Haoming Jiang, Chen Liang, Chong Wang, Tuo Zhao

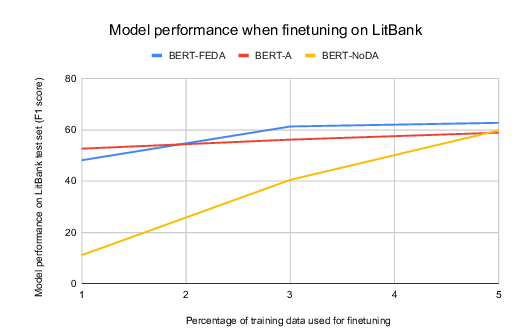

Towards Open Domain Event Trigger Identification using Adversarial Domain Adaptation

Aakanksha Naik, Carolyn Rose

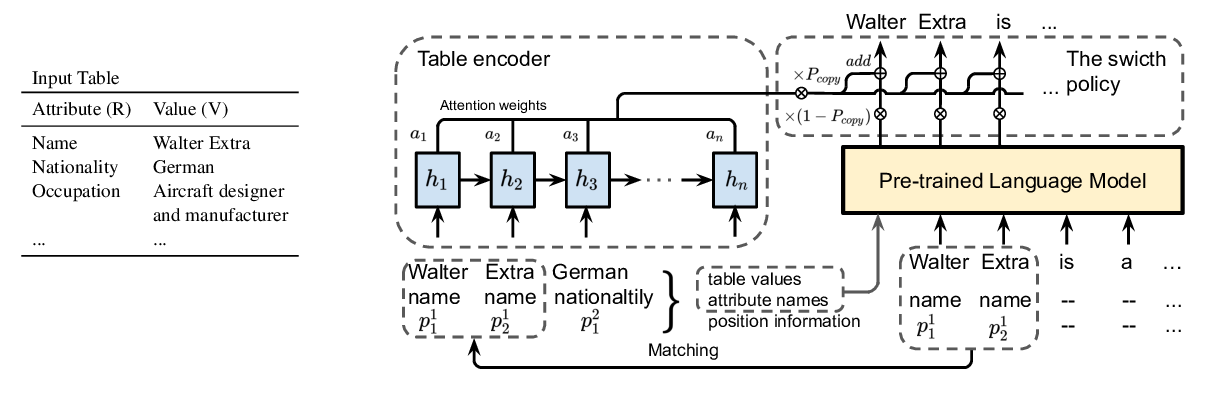

Few-Shot NLG with Pre-Trained Language Model

Zhiyu Chen, Harini Eavani, Wenhu Chen, Yinyin Liu, William Yang Wang