Coupling Distant Annotation and Adversarial Training for Cross-Domain Chinese Word Segmentation

Ning Ding, Dingkun Long, Guangwei Xu, Muhua Zhu, Pengjun Xie, Xiaobin Wang, Haitao Zheng

Phonology, Morphology and Word Segmentation Long Paper

Session 11B: Jul 8 (06:00-07:00 GMT)

Session 12B: Jul 8 (09:00-10:00 GMT)

Abstract:

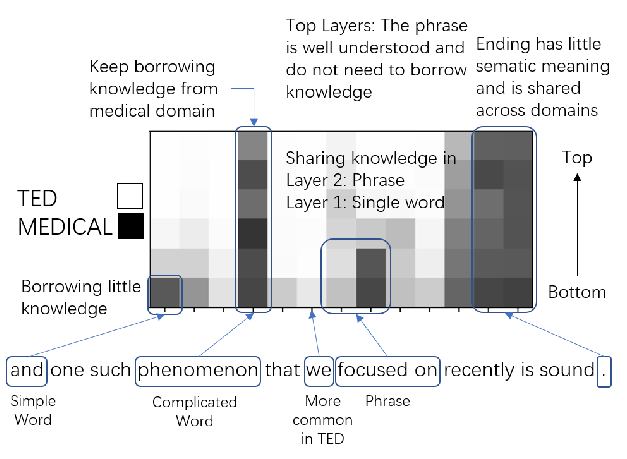

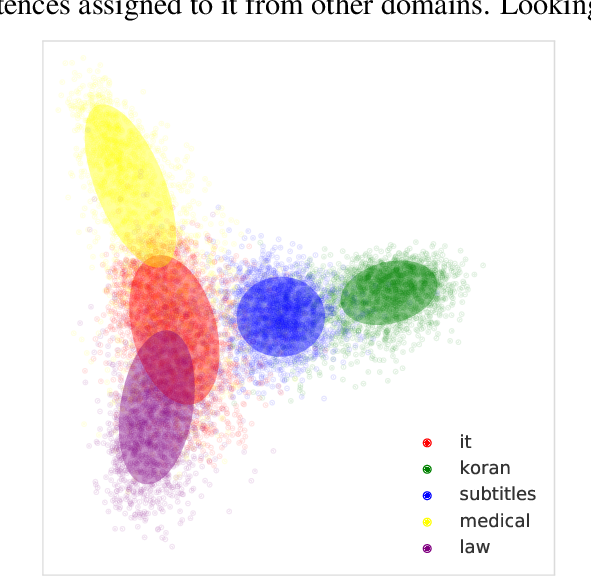

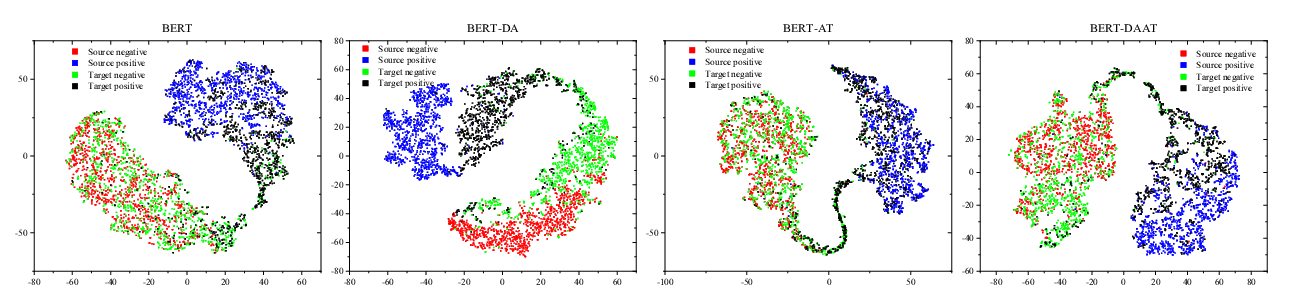

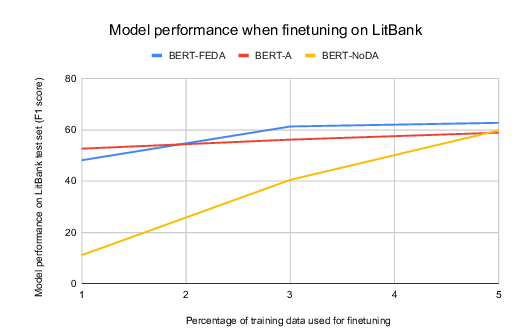

Fully supervised neural approaches have achieved significant progress in the task of Chinese word segmentation (CWS). Nevertheless, the performance of supervised models drops sharply when the domain shifts, owing to the distribution gap across domains and the out-of-vocabulary (OOV) problem. To alleviate both issues simultaneously, this paper couples distant annotation and adversarial training for cross-domain CWS. 1) We rethink the essence of "Chinese words" and design an automatic distant annotation mechanism that does not need any supervision or pre-defined dictionaries on the target domain. The method can effectively discover domain-specific words and distantly annotate raw text for the target domain. 2) We further develop a sentence-level adversarial training procedure to perform noise reduction and make maximum use of the source domain information. Experiments on multiple real-world datasets across various domains show the superiority and robustness of our model, which significantly outperforms previous state-of-the-art cross-domain CWS methods.
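To make the idea of distantly annotating raw target-domain text concrete, here is a minimal sketch. It is not the authors' mechanism: the abstract says domain-specific words are mined automatically, whereas this sketch assumes a small hand-written word set (`mined_words`, hypothetical) and swaps in plain forward maximum matching to segment the text. The helper names `max_match` and `to_bmes` are also illustrative assumptions. The output uses the common B/M/E/S character tagging scheme for CWS.

```python
from typing import List, Set


def max_match(text: str, vocab: Set[str], max_len: int = 4) -> List[str]:
    """Greedy forward maximum matching.

    At each position, take the longest substring (up to max_len characters)
    found in vocab; otherwise fall back to a single character.
    """
    words: List[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking down to one character.
        for size in range(min(max_len, len(text) - i), 0, -1):
            cand = text[i:i + size]
            if size == 1 or cand in vocab:
                words.append(cand)
                i += size
                break
    return words


def to_bmes(words: List[str]) -> List[str]:
    """Convert a word sequence into per-character B/M/E/S tags."""
    tags: List[str] = []
    for w in words:
        if len(w) == 1:
            tags.append("S")  # single-character word
        else:
            # Begin, zero or more Middle, then End.
            tags.extend(["B"] + ["M"] * (len(w) - 2) + ["E"])
    return tags


# Hypothetical word set standing in for automatically mined domain words.
mined_words = {"溶酶体", "细胞"}
raw_sentence = "溶酶体在细胞内"

segmented = max_match(raw_sentence, mined_words)
labels = to_bmes(segmented)
```

Labels produced this way are noisy, since greedy matching can split or merge words incorrectly. That noise is the kind of problem the abstract's sentence-level adversarial training step is described as reducing.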


Similar Papers

Adversarial and Domain-Aware BERT for Cross-Domain Sentiment Analysis

Chunning Du, Haifeng Sun, Jingyu Wang, Qi Qi, Jianxin Liao

Towards Open Domain Event Trigger Identification using Adversarial Domain Adaptation

Aakanksha Naik, Carolyn Rose

Multi-Domain Neural Machine Translation with Word-Level Adaptive Layer-wise Domain Mixing

Haoming Jiang, Chen Liang, Chong Wang, Tuo Zhao