Negated and Misprimed Probes for Pretrained Language Models: Birds Can Talk, But Cannot Fly

Nora Kassner, Hinrich Schütze

Theme Short Paper

Session 13B: Jul 8 (13:00-14:00 GMT)

Session 15B: Jul 8 (21:00-22:00 GMT)

Abstract:

Building on Petroni et al. (2019), we propose two new probing tasks analyzing factual knowledge stored in Pretrained Language Models (PLMs). (1) Negation. We find that PLMs do not distinguish between negated ("Birds cannot [MASK]") and non-negated ("Birds can [MASK]") cloze questions. (2) Mispriming. Inspired by priming methods in human psychology, we add "misprimes" to cloze questions ("Talk? Birds can [MASK]"). We find that PLMs are easily distracted by misprimes. These results suggest that PLMs still have a long way to go to adequately learn human-like factual knowledge.
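As a rough illustration of the two probe types described in the abstract, the sketch below builds the original, negated, and misprimed cloze questions from the abstract's own example. The helper names `build_probes` and `top1_agreement` are hypothetical and not taken from the paper. The agreement score is only one simple way to compare a model's top predictions across two probe variants; it assumes the predictions have already been obtained from some masked language model.

```python
from typing import Dict, List

MASK = "[MASK]"


def build_probes(subject: str, verb: str, negated_verb: str, misprime: str) -> Dict[str, str]:
    """Build the original, negated, and misprimed cloze questions (hypothetical helper)."""
    original = f"{subject} {verb} {MASK}"
    return {
        "original": original,
        # Negation probe: same cloze with the verb negated.
        "negated": f"{subject} {negated_verb} {MASK}",
        # Mispriming probe: a distracting word followed by "?" is prepended.
        "misprimed": f"{misprime}? {original}",
    }


def top1_agreement(preds_a: List[str], preds_b: List[str]) -> float:
    """Fraction of paired probes whose top-1 predictions are identical."""
    if len(preds_a) != len(preds_b):
        raise ValueError("prediction lists must have the same length")
    if not preds_a:
        return 0.0
    matches = sum(a == b for a, b in zip(preds_a, preds_b))
    return matches / len(preds_a)


probes = build_probes("Birds", "can", "cannot", "Talk")
for name, text in probes.items():
    print(f"{name}: {text}")
```

A high agreement between predictions for the original and negated probes would be consistent with the abstract's finding that the model does not distinguish the two; the prediction lists themselves must come from a real model and are not supplied here.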

Similar Papers

Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data

Emily M. Bender, Alexander Koller

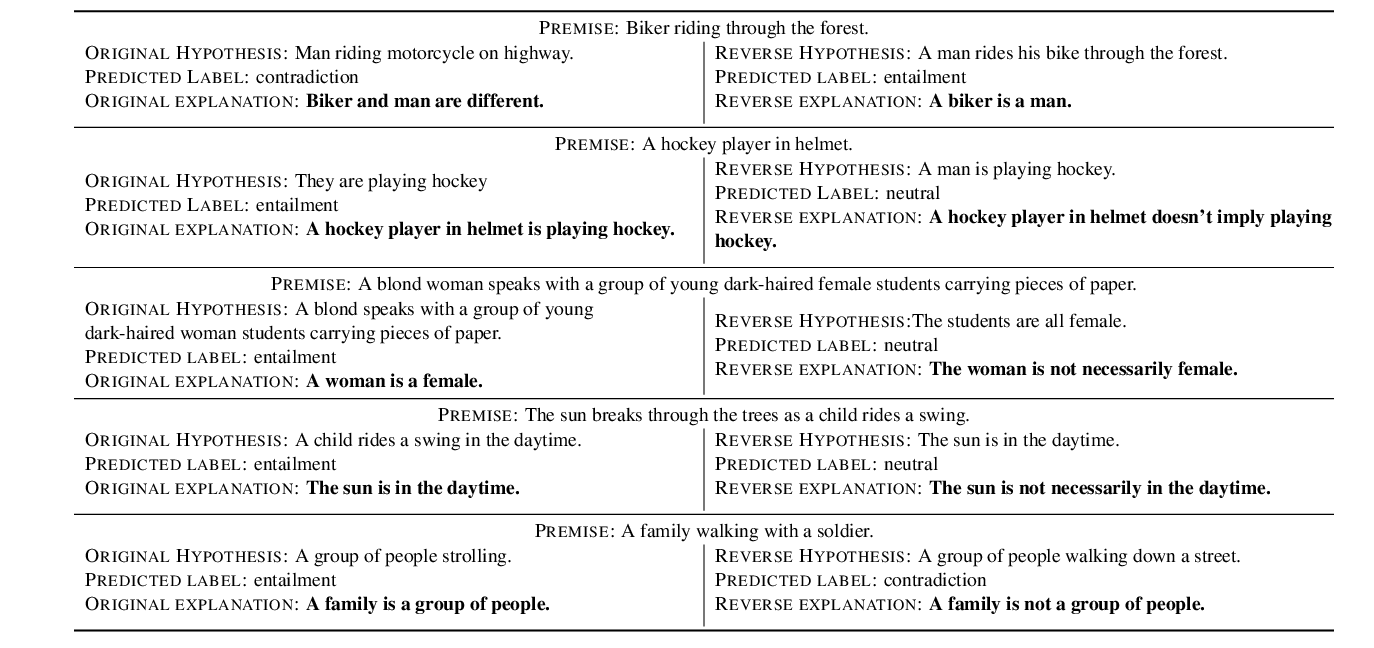

Make Up Your Mind! Adversarial Generation of Inconsistent Natural Language Explanations

Oana-Maria Camburu, Brendan Shillingford, Pasquale Minervini, Thomas Lukasiewicz, Phil Blunsom

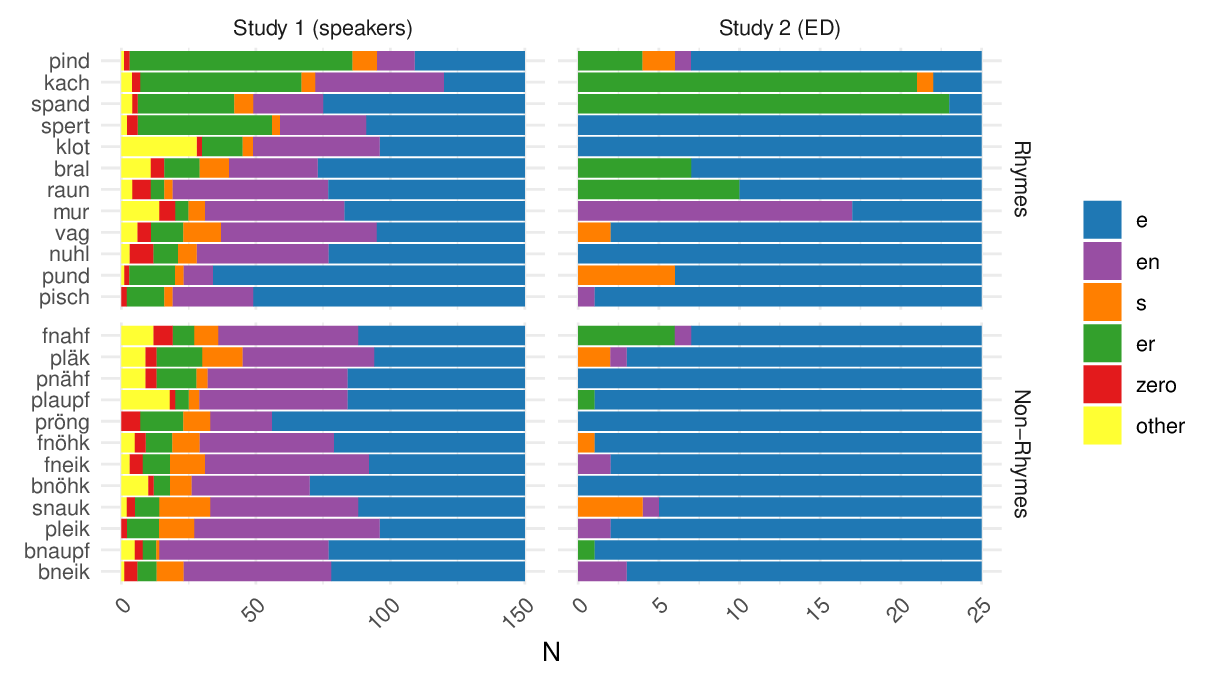

Inflecting When There's No Majority: Limitations of Encoder-Decoder Neural Networks as Cognitive Models for German Plurals

Kate McCurdy, Sharon Goldwater, Adam Lopez

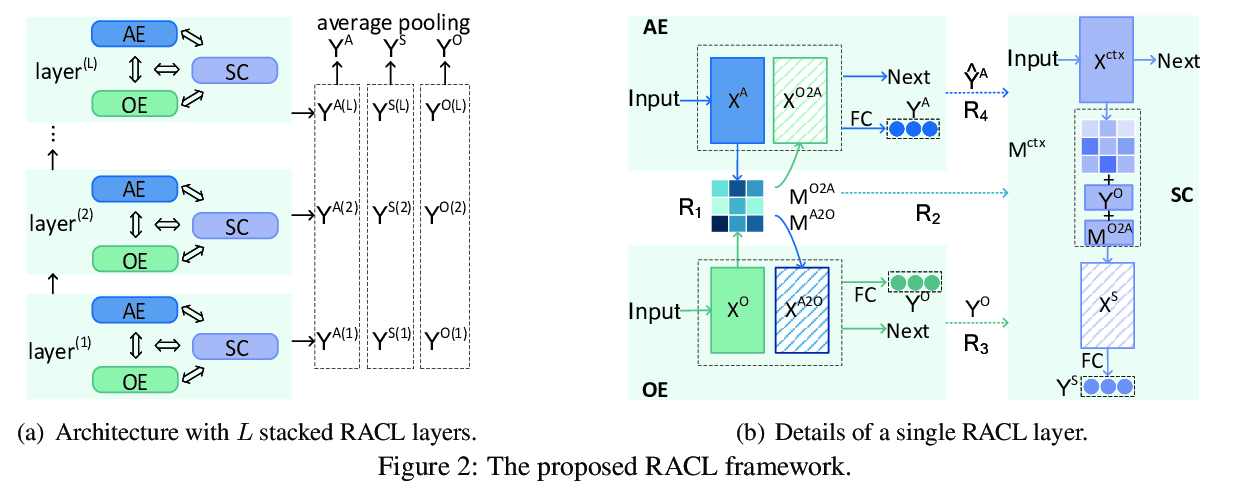

Relation-Aware Collaborative Learning for Unified Aspect-Based Sentiment Analysis

Zhuang Chen, Tieyun Qian