Document-Level Event Role Filler Extraction using Multi-Granularity Contextualized Encoding

Xinya Du, Claire Cardie

Information Extraction (Long Paper)

Session 14A: Jul 8 (17:00-18:00 GMT)

Session 15A: Jul 8 (20:00-21:00 GMT)

Abstract:

Few works in the literature of event extraction have gone beyond individual sentences to make extraction decisions. This is problematic when the information needed to recognize an event argument is spread across multiple sentences. We argue that document-level event extraction is a difficult task since it requires a view of a larger context to determine which spans of text correspond to event role fillers. We first investigate how end-to-end neural sequence models (with pre-trained language model representations) perform on document-level role filler extraction, as well as how the length of context captured affects the models’ performance. To dynamically aggregate information captured by neural representations learned at different levels of granularity (e.g., the sentence- and paragraph-level), we propose a novel multi-granularity reader. We evaluate our models on the MUC-4 event extraction dataset, and show that our best system performs substantially better than prior work. We also report findings on the relationship between context length and neural model performance on the task.
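The abstract does not say how the multi-granularity reader combines its sentence-level and paragraph-level representations. One common way to "dynamically aggregate" two views of the same token is a learned gate that decides, per dimension, how much to take from each view. The sketch below illustrates that general idea only and may differ from the authors' model. The function name `gated_fusion`, the element-wise weights `w_sent` and `w_para`, and `bias` are all hypothetical, and a real model would learn these parameters rather than set them by hand.

```python
import math
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(
    sent_vec: Vector,
    para_vec: Vector,
    w_sent: Vector,
    w_para: Vector,
    bias: Vector,
) -> Vector:
    """Blend two representations of one token, dimension by dimension.

    Hypothetical simplification: the gate uses element-wise weights
    instead of full weight matrices, so each dimension is gated
    independently of the others.
    """
    fused = []
    for s, p, ws, wp, b in zip(sent_vec, para_vec, w_sent, w_para, bias):
        gate = sigmoid(ws * s + wp * p + b)  # share taken from the sentence view
        fused.append(gate * s + (1.0 - gate) * p)
    return fused


# Toy token vectors from a sentence-level and a paragraph-level encoder.
sentence_view = [1.0, 0.0]
paragraph_view = [0.0, 2.0]

# With zero weights and zero bias the gate is 0.5, so the result is the average.
averaged = gated_fusion(sentence_view, paragraph_view, [0.0, 0.0], [0.0, 0.0], [0.0, 0.0])
```

A strongly positive bias pushes the gate toward 1 and the output toward the sentence-level view; a strongly negative bias favours the paragraph-level view.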



Similar Papers

Multi-Sentence Argument Linking

Seth Ebner, Patrick Xia, Ryan Culkin, Kyle Rawlins, Benjamin Van Durme,

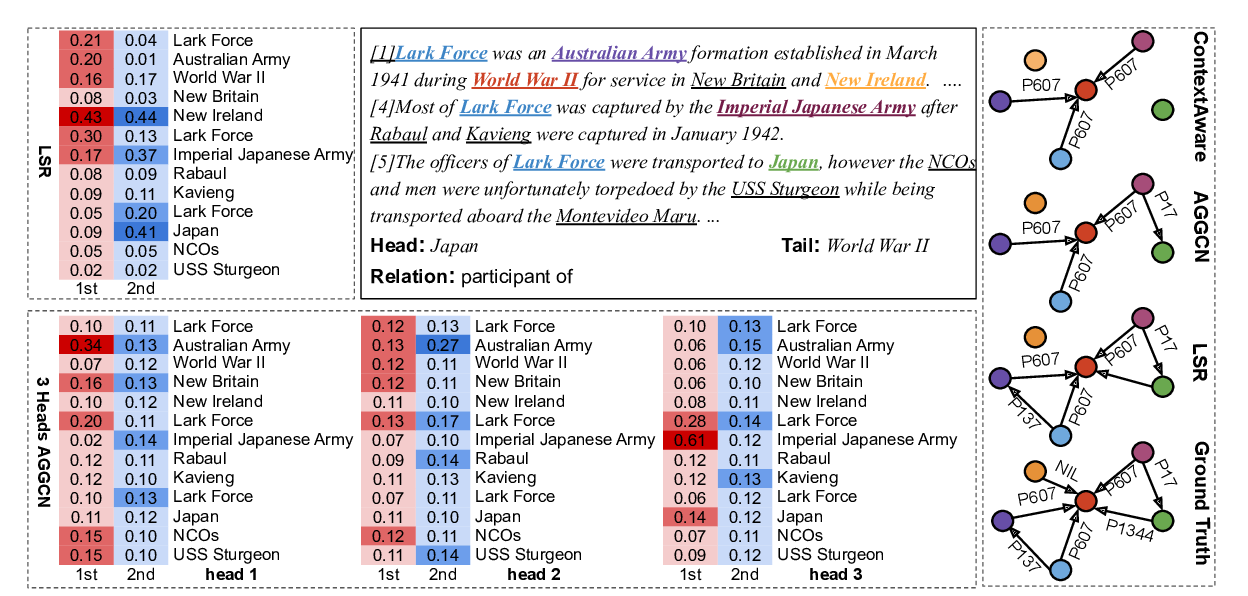

Reasoning with Latent Structure Refinement for Document-Level Relation Extraction

Guoshun Nan, Zhijiang Guo, Ivan Sekulic, Wei Lu,

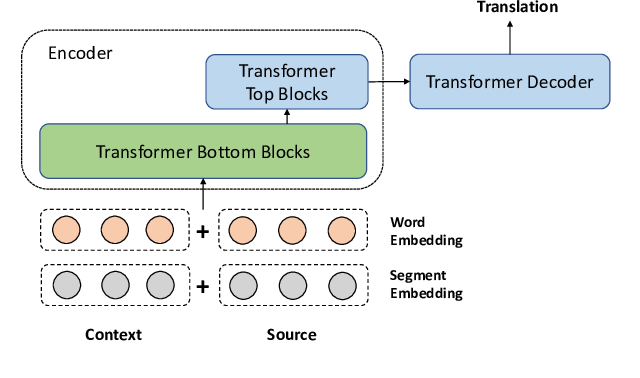

A Simple and Effective Unified Encoder for Document-Level Machine Translation

Shuming Ma, Dongdong Zhang, Ming Zhou,

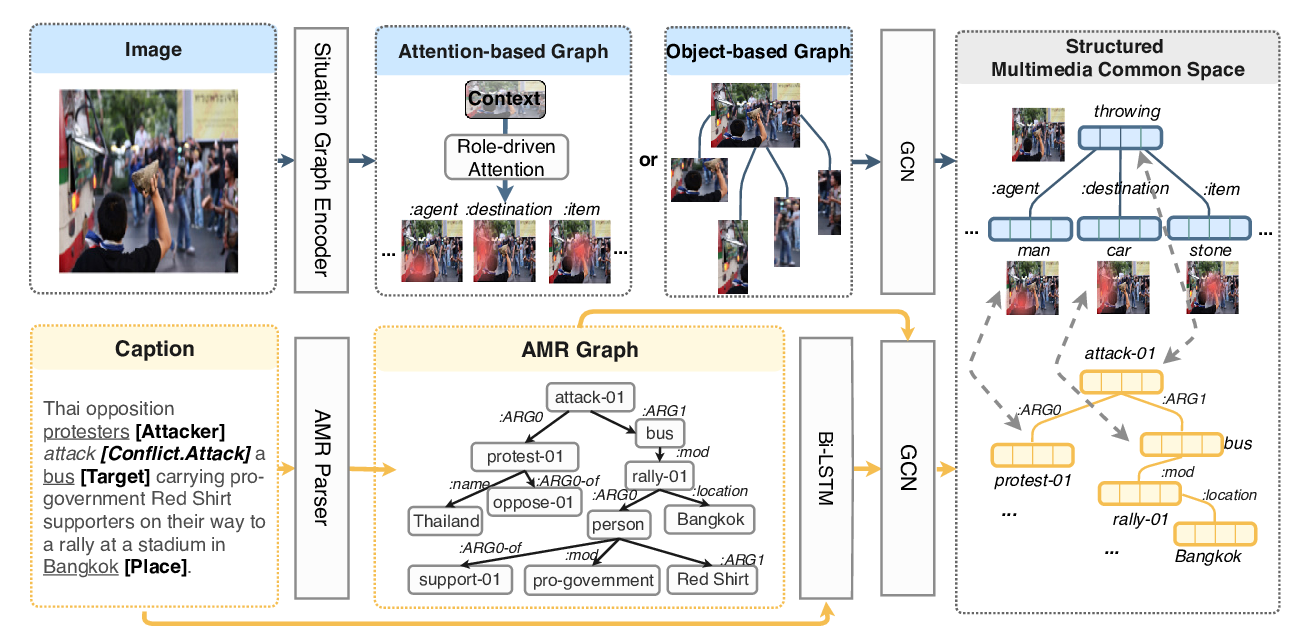

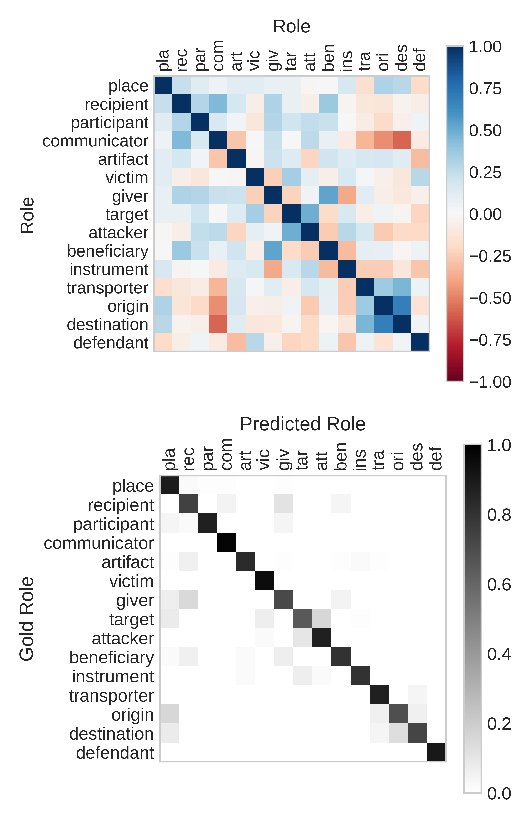

Cross-media Structured Common Space for Multimedia Event Extraction

Manling Li, Alireza Zareian, Qi Zeng, Spencer Whitehead, Di Lu, Heng Ji, Shih-Fu Chang