A negative case analysis of visual grounding methods for VQA

Robik Shrestha, Kushal Kafle, Christopher Kanan

Language Grounding to Vision, Robotics and Beyond Short Paper

Session 14A: Jul 8

(17:00-18:00 GMT / 17:00-18:00 GMT)

Session 15A: Jul 8

(20:00-21:00 GMT / 20:00-21:00 GMT)

Abstract:

Existing Visual Question Answering (VQA) methods tend to exploit dataset biases and spurious statistical correlations, instead of producing right answers for the right reasons. To address this issue, recent bias mitigation methods for VQA propose to incorporate visual cues (e.g., human attention maps) to better ground the VQA models, showcasing impressive gains. However, we show that the performance improvements are not a result of improved visual grounding, but a regularization effect which prevents over-fitting to linguistic priors. For instance, we find that it is not actually necessary to provide proper, human-based cues; random, insensible cues also result in similar improvements. Based on this observation, we propose a simpler regularization scheme that does not require any external annotations and yet achieves near state-of-the-art performance on VQA-CPv2.

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

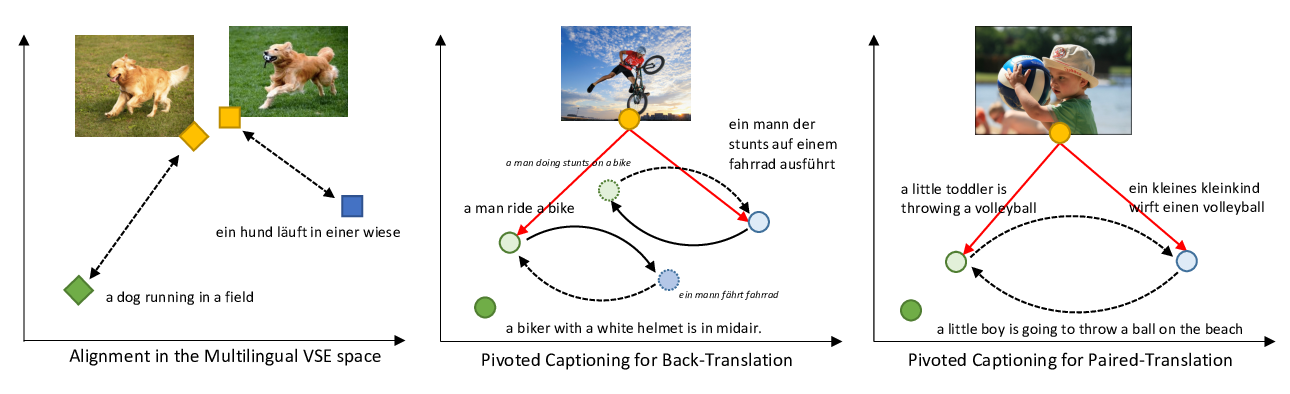

Unsupervised Multimodal Neural Machine Translation with Pseudo Visual Pivoting

Po-Yao Huang, Junjie Hu, Xiaojun Chang, Alexander Hauptmann,

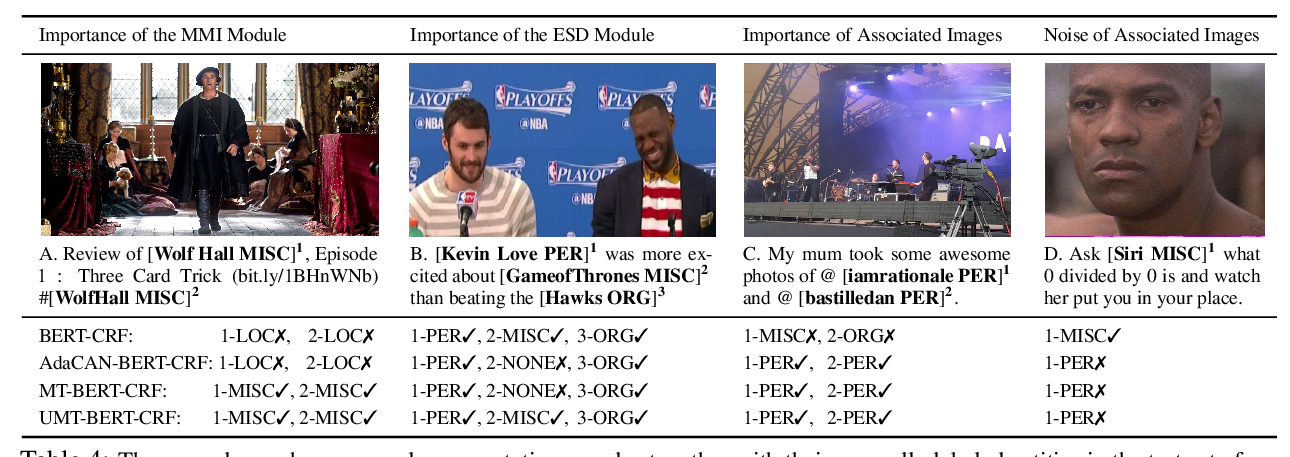

Improving Multimodal Named Entity Recognition via Entity Span Detection with Unified Multimodal Transformer

Jianfei Yu, Jing Jiang, Li Yang, Rui Xia,

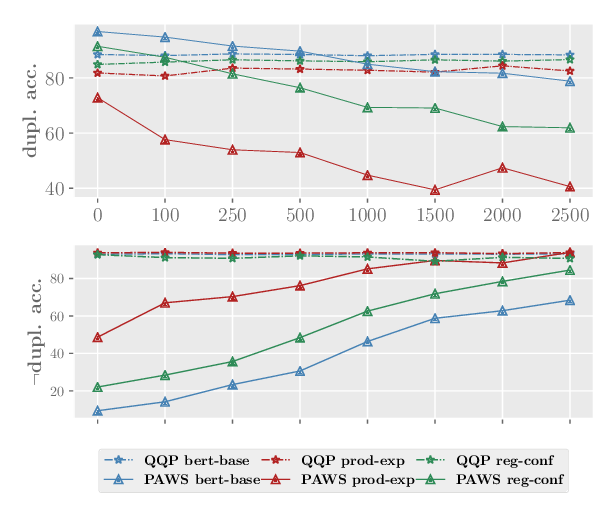

Mind the Trade-off: Debiasing NLU Models without Degrading the In-distribution Performance

Prasetya Ajie Utama, Nafise Sadat Moosavi, Iryna Gurevych,

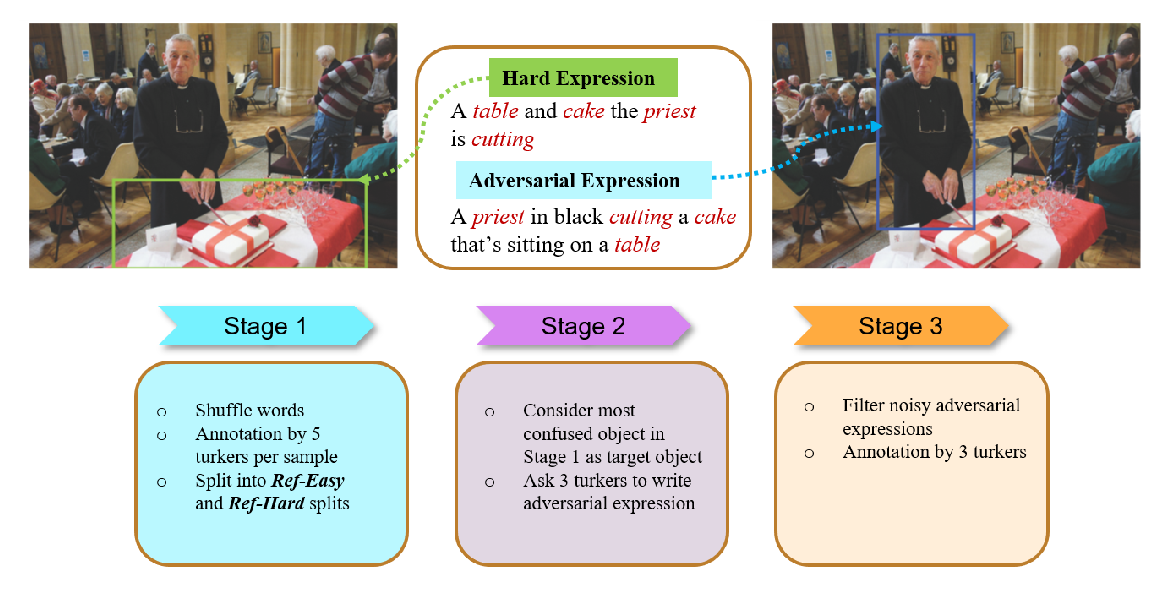

Words Aren't Enough, Their Order Matters: On the Robustness of Grounding Visual Referring Expressions

Arjun Akula, Spandana Gella, Yaser Al-Onaizan, Song-Chun Zhu, Siva Reddy,