Quantifying Attention Flow in Transformers

Samira Abnar, Willem Zuidema

Interpretability and Analysis of Models for NLP Short Paper

Session 7B: Jul 7 (09:00-10:00 GMT)

Session 8B: Jul 7 (13:00-14:00 GMT)

Abstract:

In the Transformer model, “self-attention” combines information from attended embeddings into the representation of the focal embedding in the next layer. Thus, across layers of the Transformer, information originating from different tokens gets increasingly mixed. This makes attention weights unreliable as explanation probes. In this paper, we consider the problem of quantifying this flow of information through self-attention. We propose two methods for approximating the attention to input tokens given attention weights, attention rollout and attention flow, as post hoc methods when we use attention weights as the relative relevance of the input tokens. We show that these methods give complementary views on the flow of information, and compared to raw attention, both yield higher correlations with importance scores of input tokens obtained using an ablation method and input gradients.
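As a rough illustration of the rollout idea mentioned in the abstract, the sketch below multiplies per-layer attention matrices together so that each row approximates how much a token at the top layer draws on each input token. It is a minimal sketch under stated assumptions, not the authors' implementation: the attention matrices are assumed to be already averaged over heads, residual connections are modeled as an equal mix of attention and identity (0.5·A + 0.5·I), and the function names and toy matrices are hypothetical. Attention flow, which treats the attention graph as a max-flow problem, is not covered here.

```python
def matmul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def add_residual(attn):
    """Assumption: model the residual connection as 0.5 * A + 0.5 * I."""
    n = len(attn)
    return [[0.5 * attn[i][j] + (0.5 if i == j else 0.0) for j in range(n)]
            for i in range(n)]


def attention_rollout(layers):
    """Combine per-layer attention into attention over the input tokens.

    layers: list of head-averaged attention matrices, lowest layer first;
    each row is a distribution over the tokens of the layer below.
    """
    rollout = add_residual(layers[0])
    for attn in layers[1:]:
        # Attention of the current layer, routed through everything below it.
        rollout = matmul(add_residual(attn), rollout)
    return rollout


# Hypothetical toy input: 2 layers, 3 tokens.
layers = [
    [[1.0, 0.0, 0.0], [0.5, 0.5, 0.0], [0.0, 0.5, 0.5]],
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0]],
]
rollout = attention_rollout(layers)
for row in rollout:
    print(row)
```

In this toy case the raw top-layer attention of the last token points only at the second position, while its rollout row spreads weight over all three input tokens, which is the kind of mixing across layers the abstract describes.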


Similar Papers

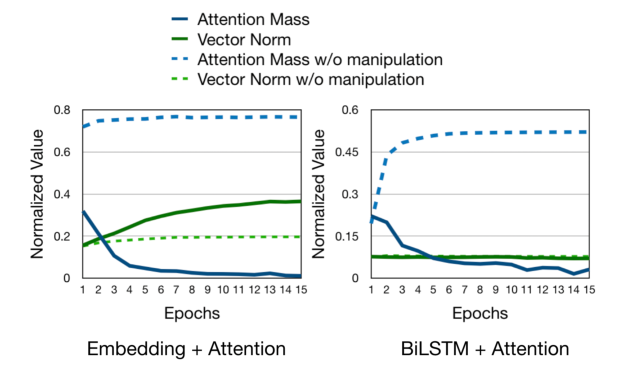

Self-Attention is Not Only a Weight: Analyzing BERT with Vector Norms

Goro Kobayashi, Tatsuki Kuribayashi, Sho Yokoi, Kentaro Inui

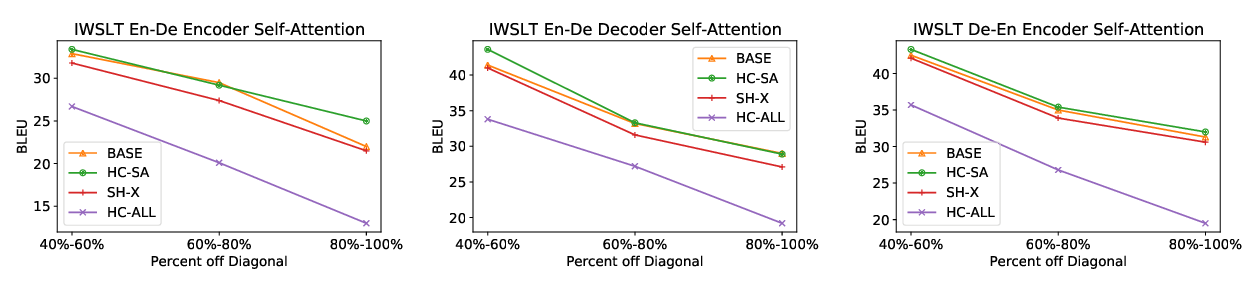

Learning to Deceive with Attention-Based Explanations

Danish Pruthi, Mansi Gupta, Bhuwan Dhingra, Graham Neubig, Zachary C. Lipton