How much complexity does an RNN architecture need to learn syntax-sensitive dependencies?

Gantavya Bhatt, Hritik Bansal, Rishubh Singh, Sumeet Agarwal

Student Research Workshop (SRW) Paper

Session 7B: Jul 7 (09:00-10:00 GMT)

Session 8A: Jul 7 (12:00-13:00 GMT)

Abstract:

Long short-term memory (LSTM) networks and their variants are capable of encapsulating long-range dependencies, which is evident from their performance on a variety of linguistic tasks. On the other hand, simple recurrent networks (SRNs), which appear more biologically grounded in terms of synaptic connections, have generally been less successful at capturing long-range dependencies as well as the loci of grammatical errors in an unsupervised setting. In this paper, we seek to develop models that bridge the gap between biological plausibility and linguistic competence. We propose a new architecture, the Decay RNN, which incorporates the decaying nature of neuronal activations and models the excitatory and inhibitory connections in a population of neurons. Besides its biological inspiration, our model also shows competitive performance relative to LSTMs on subject-verb agreement, sentence grammaticality, and language modeling tasks. These results provide some pointers towards probing the nature of the inductive biases required for RNN architectures to model linguistic phenomena successfully.
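The abstract names two ingredients, decaying activations and separate excitatory and inhibitory connections, without giving an update rule. The sketch below is one possible reading, not the authors' formulation: a leaky recurrent update in which each presynaptic unit carries a fixed sign. The names `decay_rnn_step`, `run_sequence`, `alpha`, and `signs`, the `tanh` nonlinearity, and the 80/20 excitatory-to-inhibitory split are all assumptions made for illustration.

```python
import math
import random


def decay_rnn_step(h_prev, x, W, U, b, signs, alpha):
    """One update of a hypothetical decaying recurrent cell.

    h_prev: previous hidden state (list of n floats)
    x:      current input (list of m floats)
    W:      n x n recurrent weights; only their magnitudes are used
    U:      n x m input weights
    b:      bias (list of n floats)
    signs:  +1 (excitatory) or -1 (inhibitory) per presynaptic unit
    alpha:  decay factor in [0, 1]; larger values retain more of h_prev
    """
    n = len(h_prev)
    h_new = []
    for i in range(n):
        # Unit j always contributes with the same sign (assumed constraint).
        rec = sum(abs(W[i][j]) * signs[j] * h_prev[j] for j in range(n))
        inp = sum(U[i][k] * x[k] for k in range(len(x)))
        candidate = math.tanh(rec + inp + b[i])
        # Leaky integration: the old activation decays toward the candidate.
        h_new.append(alpha * h_prev[i] + (1.0 - alpha) * candidate)
    return h_new


def run_sequence(xs, n_hidden, alpha=0.8, seed=0):
    """Run the cell over a list of input vectors with random weights."""
    rng = random.Random(seed)
    m = len(xs[0])
    W = [[rng.gauss(0, 0.5) for _ in range(n_hidden)] for _ in range(n_hidden)]
    U = [[rng.gauss(0, 0.5) for _ in range(m)] for _ in range(n_hidden)]
    b = [0.0] * n_hidden
    # Assumed split: roughly 80% excitatory and 20% inhibitory units.
    signs = [1 if rng.random() < 0.8 else -1 for _ in range(n_hidden)]
    h = [0.0] * n_hidden
    for x in xs:
        h = decay_rnn_step(h, x, W, U, b, signs, alpha)
    return h
```

In this sketch, `alpha = 1.0` freezes the hidden state and `alpha = 0.0` reduces the cell to a plain recurrent update with sign-constrained weights, so the decay factor sets how quickly past activations fade.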


Similar Papers

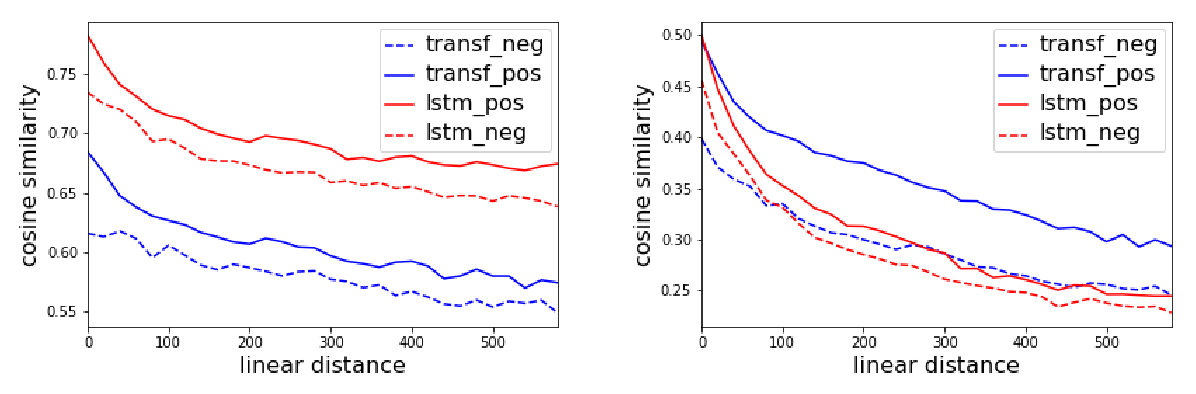

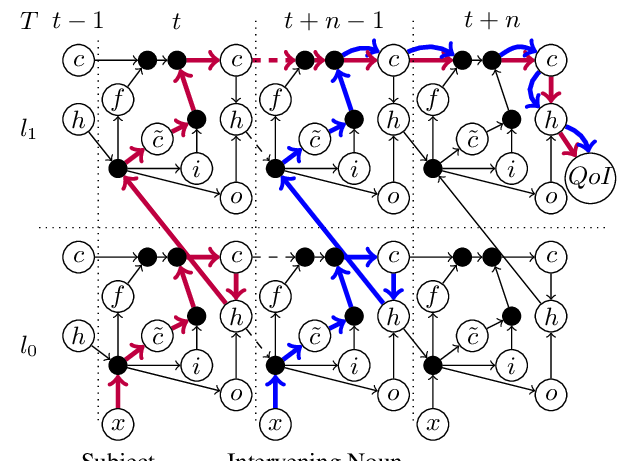

Influence Paths for Characterizing Subject-Verb Number Agreement in LSTM Language Models

Kaiji Lu, Piotr Mardziel, Klas Leino, Matt Fredrikson, Anupam Datta

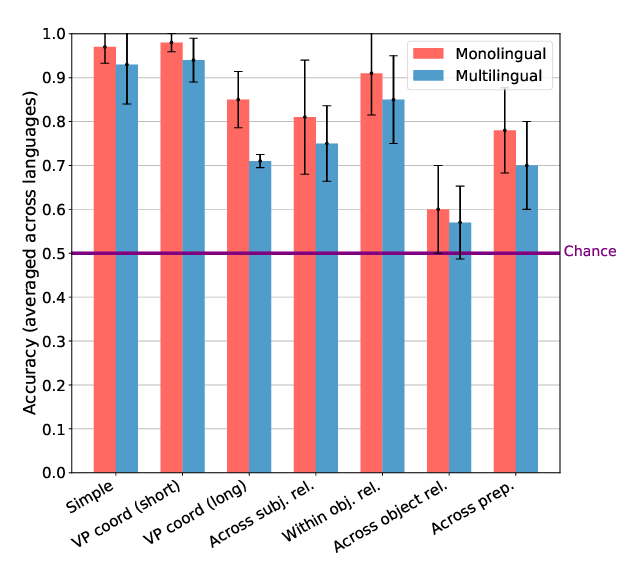

Cross-Linguistic Syntactic Evaluation of Word Prediction Models

Aaron Mueller, Garrett Nicolai, Panayiota Petrou-Zeniou, Natalia Talmina, Tal Linzen

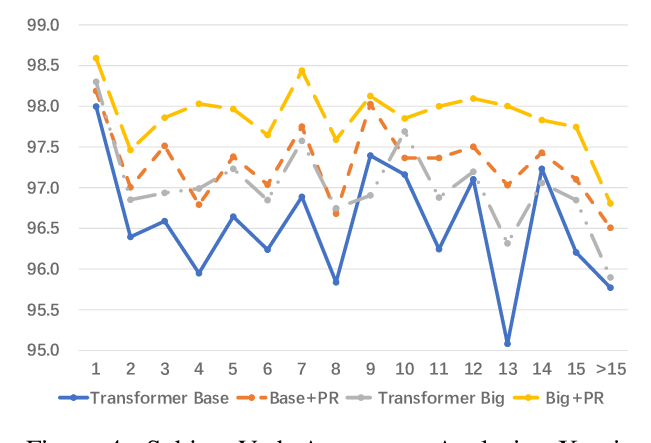

Learning Source Phrase Representations for Neural Machine Translation

Hongfei Xu, Josef van Genabith, Deyi Xiong, Qiuhui Liu, Jingyi Zhang

Probing for Referential Information in Language Models

Ionut-Teodor Sorodoc, Kristina Gulordava, Gemma Boleda