Interactive Task Learning from GUI-Grounded Natural Language Instructions and Demonstrations

Toby Jia-Jun Li, Tom Mitchell, Brad Myers

System Demonstrations Demo Paper

Demo Session 4B-2: Jul 7 (17:45-18:45 GMT)

Demo Session 5B-2: Jul 7 (20:45-21:45 GMT)

Abstract:

We show SUGILITE, an intelligent task automation agent that can learn new tasks and relevant associated concepts interactively from the user's natural language instructions and demonstrations, using the graphical user interfaces (GUIs) of third-party mobile apps. This system provides several interesting features: (1) it allows users to teach new task procedures and concepts through verbal instructions together with demonstration of the steps of a script using GUIs; (2) it supports users in clarifying their intents for demonstrated actions using GUI-grounded verbal instructions; (3) it infers parameters of tasks and their possible values in utterances using the hierarchical structures of the underlying app GUIs; and (4) it generalizes taught concepts to different contexts and task domains. We describe the architecture of the SUGILITE system, explain the design and implementation of its key features, and show a prototype in the form of a conversational assistant on Android.


Similar Papers

Mapping Natural Language Instructions to Mobile UI Action Sequences

Yang Li, Jiacong He, Xin Zhou, Yuan Zhang, Jason Baldridge

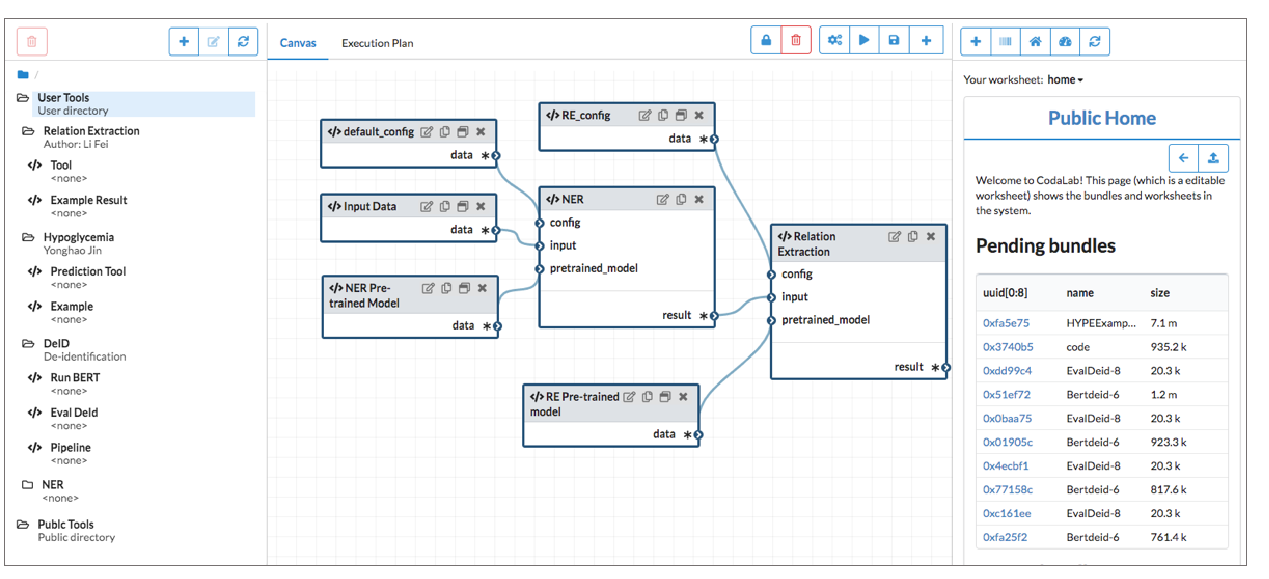

BENTO: A Visual Platform for Building Clinical NLP Pipelines Based on CodaLab

Yonghao Jin, Fei Li, Hong Yu

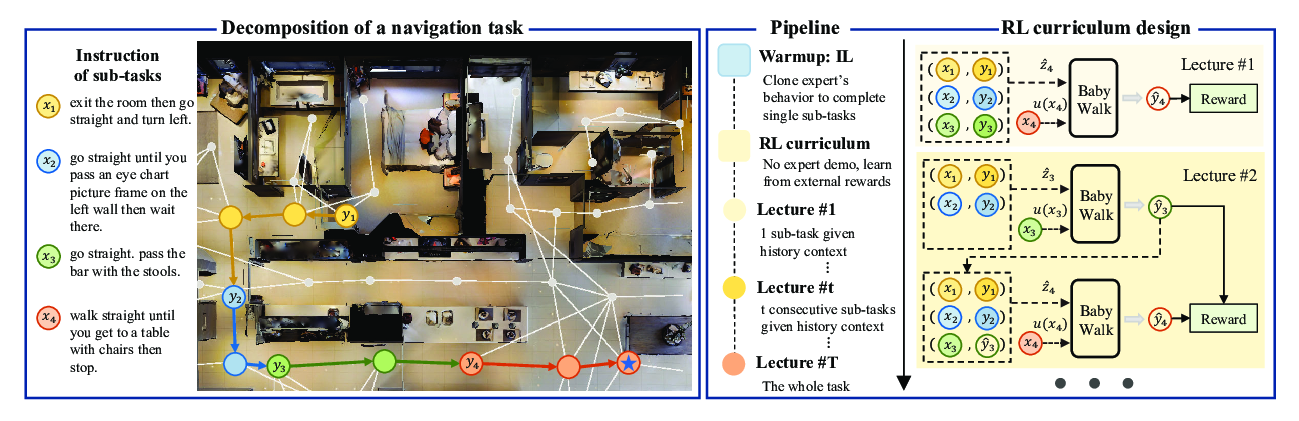

BabyWalk: Going Farther in Vision-and-Language Navigation by Taking Baby Steps

Wang Zhu, Hexiang Hu, Jiacheng Chen, Zhiwei Deng, Vihan Jain, Eugene Ie, Fei Sha

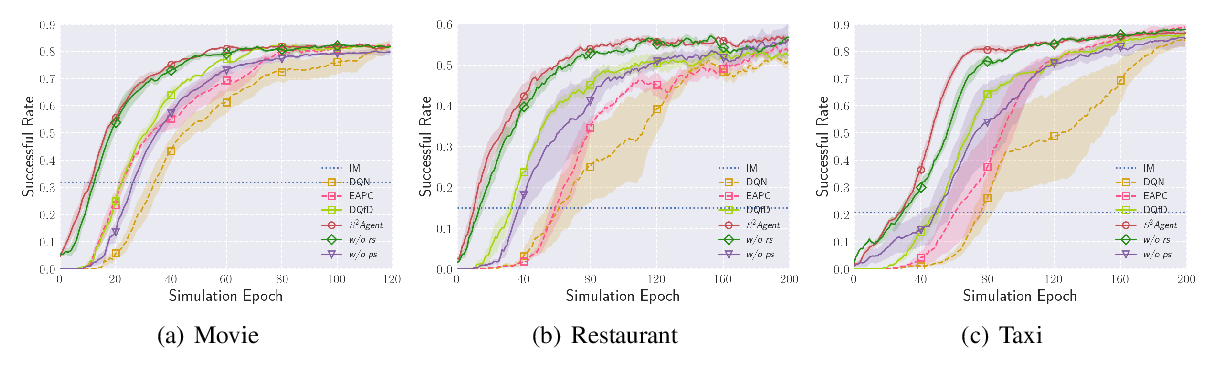

Learning Efficient Dialogue Policy from Demonstrations through Shaping

Huimin Wang, Baolin Peng, Kam-Fai Wong