Multimodal Quality Estimation for Machine Translation

Shu Okabe, Frédéric Blain, Lucia Specia

Resources and Evaluation Short Paper

Session 2A: Jul 6 (08:00-09:00 GMT)

Session 3A: Jul 6 (12:00-13:00 GMT)

Abstract:

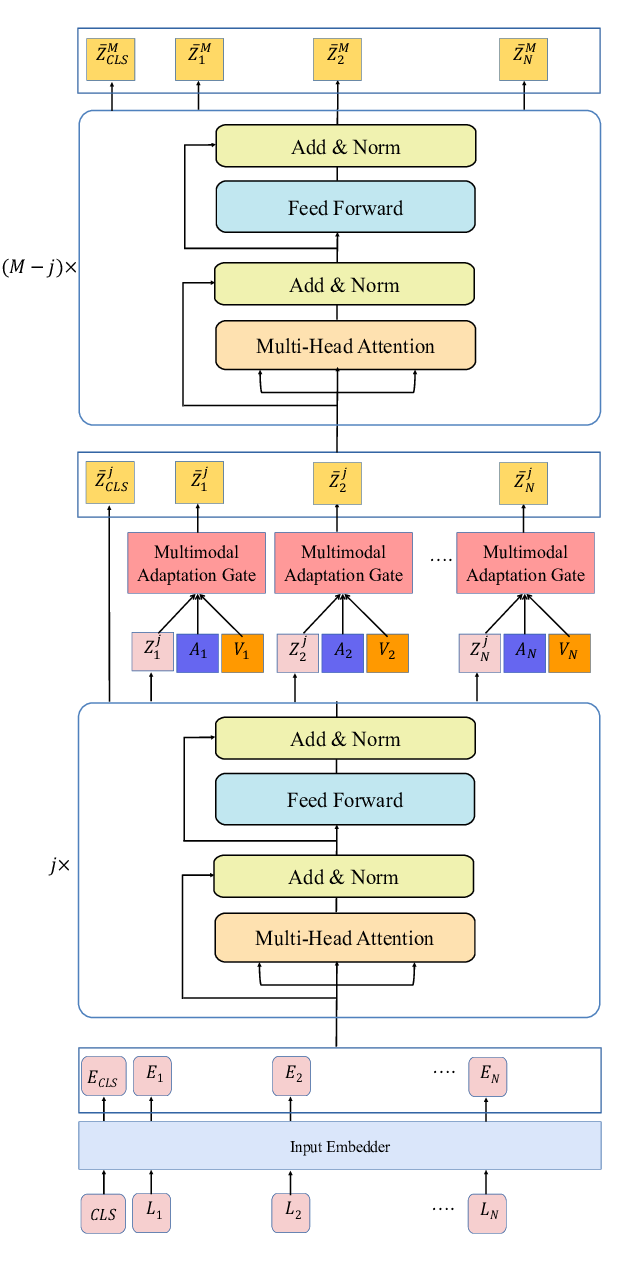

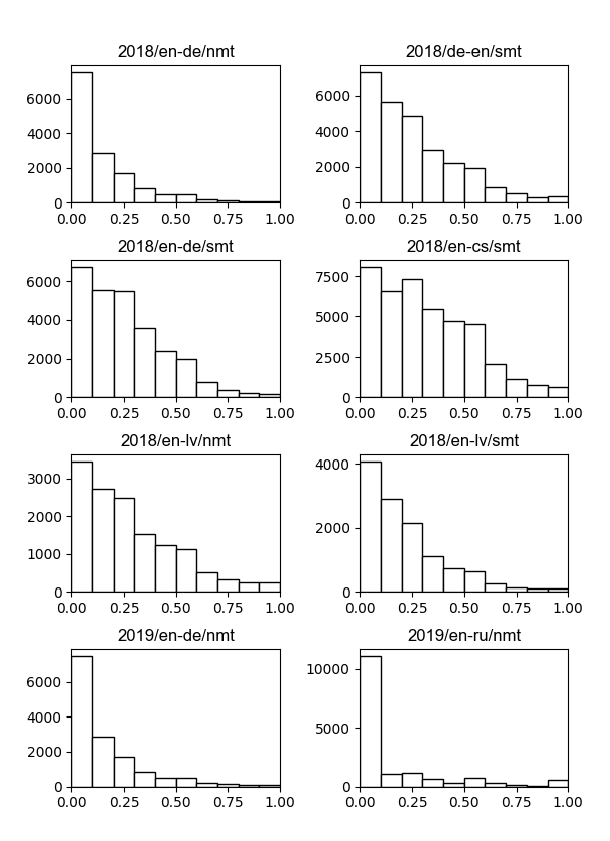

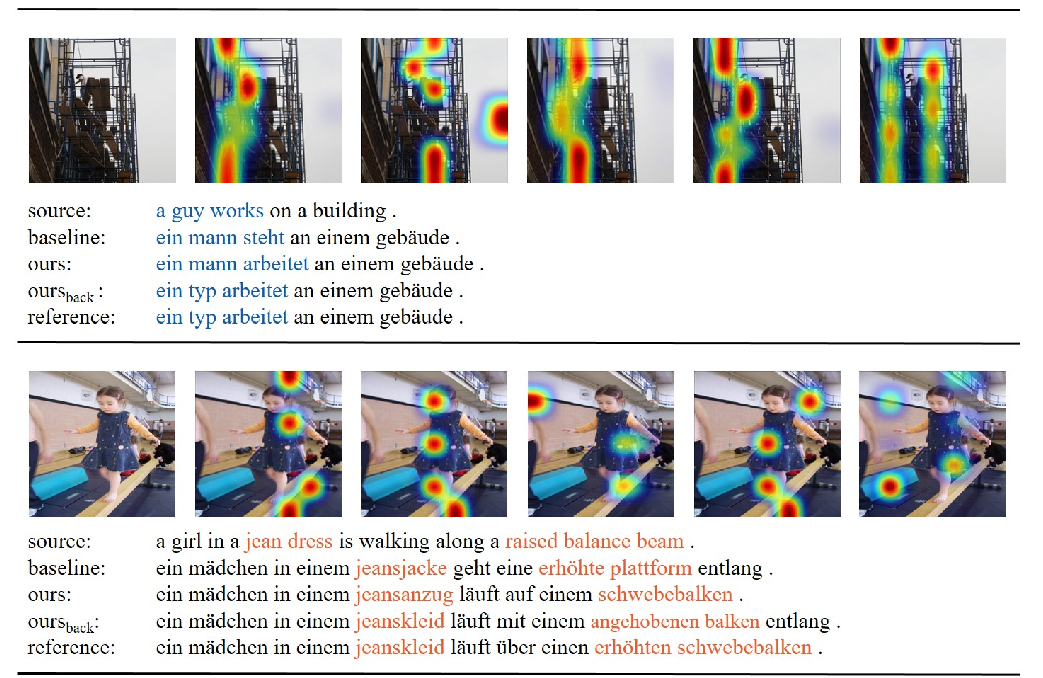

We propose approaches to Quality Estimation (QE) for Machine Translation that explore both text and visual modalities for Multimodal QE. We compare various multimodality integration and fusion strategies. For both sentence-level and document-level predictions, we show that state-of-the-art neural and feature-based QE frameworks obtain better results when using the additional modality.
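
The abstract does not name the fusion strategies that were compared. As a generic illustration only, the sketch below shows two common ways of combining a text feature vector with a visual feature vector before a quality score is predicted: concatenation and element-wise multiplication. All names here (`fuse_concat`, `fuse_multiply`, `predict_quality`), the toy feature values, and the linear scorer are assumptions made for illustration, not the authors' models or frameworks.

```python
from typing import List

Vector = List[float]


def fuse_concat(text_vec: Vector, visual_vec: Vector) -> Vector:
    """Concatenation fusion: place the two feature vectors side by side."""
    return list(text_vec) + list(visual_vec)


def fuse_multiply(text_vec: Vector, visual_vec: Vector) -> Vector:
    """Element-wise multiplication fusion; both vectors must have equal length."""
    if len(text_vec) != len(visual_vec):
        raise ValueError("element-wise fusion needs vectors of equal length")
    return [t * v for t, v in zip(text_vec, visual_vec)]


def predict_quality(features: Vector, weights: Vector, bias: float = 0.0) -> float:
    """Hypothetical linear scorer standing in for a QE model's output layer."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have equal length")
    return sum(f * w for f, w in zip(features, weights)) + bias


if __name__ == "__main__":
    # Toy values; real systems would use learned text and image representations.
    text_features = [0.5, 1.0]
    visual_features = [2.0, 4.0]

    concatenated = fuse_concat(text_features, visual_features)
    multiplied = fuse_multiply(text_features, visual_features)

    print("concatenation:", concatenated)
    print("element-wise product:", multiplied)
    print("toy score:", predict_quality(multiplied, [0.5, 0.25]))
```

Concatenation keeps both modalities intact and lets the downstream model learn how to weigh them, at the cost of a larger input. Element-wise multiplication keeps the dimension fixed but requires the two vectors to be projected to the same size first.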

Similar Papers

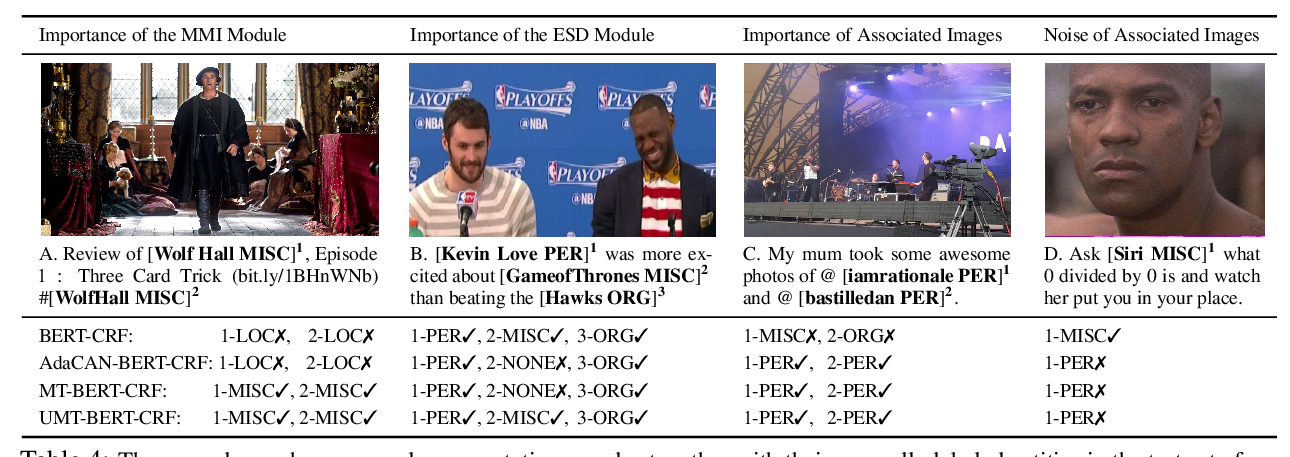

Improving Multimodal Named Entity Recognition via Entity Span Detection with Unified Multimodal Transformer

Jianfei Yu, Jing Jiang, Li Yang, Rui Xia

Integrating Multimodal Information in Large Pretrained Transformers

Wasifur Rahman, Md Kamrul Hasan, Sangwu Lee, AmirAli Bagher Zadeh, Chengfeng Mao, Louis-Philippe Morency, Ehsan Hoque