Bridging Anaphora Resolution as Question Answering

Yufang Hou

Discourse and Pragmatics Long Paper

Session 2B: Jul 6 (09:00-10:00 GMT)

Session 3B: Jul 6 (13:00-14:00 GMT)

Abstract:

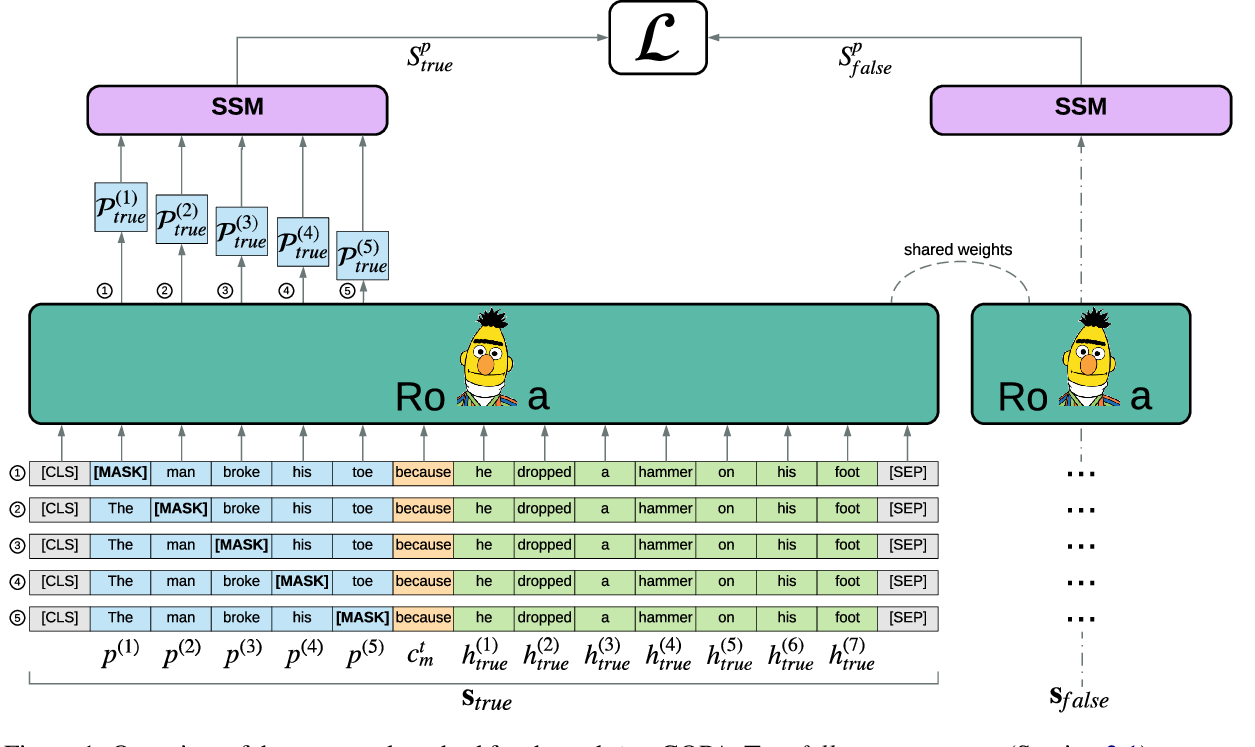

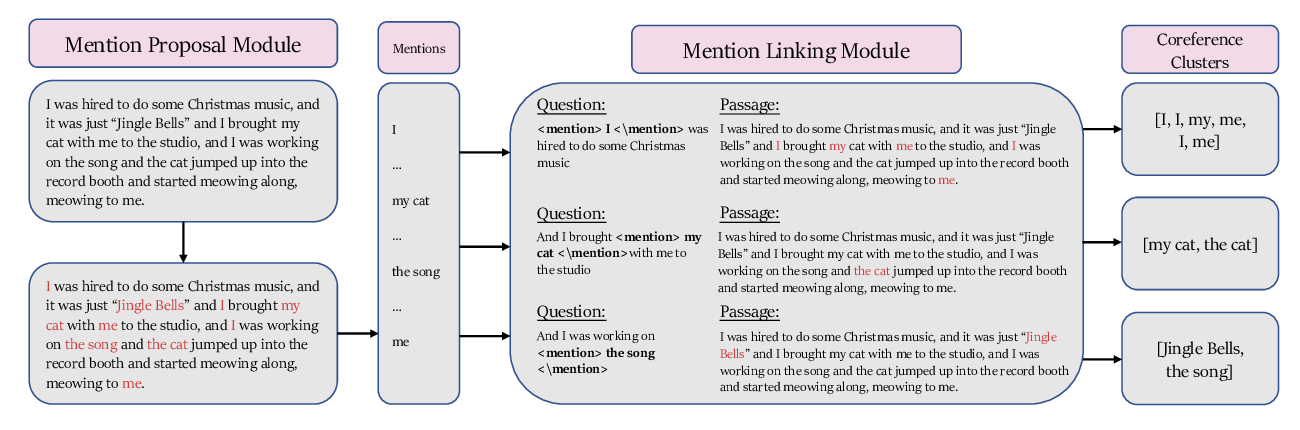

Most previous studies on bridging anaphora resolution (Poesio et al., 2004; Hou et al., 2013b; Hou, 2018a) use the pairwise model to tackle the problem and assume that the gold mention information is given. In this paper, we cast bridging anaphora resolution as question answering based on context. This allows us to find the antecedent for a given anaphor without knowing any gold mention information (except the anaphor itself). We present a question answering framework (BARQA) for this task, which leverages the power of transfer learning. Furthermore, we propose a novel method to generate a large amount of “quasi-bridging” training data. We show that our model pre-trained on this dataset and fine-tuned on a small amount of in-domain dataset achieves new state-of-the-art results for bridging anaphora resolution on two bridging corpora (ISNotes (Markert et al., 2012) and BASHI (Rösiger, 2018)).
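To make the question answering formulation concrete, here is a minimal sketch of how one bridging anaphor and its context might be packed into an extractive QA example. The abstract does not specify the question wording or the data format, so the "of what?" template, the `QAExample` fields, and the `build_qa_example` helper are all assumptions made for illustration, not the BARQA implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QAExample:
    """One extractive QA instance (hypothetical format, SQuAD-like)."""
    context: str
    question: str
    answer_text: Optional[str]   # antecedent string; None at prediction time
    answer_start: Optional[int]  # character offset of the antecedent in context


def build_qa_example(
    context: str,
    anaphor: str,
    antecedent: Optional[str] = None,
    template: str = "{anaphor} of what?",  # assumed template, not from the abstract
) -> QAExample:
    """Turn an anaphor and its surrounding context into a QA example.

    Only the anaphor is required; the antecedent is supplied for training
    data and omitted when the model is asked to predict it.
    """
    if anaphor not in context:
        raise ValueError("anaphor must occur in the context")
    question = template.format(anaphor=anaphor)
    if antecedent is None:
        return QAExample(context, question, None, None)
    start = context.find(antecedent)
    if start < 0:
        raise ValueError("antecedent must occur in the context")
    return QAExample(context, question, antecedent, start)


context = "The house was sold last year. The windows had been replaced."
example = build_qa_example(context, anaphor="The windows", antecedent="The house")
print(example.question)
print(example.answer_start)
```

An example in this shape could be passed to any span-prediction reader. The point of the sketch is that nothing beyond the anaphor and the raw context is needed to pose the question, which matches the abstract's claim that no other gold mention information is required.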

Similar Papers

Pre-training Is (Almost) All You Need: An Application to Commonsense Reasoning

Alexandre Tamborrino, Nicola Pellicanò, Baptiste Pannier, Pascal Voitot, Louise Naudin

CorefQA: Coreference Resolution as Query-based Span Prediction

Wei Wu, Fei Wang, Arianna Yuan, Fei Wu, Jiwei Li

Transferring Monolingual Model to Low-Resource Language: The Case of Tigrinya

Abrhalei Frezghi Tela, Abraham Woubie Zewoudie, Ville Hautamäki