ENGINE: Energy-Based Inference Networks for Non-Autoregressive Machine Translation

Lifu Tu, Richard Yuanzhe Pang, Sam Wiseman, Kevin Gimpel

Machine Translation Short Paper

Session 4B: Jul 6 (18:00-19:00 GMT)

Session 5A: Jul 6 (20:00-21:00 GMT)

Abstract:

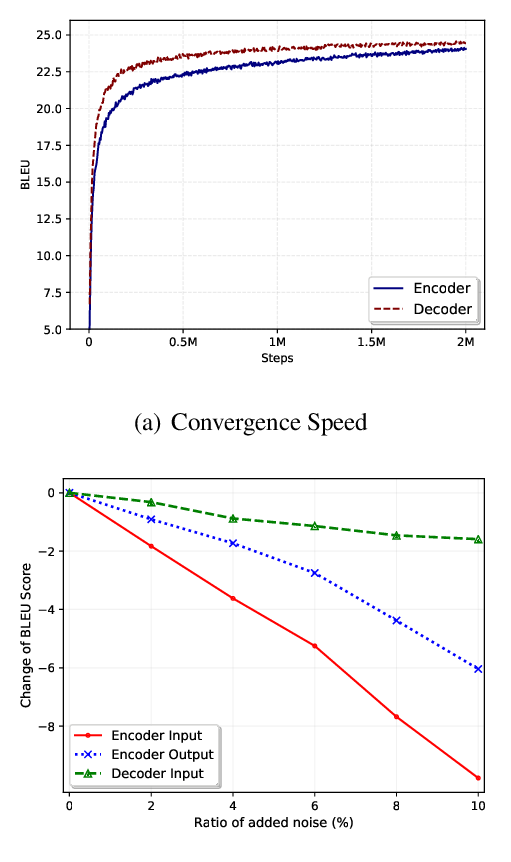

We propose to train a non-autoregressive machine translation model to minimize the energy defined by a pretrained autoregressive model. In particular, we view our non-autoregressive translation system as an inference network (Tu and Gimpel, 2018) trained to minimize the autoregressive teacher energy. This contrasts with the popular approach of training a non-autoregressive model on a distilled corpus consisting of the beam-searched outputs of such a teacher model. Our approach, which we call ENGINE (ENerGy-based Inference NEtworks), achieves state-of-the-art non-autoregressive results on the IWSLT 2014 DE-EN and WMT 2016 RO-EN datasets, approaching the performance of autoregressive models.
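As a rough illustration of the energy being minimized, the sketch below scores a relaxed (soft) translation under a toy autoregressive teacher. It is not the authors' implementation. The names `relaxed_energy`, `TEACHER`, and `BOS` are hypothetical, the teacher is a hand-written bigram table rather than a pretrained model, and the source sentence is assumed to be fixed and already folded into the table. Only the energy computation is shown, not the training of the inference network.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def relaxed_energy(teacher, bos, soft_outputs):
    """Negative log-likelihood of a relaxed output under a toy bigram teacher.

    teacher[i][j] is the (hypothetical) teacher logit for token j given
    previous token i. soft_outputs holds one distribution over the
    vocabulary per target position, as a non-autoregressive model would
    emit for all positions at once.
    """
    vocab = len(bos)
    energy = 0.0
    prev = bos  # distribution over the previous token
    for q in soft_outputs:
        # Condition the teacher on the expected previous token.
        logits = [sum(prev[i] * teacher[i][j] for i in range(vocab))
                  for j in range(vocab)]
        log_p = log_softmax(logits)
        # Expected negative log-probability of this position's output.
        energy -= sum(q[j] * log_p[j] for j in range(vocab))
        prev = q
    return energy


# Toy teacher over 3 tokens that prefers the cycle 0 -> 1 -> 2 -> 0.
TEACHER = [[0.0, 4.0, 0.0],
           [0.0, 0.0, 4.0],
           [4.0, 0.0, 0.0]]
BOS = [1.0, 0.0, 0.0]

follows_teacher = [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0]]
ignores_teacher = [[1.0, 0.0, 0.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]]

low = relaxed_energy(TEACHER, BOS, follows_teacher)
high = relaxed_energy(TEACHER, BOS, ignores_teacher)
print(low < high)
```

In this toy setting, an output that follows the teacher's preferences receives lower energy than one that ignores them. Because the energy is computed on soft distributions, it could in principle be differentiated with respect to the outputs of an inference network.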

Similar Papers

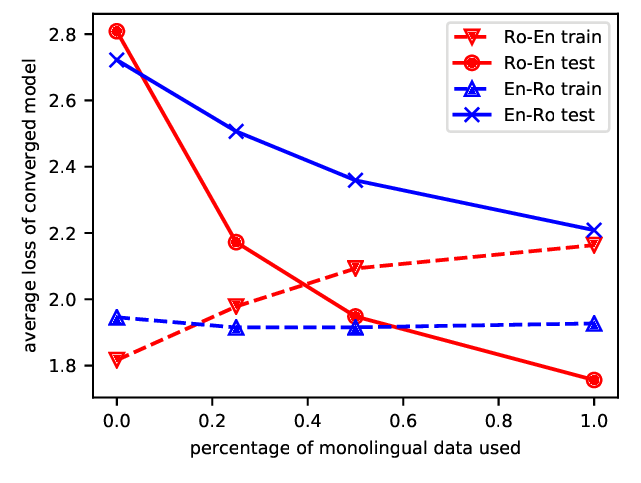

Improving Non-autoregressive Neural Machine Translation with Monolingual Data

Jiawei Zhou, Phillip Keung

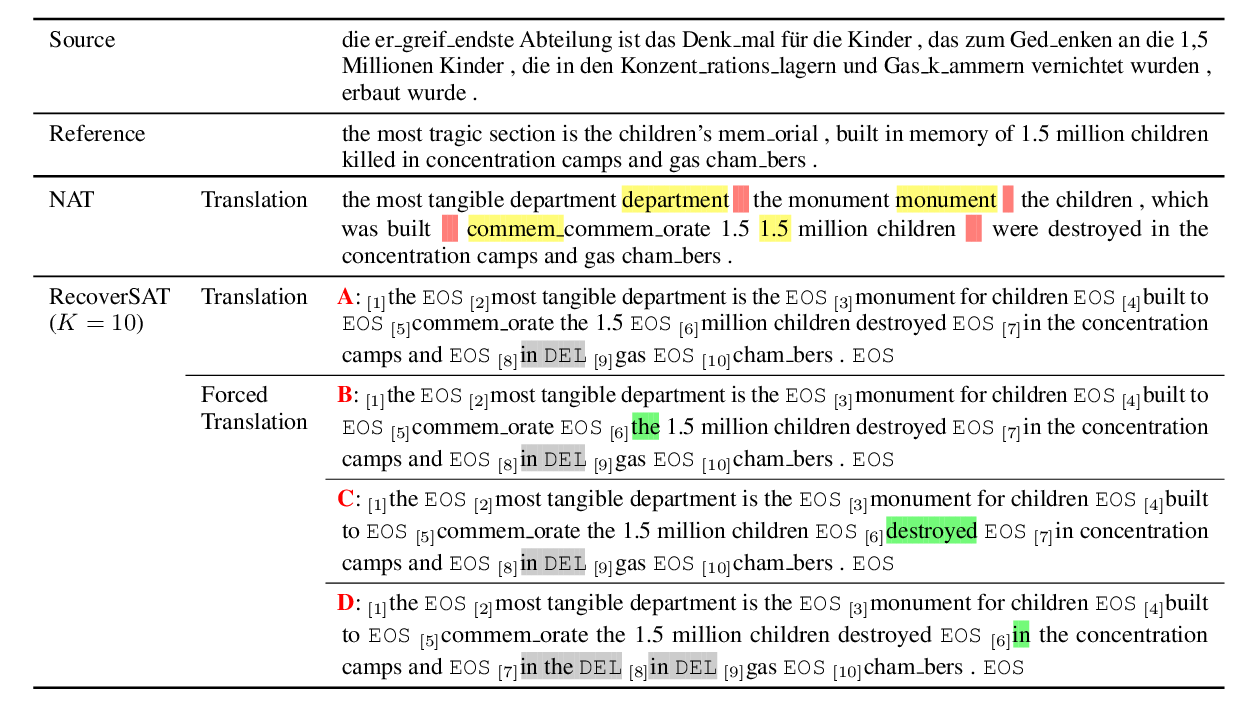

Learning to Recover from Multi-Modality Errors for Non-Autoregressive Neural Machine Translation

Qiu Ran, Yankai Lin, Peng Li, Jie Zhou

Jointly Masked Sequence-to-Sequence Model for Non-Autoregressive Neural Machine Translation

Junliang Guo, Linli Xu, Enhong Chen

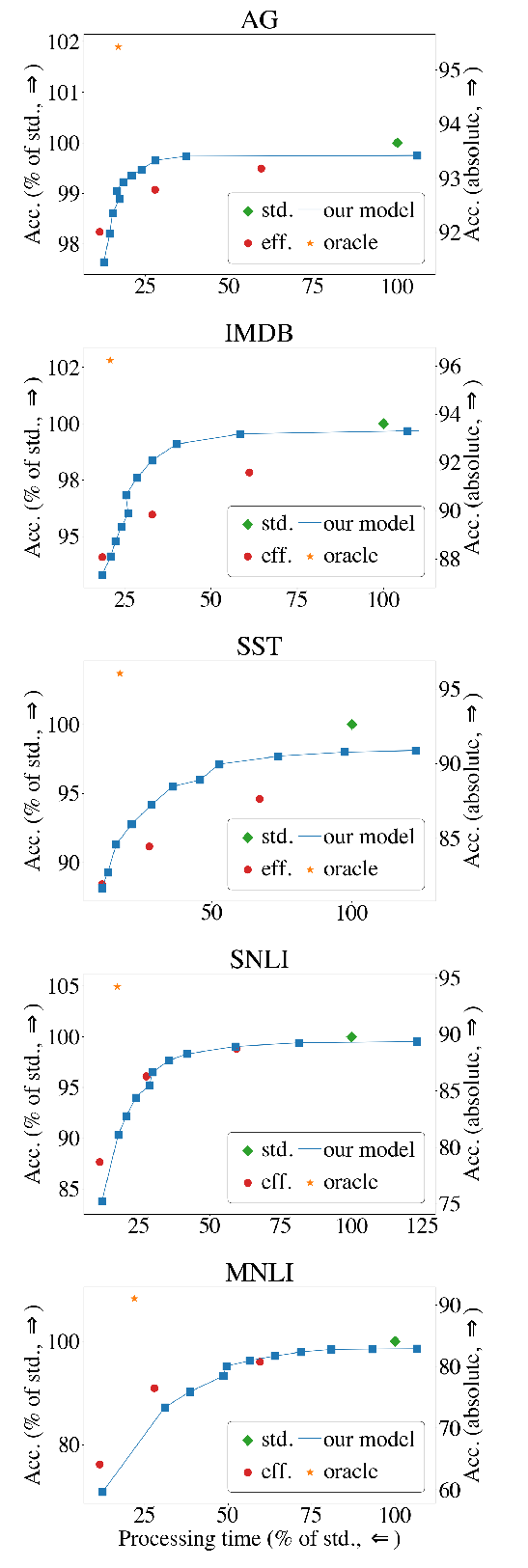

The Right Tool for the Job: Matching Model and Instance Complexities

Roy Schwartz, Gabriel Stanovsky, Swabha Swayamdipta, Jesse Dodge, Noah A. Smith