Knowledge Supports Visual Language Grounding: A Case Study on Colour Terms

Simeon Schüz, Sina Zarrieß

Language Grounding to Vision, Robotics and Beyond (Short Paper)

Session 11B: Jul 8 (06:00-07:00 GMT)

Session 15A: Jul 8 (20:00-21:00 GMT)

Abstract:

In human cognition, world knowledge supports the perception of object colours: knowing that trees are typically green helps to perceive their colour in certain contexts. We go beyond previous studies on colour terms using isolated colour swatches and study visual grounding of colour terms in realistic objects. Our models integrate processing of visual information and object-specific knowledge via hard-coded (late) or learned (early) fusion. We find that both models consistently outperform a bottom-up baseline that predicts colour terms solely from visual inputs, but show interesting differences when predicting atypical colours of so-called colour diagnostic objects. Our models also achieve promising results when tested on new object categories not seen during training.
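To make the idea of late fusion concrete, the following is a minimal sketch rather than the authors' implementation. It assumes that a bottom-up classifier yields a probability distribution over colour terms and that object-specific knowledge is available as a prior distribution over colours for each object category. Combining the two by element-wise multiplication and renormalisation is one simple hard-coded scheme chosen here for illustration. The function names, the colour vocabulary, and all numbers are hypothetical.

```python
from typing import Dict

Distribution = Dict[str, float]


def normalise(scores: Distribution) -> Distribution:
    """Rescale non-negative scores so that they sum to 1."""
    total = sum(scores.values())
    if total <= 0:
        raise ValueError("scores must have a positive sum")
    return {colour: score / total for colour, score in scores.items()}


def late_fusion(visual_probs: Distribution, object_prior: Distribution) -> Distribution:
    """Combine visual evidence with an object-specific colour prior.

    Assumption for this sketch: fusion is an element-wise product of the two
    distributions followed by renormalisation. Nothing is learned here.
    """
    fused = {c: visual_probs[c] * object_prior[c] for c in visual_probs}
    return normalise(fused)


def predict(probs: Distribution) -> str:
    """Return the colour term with the highest probability."""
    return max(probs, key=probs.get)


# Hypothetical bottom-up output for an image region showing a tree:
# the visual signal alone slightly favours "red".
visual = {"red": 0.40, "green": 0.35, "blue": 0.15, "yellow": 0.10}

# Hypothetical object-specific knowledge: trees are typically green.
tree_prior = {"red": 0.05, "green": 0.80, "blue": 0.05, "yellow": 0.10}

fused = late_fusion(visual, tree_prior)
print(predict(visual))  # bottom-up prediction
print(predict(fused))   # prediction after late fusion
```

In this toy example the prior shifts the prediction from "red" to "green". The same mechanism would work against a genuinely red tree, which illustrates why atypical colours of colour diagnostic objects are a difficult case for knowledge-based fusion. An early-fusion variant would instead feed visual features and an object representation jointly into a trained classifier, so the combination is learned rather than fixed.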


Similar Papers

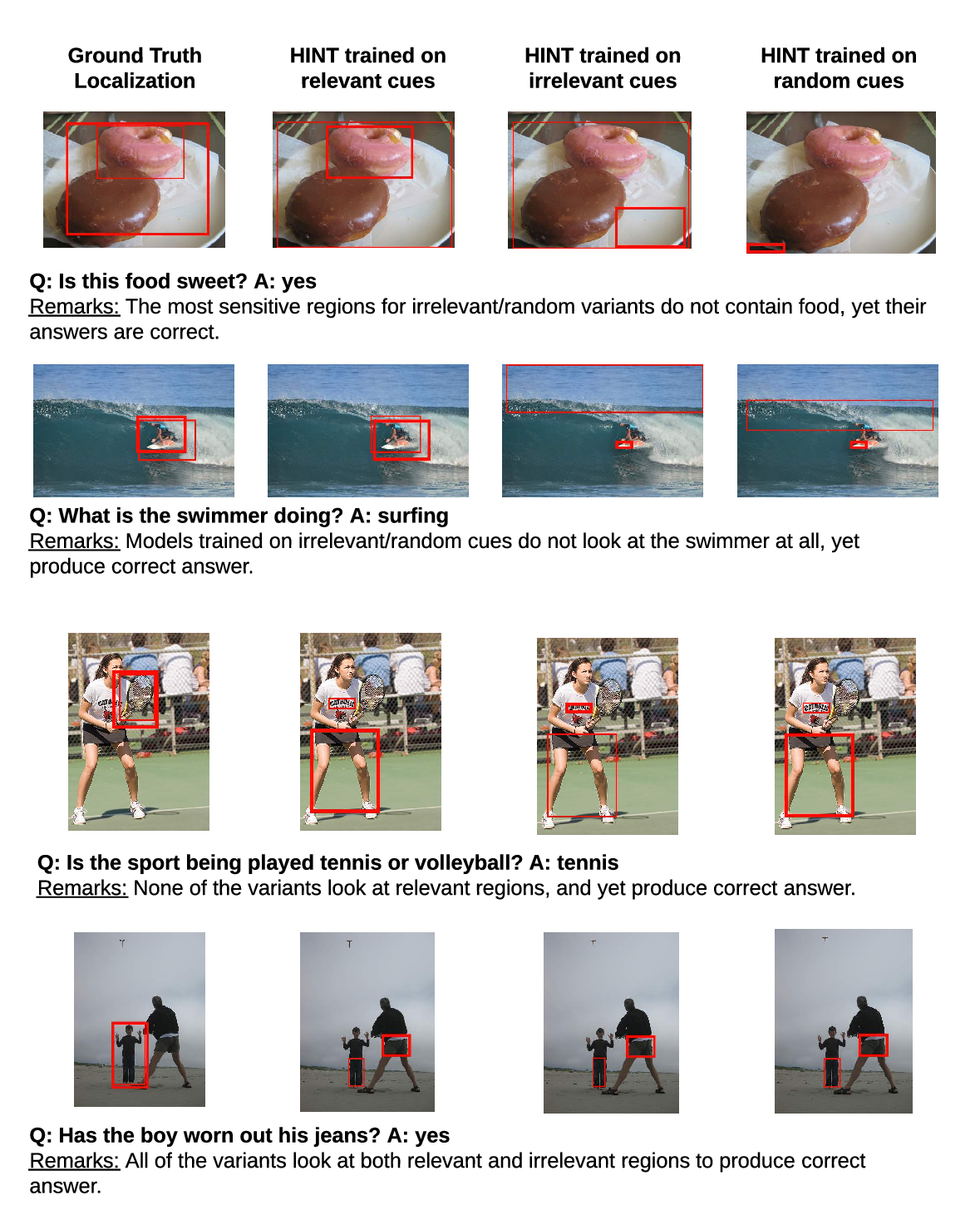

A negative case analysis of visual grounding methods for VQA

Robik Shrestha, Kushal Kafle, Christopher Kanan,

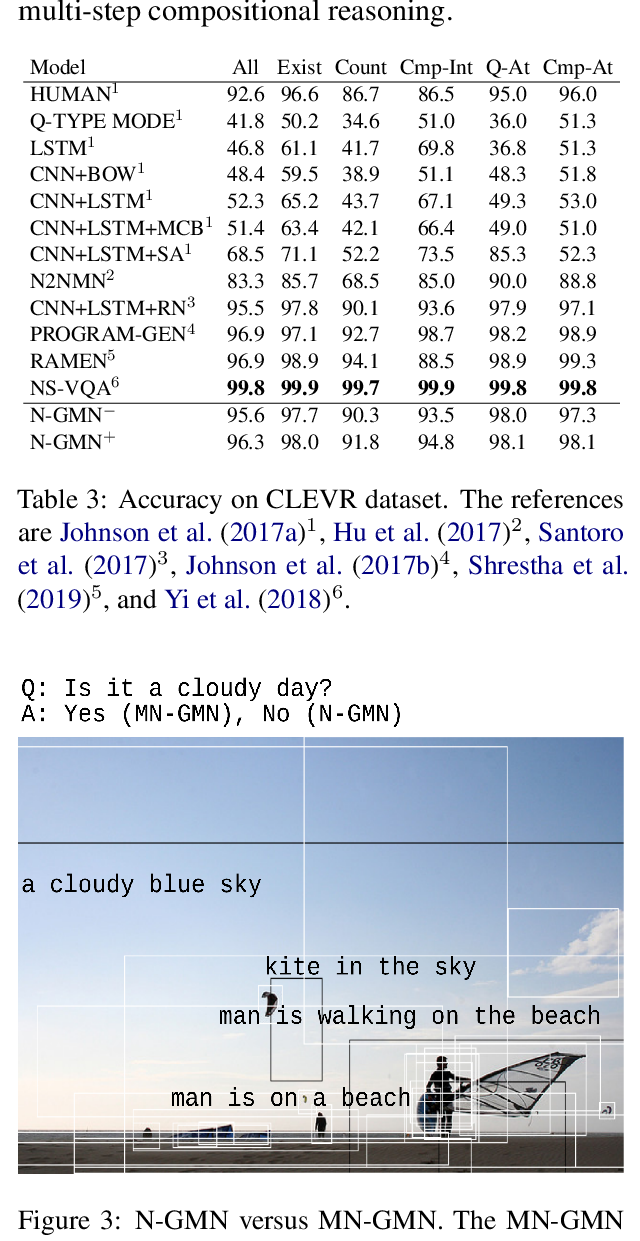

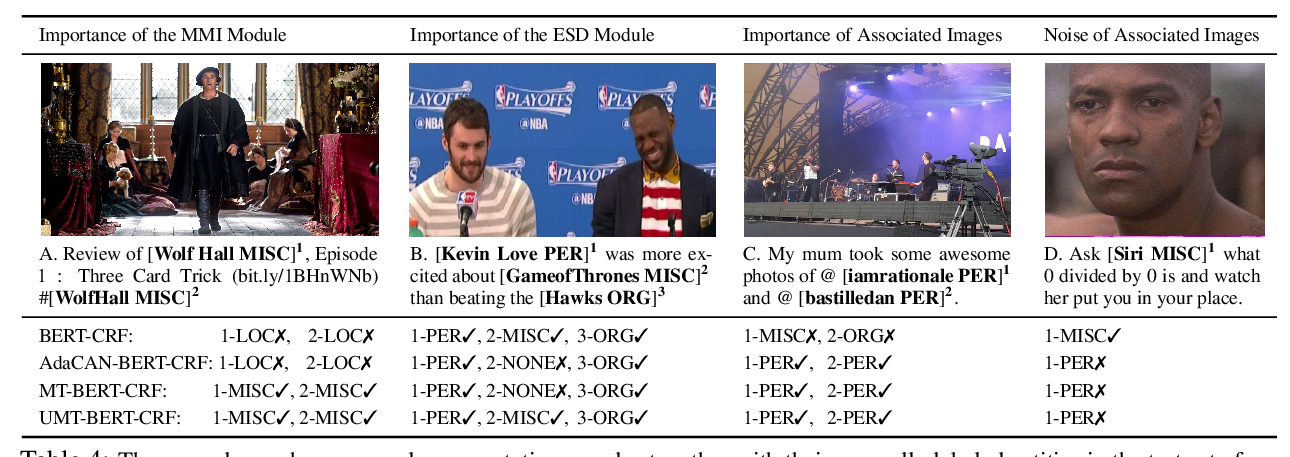

Improving Multimodal Named Entity Recognition via Entity Span Detection with Unified Multimodal Transformer

Jianfei Yu, Jing Jiang, Li Yang, Rui Xia,

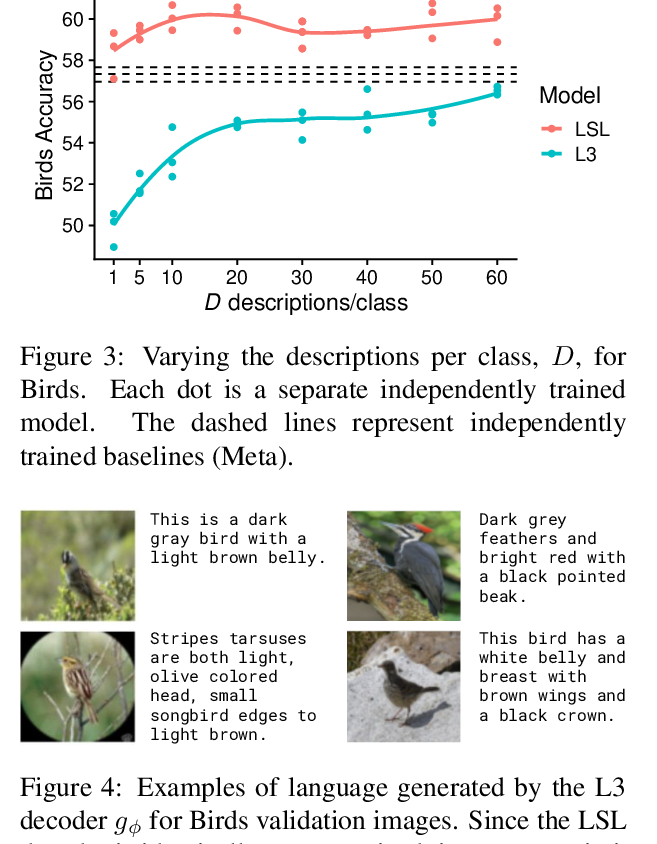

Shaping Visual Representations with Language for Few-Shot Classification

Jesse Mu, Percy Liang, Noah Goodman,