Improving Entity Linking through Semantic Reinforced Entity Embeddings

Feng Hou, Ruili Wang, Jun He, Yi Zhou

Information Extraction Short Paper

Session 12A: Jul 8 (08:00-09:00 GMT)

Session 13A: Jul 8 (12:00-13:00 GMT)

Abstract:

Entity embeddings, which represent different aspects of each entity with a single vector like word embeddings, are a key component of neural entity linking models. Existing entity embeddings are learned from canonical Wikipedia articles and local contexts surrounding target entities. Such entity embeddings are effective, but too distinctive for linking models to learn contextual commonality. We propose a simple yet effective method, FGS2EE, to inject fine-grained semantic information into entity embeddings to reduce the distinctiveness and facilitate the learning of contextual commonality. FGS2EE first uses the embeddings of semantic type words to generate semantic embeddings, and then combines them with existing entity embeddings through linear aggregation. Extensive experiments show the effectiveness of such embeddings. Based on our entity embeddings, we achieved new state-of-the-art performance on entity linking.
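The two steps named in the abstract (building a semantic embedding from type-word embeddings, then linearly aggregating it with an existing entity embedding) can be illustrated with a minimal sketch. The abstract does not give the exact formulas, so everything specific here is an assumption: the semantic embedding is taken as the plain average of the type-word vectors, the aggregation is a convex combination weighted by a hypothetical parameter `alpha`, and the function names, toy vectors, and type words are invented for illustration only.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def mean_vector(vectors: Sequence[Sequence[float]]) -> Vector:
    """Element-wise mean of equally sized vectors."""
    if not vectors:
        raise ValueError("need at least one vector")
    dim = len(vectors[0])
    if any(len(v) != dim for v in vectors):
        raise ValueError("all vectors must have the same dimension")
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def semantic_embedding(type_words: Sequence[str],
                       word_vectors: Dict[str, Vector]) -> Vector:
    """Assumed step 1: average the embeddings of an entity's semantic type words.

    Type words missing from the vocabulary are skipped.
    """
    known = [word_vectors[w] for w in type_words if w in word_vectors]
    return mean_vector(known)


def reinforce(entity_vec: Sequence[float],
              semantic_vec: Sequence[float],
              alpha: float = 0.5) -> Vector:
    """Assumed step 2: linear aggregation (1 - alpha) * entity + alpha * semantic.

    `alpha` is a hypothetical mixing weight, not a value taken from the paper.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(entity_vec) != len(semantic_vec):
        raise ValueError("embeddings must have the same dimension")
    return [(1.0 - alpha) * e + alpha * s
            for e, s in zip(entity_vec, semantic_vec)]


# Toy 2-dimensional data, invented for illustration.
toy_word_vectors = {"politician": [1.0, 0.0], "lawyer": [0.0, 1.0]}
toy_entity_vec = [0.5, -0.5]

toy_semantic = semantic_embedding(["politician", "lawyer"], toy_word_vectors)
toy_reinforced = reinforce(toy_entity_vec, toy_semantic, alpha=0.5)
print(toy_reinforced)
```

Under these assumptions, entities that share type words are pulled toward a common semantic vector, which is one way to read the abstract's goal of reducing distinctiveness; a larger `alpha` pulls harder.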

Similar Papers

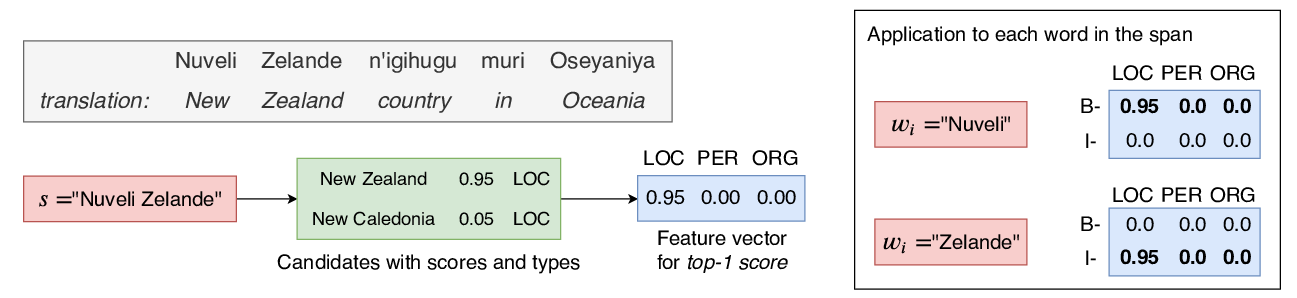

Soft Gazetteers for Low-Resource Named Entity Recognition

Shruti Rijhwani, Shuyan Zhou, Graham Neubig, Jaime Carbonell

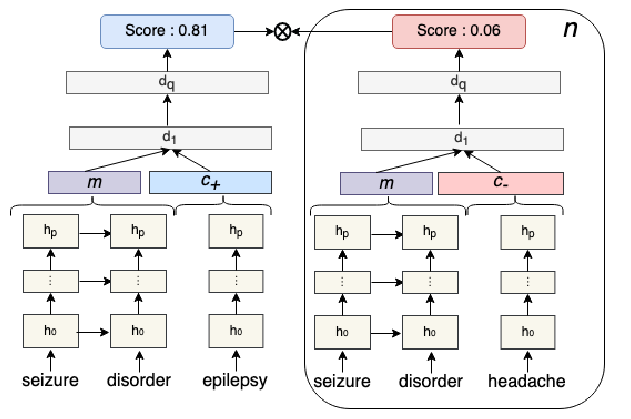

Clinical Concept Linking with Contextualized Neural Representations

Elliot Schumacher, Andriy Mulyar, Mark Dredze

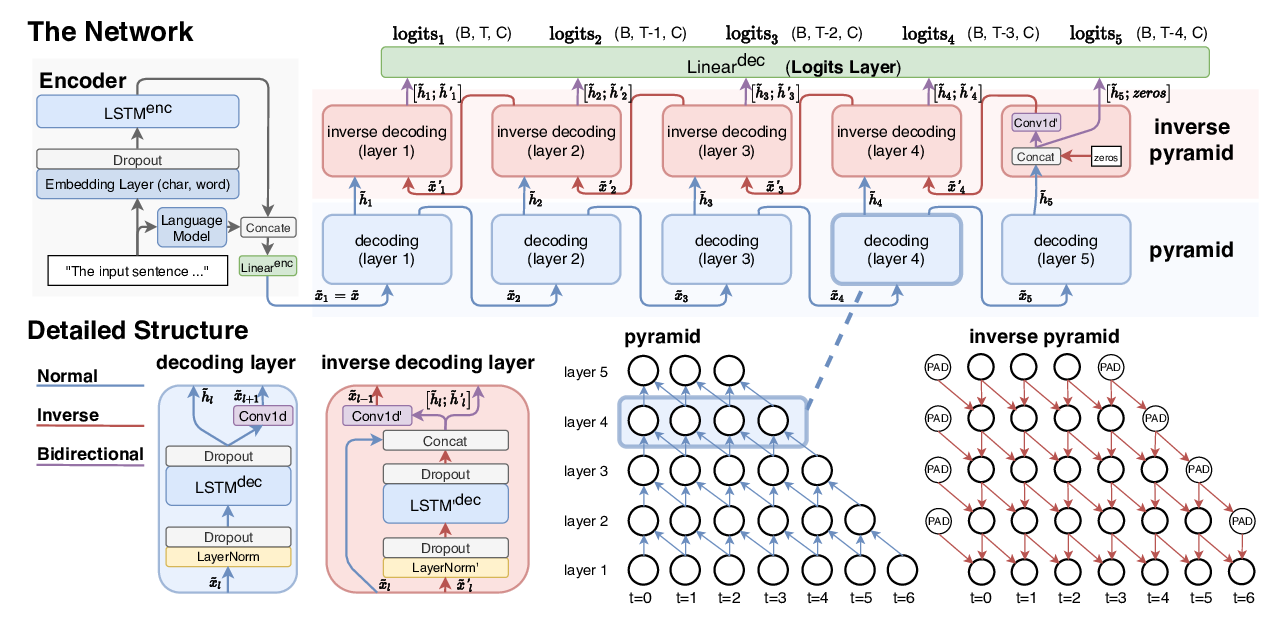

Pyramid: A Layered Model for Nested Named Entity Recognition

Jue Wang, Lidan Shou, Ke Chen, Gang Chen