Predicting Performance for Natural Language Processing Tasks

Mengzhou Xia, Antonios Anastasopoulos, Ruochen Xu, Yiming Yang, Graham Neubig

NLP Applications Long Paper

Session 14B: Jul 8

(18:00-19:00 GMT)

Session 15B: Jul 8

(21:00-22:00 GMT)

Abstract:

Given the complexity of combinations of tasks, languages, and domains in natural language processing (NLP) research, it is computationally prohibitive to exhaustively test newly proposed models on each possible experimental setting. In this work, we attempt to explore the possibility of gaining plausible judgments of how well an NLP model can perform under an experimental setting, without actually training or testing the model. To do so, we build regression models to predict the evaluation score of an NLP experiment given the experimental settings as input. Experimenting on~9 different NLP tasks, we find that our predictors can produce meaningful predictions over unseen languages and different modeling architectures, outperforming reasonable baselines as well as human experts. %we represent experimental settings using an array of features. Going further, we outline how our predictor can be used to find a small subset of representative experiments that should be run in order to obtain plausible predictions for all other experimental settings. (Code, data and logs are publicly available at https://github.com/xiamengzhou/NLPerf.)

You can open the

pre-recorded video

in a separate window.

NOTE: The SlidesLive video may display a random order of the authors.

The correct author list is shown at the top of this webpage.

Similar Papers

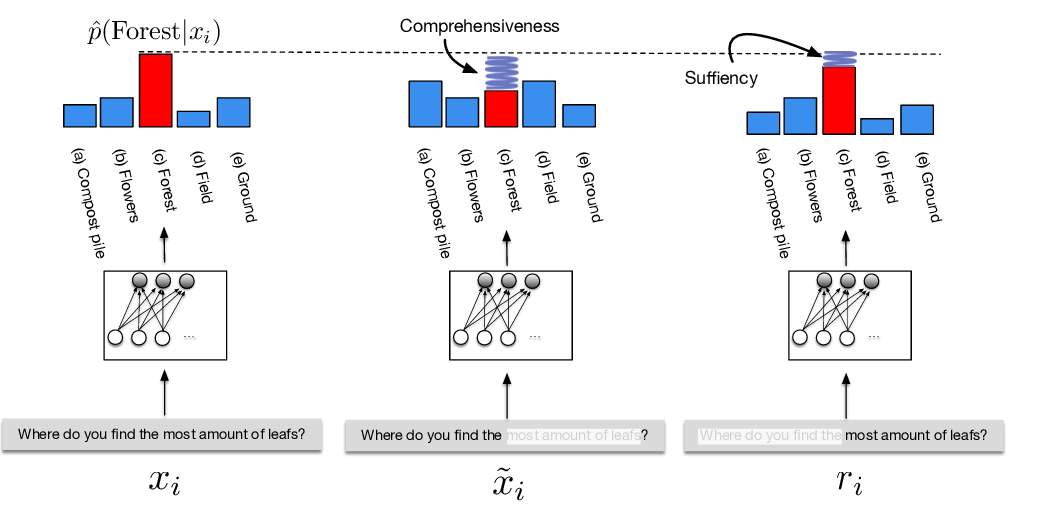

ERASER: A Benchmark to Evaluate Rationalized NLP Models

Jay DeYoung, Sarthak Jain, Nazneen Fatema Rajani, Eric Lehman, Caiming Xiong, Richard Socher, Byron C. Wallace,

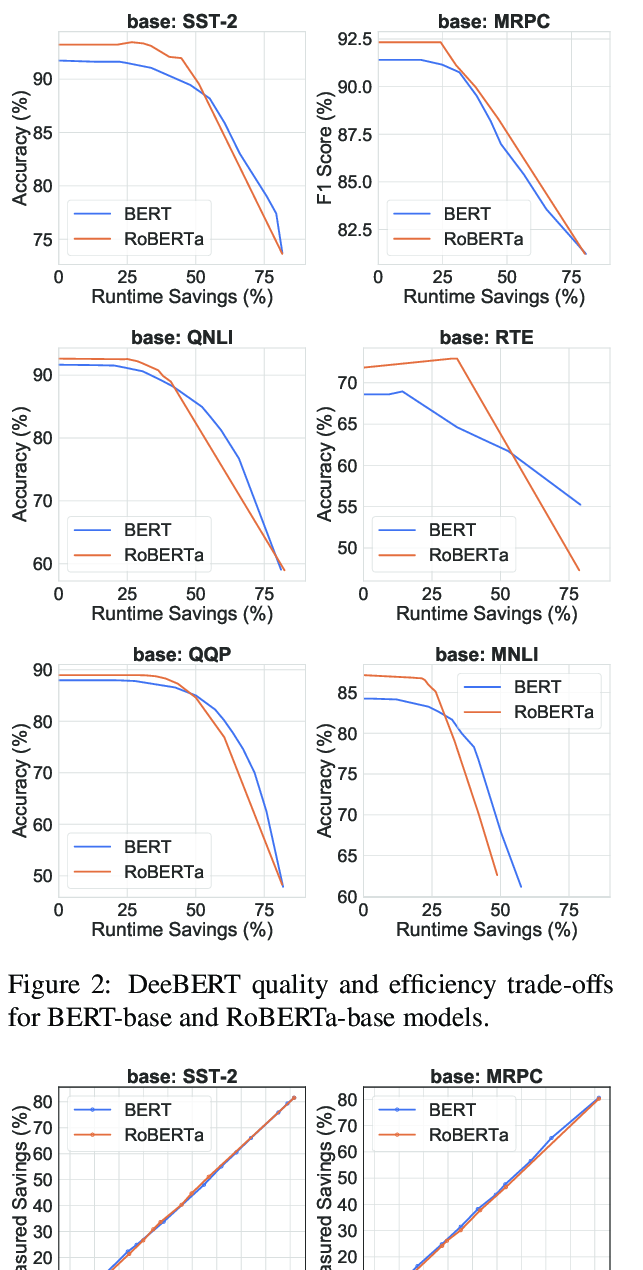

DeeBERT: Dynamic Early Exiting for Accelerating BERT Inference

Ji Xin, Raphael Tang, Jaejun Lee, Yaoliang Yu, Jimmy Lin,

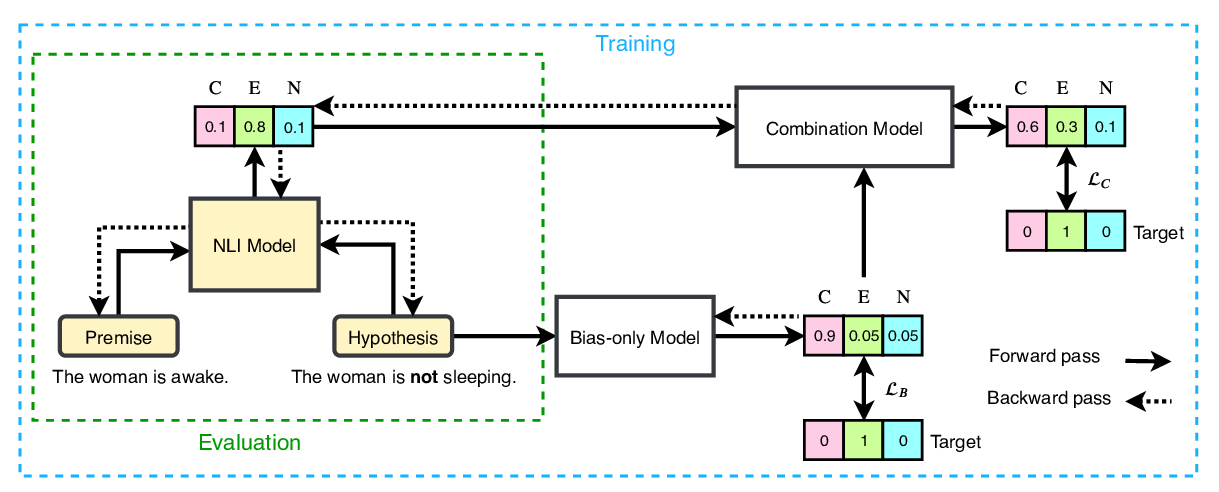

End-to-End Bias Mitigation by Modelling Biases in Corpora

Rabeeh Karimi Mahabadi, Yonatan Belinkov, James Henderson,

Information-Theoretic Probing for Linguistic Structure

Tiago Pimentel, Josef Valvoda, Rowan Hall Maudslay, Ran Zmigrod, Adina Williams, Ryan Cotterell,